Neural correlates of consciousness

The neural correlates of consciousness (NCC) constitute the minimal set of neuronal events and mechanisms sufficient for a specific conscious percept.[2] Neuroscientists use empirical approaches to discover neural correlates of subjective phenomena; that is, neural changes which necessarily and regularly correlate with a specific experience.[3][4] The set should be minimal because, under the assumption that the brain is sufficient to give rise to any given conscious experience, the question is which of its components is necessary to produce it.

Neurobiological approach to consciousness

A science of consciousness must explain the exact relationship between subjective mental states and brain states, that is, the nature of the relationship between the conscious mind and the electrochemical interactions in the body (the mind–body problem). Progress in neuropsychology and neurophilosophy has come from focusing on the body rather than the mind. In this context, the neuronal correlates of consciousness may be viewed as its causes, and consciousness may be thought of as a state-dependent property of some undefined complex, adaptive, and highly interconnected biological system.[5]

Discovering and characterizing neural correlates does not offer a theory of consciousness that can explain how particular systems experience anything at all, or how and why they are associated with consciousness, the so-called hard problem of consciousness,[6] but understanding the NCC may be a step toward such a theory. Most neurobiologists assume that the variables giving rise to consciousness are to be found at the neuronal level, governed by classical physics, though a few scholars have proposed theories of quantum consciousness based on quantum mechanics.[7]

Neural networks show a great deal of apparent redundancy and parallelism, so while activity in one group of neurons may correlate with a percept in one case, a different population might mediate a related percept if the former population is lost or inactivated. It may be that every phenomenal, subjective state has a neural correlate. Where the NCC can be induced artificially, the subject will experience the associated percept, while perturbing or inactivating the region of correlation for a specific percept will affect the percept or cause it to disappear, establishing a cause-effect relationship from the neural region to the nature of the percept.

What characterizes the NCC? What are the commonalities between the NCC for seeing and for hearing? Will the NCC involve all the pyramidal neurons in the cortex at any given point in time? Or only a subset of long-range projection cells in the frontal lobes that project to the sensory cortices in the back? Neurons that fire in a rhythmic manner? Neurons that fire in a synchronous manner? These are some of the proposals that have been advanced over the years.[8]

The growing ability of neuroscientists to manipulate neurons using methods from molecular biology in combination with optical tools (e.g., Adamantidis et al. 2007) depends on the simultaneous development of appropriate behavioral assays and model organisms amenable to large-scale genomic analysis and manipulation. It is the combination of such fine-grained neuronal analysis in animals with ever more sensitive psychophysical and brain imaging techniques in humans, complemented by the development of a robust theoretical predictive framework, that will hopefully lead to a rational understanding of consciousness, one of the central mysteries of life.

Level of arousal and content of consciousness

There are two common but distinct dimensions of the term consciousness,[9] one involving arousal and states of consciousness and the other involving the content of consciousness and conscious states. To be conscious of anything, the brain must be in a relatively high state of arousal (sometimes called vigilance), whether in wakefulness or in REM sleep, which is vividly experienced in dreams although these are usually not remembered. Brain arousal level fluctuates in a circadian rhythm but may be influenced by lack of sleep, drugs and alcohol, physical exertion, etc. Arousal can be measured behaviorally by the signal amplitude that triggers some criterion reaction (for instance, the sound level necessary to evoke an eye movement or a head turn toward the sound source). Clinicians use scoring systems such as the Glasgow Coma Scale to assess the level of arousal in patients.
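The Glasgow Coma Scale mentioned above sums three separately scored components: eye opening (1–4), verbal response (1–5) and motor response (1–6), giving a total between 3 and 15. The Python sketch below only illustrates that arithmetic. The function names are hypothetical, and the severity bands (8 or less severe, 9–12 moderate, 13–15 mild) are the convention commonly applied to head injury rather than something stated in this article; the sketch is not a clinical tool.

```python
# Illustrative Glasgow Coma Scale (GCS) arithmetic; hypothetical helper names.
# Component ranges: eye opening 1-4, verbal response 1-5, motor response 1-6.
GCS_RANGES = {"eye": (1, 4), "verbal": (1, 5), "motor": (1, 6)}


def gcs_total(eye: int, verbal: int, motor: int) -> int:
    """Validate each component and return the summed score (3-15)."""
    scores = {"eye": eye, "verbal": verbal, "motor": motor}
    for name, value in scores.items():
        low, high = GCS_RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name} score {value} outside {low}-{high}")
    return eye + verbal + motor


def gcs_severity(total: int) -> str:
    """Map a total onto the severity bands commonly used for head injury (assumed convention)."""
    if not 3 <= total <= 15:
        raise ValueError(f"total {total} outside 3-15")
    if total <= 8:
        return "severe"
    if total <= 12:
        return "moderate"
    return "mild"


if __name__ == "__main__":
    total = gcs_total(eye=2, verbal=2, motor=4)
    print(total, gcs_severity(total))  # 8 severe
```

In practice the three components are usually reported alongside the total, since the same sum can arise from quite different patterns of responsiveness.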

High arousal states are associated with conscious states that have specific content: seeing, hearing, remembering, planning or fantasizing about something. Different levels or states of consciousness are associated with different kinds of conscious experiences. The "awake" state is quite different from the "dreaming" state (for instance, the latter has little or no self-reflection) and from the state of deep sleep. In all three cases the basic physiology of the brain is affected, as it also is in altered states of consciousness, for instance after taking drugs or during meditation, when conscious perception and insight may be enhanced compared to the normal waking state.

Clinicians talk about impaired states of consciousness as in "the comatose state", "the persistent vegetative state" (PVS), and "the minimally conscious state" (MCS). Here, "state" refers to different "amounts" of external/physical consciousness, from a total absence in coma, the persistent vegetative state and general anesthesia, to a fluctuating and limited form of conscious sensation in a minimally conscious state such as sleepwalking or during a complex partial epileptic seizure.[10] The repertoire of conscious states or experiences accessible to a patient in a minimally conscious state is comparatively limited. In brain death there is no arousal, but it is unknown whether the subjectivity of experience has been interrupted, rather than merely its observable link with the organism. Functional neuroimaging has shown that parts of the cortex are still active in vegetative patients who are presumed to be unconscious;[11] however, these areas appear to be functionally disconnected from associative cortical areas whose activity is needed for awareness.

The potential richness of conscious experience appears to increase from deep sleep to drowsiness to full wakefulness, as might be quantified using notions from complexity theory that incorporate both the dimensionality and the granularity of conscious experience to give an integrated-information-theoretical account of consciousness.[12] As behavioral arousal increases, so do the range and complexity of possible behavior. Yet REM sleep is characterized by atonia and low motor arousal, and the person is difficult to wake up, even though metabolic and electrical brain activity remain high and perception is vivid.

Many nuclei with distinct chemical signatures in the thalamus, midbrain and pons must function for a subject to be in a sufficient state of brain arousal to experience anything at all. These nuclei therefore belong to the enabling factors for consciousness. Conversely, it is likely that the specific content of any particular conscious sensation is mediated by particular neurons in the cortex and their associated satellite structures, including the amygdala, thalamus, claustrum and the basal ganglia.

The neuronal basis of perception

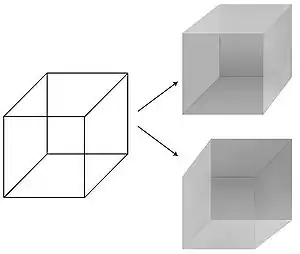

The possibility of precisely manipulating visual percepts in time and space has made vision a preferred modality in the quest for the NCC. Psychologists have perfected a number of techniques – masking, binocular rivalry, continuous flash suppression, motion-induced blindness, change blindness, inattentional blindness – in which the seemingly simple and unambiguous relationship between a physical stimulus in the world and its associated percept in the privacy of the subject's mind is disrupted.[13] In particular, a stimulus can be perceptually suppressed for seconds or even minutes at a time: the image is projected into one of the observer's eyes but is invisible, not seen. In this manner the neural mechanisms that respond to the subjective percept rather than the physical stimulus can be isolated, permitting visual consciousness to be tracked in the brain. In a bistable perceptual illusion, the physical stimulus remains fixed while the percept fluctuates. The best-known example is the Necker cube, whose 12 lines can be perceived in depth in one of two different ways.

A perceptual illusion that can be precisely controlled is binocular rivalry. Here, a small image, e.g., a horizontal grating, is presented to the left eye, and another image, e.g., a vertical grating, is shown to the corresponding location in the right eye. In spite of the constant visual stimulus, observers consciously see the horizontal grating alternate every few seconds with the vertical one. The brain does not allow for the simultaneous perception of both images.
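Alternation of this kind is often illustrated with a toy model that is not described in this article: two rate units, one per eye's image, inhibit each other while each slowly adapts to its own activity, so the dominant unit eventually weakens enough for the suppressed one to take over. The sketch below assumes threshold-linear units integrated with simple Euler steps; the function name `simulate_rivalry` and every parameter value are illustrative assumptions, not a fit to recorded data.

```python
def simulate_rivalry(duration_ms=10_000.0, dt=1.0, tau=10.0, tau_adapt=1000.0,
                     inhibition=2.0, adaptation_gain=3.0, drive=1.0):
    """Toy rivalry model: two mutually inhibiting, slowly adapting rate units.

    Returns the durations (ms) of completed dominance periods; the first entry
    includes the start-up transient from t = 0.
    """
    rates = [0.1, 0.0]       # unit 0 starts slightly ahead to break the symmetry
    adaptation = [0.0, 0.0]  # slow self-adaptation of each unit
    dominant, last_switch = 0, 0.0
    durations = []
    for step in range(1, int(duration_ms / dt) + 1):
        # Net input: constant drive minus inhibition from the rival and own adaptation.
        net = [drive - inhibition * rates[1 - i] - adaptation_gain * adaptation[i]
               for i in range(2)]
        # Fast threshold-linear rate dynamics, then slow adaptation tracking the rate.
        rates = [rates[i] + dt / tau * (max(0.0, net[i]) - rates[i]) for i in range(2)]
        adaptation = [adaptation[i] + dt / tau_adapt * (rates[i] - adaptation[i])
                      for i in range(2)]
        leader = 0 if rates[0] >= rates[1] else 1
        if leader != dominant:  # a "perceptual switch": record how long the old winner lasted
            now = step * dt
            durations.append(now - last_switch)
            dominant, last_switch = leader, now
    return durations


if __name__ == "__main__":
    periods = simulate_rivalry()
    print(len(periods), "switches; first periods (ms):", periods[:4])
```

Although the input to both units is constant, only one unit is strongly active at any moment. With these assumed values the dominance periods scale mainly with `tau_adapt`, so lengthening it stretches the alternation toward the multi-second periods seen in human observers.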

Logothetis and colleagues[15] recorded from a variety of visual cortical areas in awake macaque monkeys performing a binocular rivalry task. Macaque monkeys can be trained to report whether they see the left or the right image. The distribution of the switching times and the way in which changing the contrast in one eye affects them leave little doubt that monkeys and humans experience the same basic phenomenon. In the primary visual cortex (V1), only a small fraction of cells weakly modulated their response as a function of the percept of the monkey, while most cells responded to one or the other retinal stimulus with little regard to what the animal perceived at the time. But in a high-level cortical area such as the inferior temporal cortex along the ventral stream, almost all neurons responded only to the perceptually dominant stimulus, so that a "face" cell only fired when the animal indicated that it saw the face and not the pattern presented to the other eye. This implies that the NCC involve neurons active in the inferior temporal cortex; it is likely that specific reciprocal interactions between neurons in the inferior temporal cortex and parts of the prefrontal cortex are necessary.

A number of fMRI experiments that have exploited binocular rivalry and related illusions to identify the hemodynamic activity underlying visual consciousness in humans demonstrate quite conclusively that activity in the upper stages of the ventral pathway (e.g., the fusiform face area and the parahippocampal place area), as well as in early regions including V1 and the lateral geniculate nucleus (LGN), follows the percept and not the retinal stimulus.[16] Further, a number of fMRI[17][18] and DTI experiments[19] suggest that V1 is necessary but not sufficient for visual consciousness.[20]

In a related perceptual phenomenon, flash suppression, the percept associated with an image projected into one eye is suppressed by flashing another image into the other eye while the original image remains. Its methodological advantage over binocular rivalry is that the timing of the perceptual transition is determined by an external trigger rather than by an internal event. The majority of cells in the inferior temporal cortex and the superior temporal sulcus of monkeys trained to report their percept during flash suppression follow the animal's percept: when the cell's preferred stimulus is perceived, the cell responds. If the picture is still present on the retina but is perceptually suppressed, the cell falls silent, even though primary visual cortex neurons fire.[21][22] Single-neuron recordings in the medial temporal lobe of epilepsy patients during flash suppression likewise show that the response is abolished when the preferred stimulus is present but perceptually masked.[23]

Global disorders of consciousness

Given the absence of any accepted criterion of the minimal neuronal correlates necessary for consciousness, the distinction between a persistently vegetative patient who shows regular sleep–wake transitions and may be able to move or smile, and a minimally conscious patient who can communicate (on occasion) in a meaningful manner (for instance, by differential eye movements) and who shows some signs of consciousness, is often difficult. In general anesthesia, the patient should not experience psychological trauma, but the level of arousal should be compatible with clinical exigencies.

Blood-oxygen-level-dependent (BOLD) fMRI has demonstrated normal patterns of brain activity in a patient in a vegetative state following a severe traumatic brain injury when the patient was asked to imagine playing tennis or visiting the rooms of their house.[25] Differential brain imaging of patients with such global disturbances of consciousness (including akinetic mutism) reveals that dysfunction in a widespread cortical network including medial and lateral prefrontal and parietal associative areas is associated with a global loss of awareness.[26] Impaired consciousness in epileptic seizures of the temporal lobe was likewise accompanied by a decrease in cerebral blood flow in frontal and parietal association cortex and an increase in midline structures such as the mediodorsal thalamus.[27]

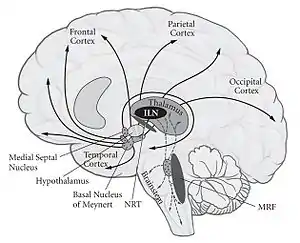

Relatively local bilateral injuries to midline (paramedian) subcortical structures can also cause a complete loss of awareness.[28] These structures therefore enable and control brain arousal (as determined by metabolic or electrical activity) and are necessary neural correlates. One such example is the heterogeneous collection of more than two dozen nuclei on each side of the upper brainstem (pons and midbrain) and in the posterior hypothalamus, collectively referred to as the reticular activating system (RAS). Their axons project widely throughout the brain. These nuclei – three-dimensional collections of neurons with their own cytoarchitecture and neurochemical identity – release distinct neuromodulators such as acetylcholine, noradrenaline/norepinephrine, serotonin, histamine and orexin/hypocretin to control the excitability of the thalamus and forebrain, mediating the alternation between wakefulness and sleep as well as the general level of behavioral and brain arousal. After such trauma, however, the excitability of the thalamus and forebrain can eventually recover and consciousness can return.[29] Other enabling factors for consciousness are the five or more intralaminar nuclei (ILN) of the thalamus. These receive input from many brainstem nuclei and project strongly and directly to the basal ganglia and, in a more distributed manner, into layer I of much of the neocortex. Comparatively small (1 cm³ or less) bilateral lesions in the thalamic ILN completely knock out all awareness.[30]

Forward versus feedback projections

Many actions in response to sensory inputs are rapid, transient, stereotyped, and unconscious.[31] They could be thought of as cortical reflexes, and they can take the form of rather complex automated behavior, as seen, e.g., in complex partial epileptic seizures. These automated responses, sometimes called zombie behaviors,[32] could be contrasted with a slower, all-purpose conscious mode that deals with broader, less stereotyped aspects of the sensory inputs (or a reflection of these, as in imagery) and takes time to decide on appropriate thoughts and responses. Without such a conscious mode, a vast number of different zombie modes would be required to react to unusual events.

A feature that distinguishes humans from most animals is that we are not born with an extensive repertoire of behavioral programs that would enable us to survive on our own ("physiological prematurity"). To compensate for this, we have an unmatched ability to learn, i.e., to consciously acquire such programs by imitation or exploration. Once consciously acquired and sufficiently exercised, these programs can become automated to the extent that their execution happens beyond the realm of our awareness. Take, as an example, the remarkable fine motor skills involved in playing a Beethoven piano sonata or the sensorimotor coordination required to ride a motorcycle along a curvy mountain road. Such complex behaviors are possible only because a sufficient number of the subprograms involved can be executed with minimal or even suspended conscious control. In fact, the conscious system may actually interfere somewhat with these automated programs.[33]

From an evolutionary standpoint, it clearly makes sense to have both automated behavioral programs that can be executed rapidly in a stereotyped manner and a slightly slower system that allows time for thinking and planning more complex behavior. This latter aspect may be one of the principal functions of consciousness. Some philosophers, however, have suggested that consciousness would not be necessary for any functional advantage in evolutionary processes.[34][35] No one has given a causal explanation, they argue, of why it would not be possible for a functionally equivalent non-conscious organism (i.e., a philosophical zombie) to achieve the very same survival advantages as a conscious organism. If evolutionary processes are blind to the difference between function F being performed by conscious organism O and by non-conscious organism O*, it is unclear what adaptive advantage consciousness could provide.[36] As a result, an exaptive explanation of consciousness has gained favor with some theorists, who posit that consciousness did not evolve as an adaptation but was an exaptation arising as a consequence of other developments such as increases in brain size or cortical rearrangement.[37] Consciousness in this sense has been compared to the blind spot in the retina, which is not an adaptation of the retina but just a by-product of the way the retinal axons were wired.[38] Several scholars, including Pinker, Chomsky, Edelman, and Luria, have emphasized the emergence of human language as an important regulative mechanism of learning and memory in the development of higher-order consciousness.

It seems possible that visual zombie modes in the cortex mainly use the dorsal stream in the parietal region.[31] However, parietal activity can affect consciousness by producing attentional effects on the ventral stream, at least under some circumstances. The conscious mode for vision depends largely on the early visual areas (beyond V1) and especially on the ventral stream.

Seemingly complex visual processing (such as detecting animals in natural, cluttered scenes) can be accomplished by the human cortex within 130–150 ms,[39][40] far too brief for eye movements and conscious perception to occur. Furthermore, reflexes such as the oculovestibular reflex take place on even faster time scales. It is quite plausible that such behaviors are mediated by a purely feed-forward wave of spiking activity that passes from the retina through V1 into V4, IT and prefrontal cortex, until it affects motor neurons in the spinal cord that control the finger press (as in a typical laboratory experiment). The hypothesis that the basic processing of information is feedforward is supported most directly by the short times (approx. 100 ms) required for a selective response to appear in IT cells.

Conversely, conscious perception is believed to require more sustained, reverberatory neural activity, most likely via global feedback from frontal regions of the neocortex back to sensory cortical areas,[20] that builds up over time until it exceeds a critical threshold. At this point, the sustained neural activity rapidly propagates to parietal, prefrontal and anterior cingulate cortical regions, thalamus, claustrum and related structures that support short-term memory, multi-modality integration, planning, speech, and other processes intimately related to consciousness. Competition prevents more than one or a very small number of percepts from being simultaneously and actively represented. This is the core hypothesis of the global workspace theory of consciousness.[41][42]
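The threshold idea in this account can be caricatured with a single rate unit that excites itself through a steep sigmoid. This is a hypothetical toy, not the network model of Dehaene et al.: the names, drive values and time constant below are assumptions chosen only so that the unit is bistable. A brief or weak input decays away, whereas an input held long enough to push activity past the unstable midpoint (about 0.5 here) triggers self-sustaining activity that outlasts the stimulus. Competition between percepts is not modelled.

```python
import math


def recurrent_drive(x, threshold=0.5, steepness=0.05):
    """Steep sigmoid feedback: close to 0 below the threshold, close to 1 above it."""
    return 1.0 / (1.0 + math.exp(-(x - threshold) / steepness))


def simulate_ignition(input_ms, drive=0.6, total_ms=500.0, dt=1.0, tau=20.0):
    """Return the unit's activity at the end of a run with a stimulus lasting input_ms."""
    x = 0.0
    for step in range(int(total_ms / dt)):
        stimulus = drive if step * dt < input_ms else 0.0
        # Leaky integration of the external stimulus plus the unit's own feedback.
        x += dt / tau * (-x + stimulus + recurrent_drive(x))
    return x


if __name__ == "__main__":
    for label, ms, amp in [("brief", 10, 0.6), ("weak", 300, 0.2), ("sustained", 150, 0.6)]:
        print(label, round(simulate_ignition(ms, drive=amp), 3))
```

Only the sustained, sufficiently strong stimulus leaves the unit in its high, self-maintaining state after the input is removed; the other two return to near zero.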

In brief, while rapid but transient neural activity in the thalamo-cortical system can mediate complex behavior without conscious sensation, it is surmised that consciousness requires sustained but well-organized neural activity dependent on long-range cortico-cortical feedback.

History

The neurobiologist Christfried Jakob (1866–1956) argued that the only conditions which must have neural correlates are direct sensations and reactions; these are called "intonations".

Neurophysiological studies in animals provided some insights into the neural correlates of conscious behavior. Vernon Mountcastle, in the early 1960s, set out to study this set of problems, which he termed "the Mind/Brain problem", by studying the neural basis of perception in the somatic sensory system. His labs at Johns Hopkins were among the first, along with Edward V. Evarts at NIH, to record neural activity from behaving monkeys. Struck by the elegance of S. S. Stevens's approach of magnitude estimation, Mountcastle's group discovered that three different modalities of somatic sensation shared one cognitive attribute: in all cases the firing rate of peripheral neurons was linearly related to the strength of the percept elicited. More recently, Ken H. Britten, William T. Newsome, and C. Daniel Salzman have shown that in area MT of monkeys, neurons respond with variability that suggests they are the basis of decision making about direction of motion. They first showed, using signal detection theory, that neuronal rates are predictive of decisions, and then that stimulation of these neurons could predictably bias the decision. Such studies were followed by those of Ranulfo Romo in the somatic sensory system, which confirmed, using a different percept and brain area, that a small number of neurons in one brain area underlie perceptual decisions.
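The signal-detection analysis mentioned above is typically based on ROC analysis: for two sets of firing rates, the area under the ROC curve equals the probability that a rate drawn from one set exceeds a rate drawn from the other, with ties counted as one half. When the two sets are trials sorted by the animal's choice for the same stimulus, this area is often called a choice probability. The sketch below uses hypothetical spike counts, and the function name `roc_area` is illustrative.

```python
def roc_area(preferred, null):
    """Area under the ROC curve separating two sets of firing rates.

    Computed as P(rate from `preferred` > rate from `null`) with ties counted
    as 1/2, which equals the area obtained by sweeping a criterion across both sets.
    """
    if not preferred or not null:
        raise ValueError("both samples must be non-empty")
    score = 0.0
    for p in preferred:
        for q in null:
            if p > q:
                score += 1.0
            elif p == q:
                score += 0.5
    return score / (len(preferred) * len(null))


if __name__ == "__main__":
    # Hypothetical spike counts on trials ending in each of the two choices.
    chose_preferred = [12, 15, 18, 20]
    chose_null = [8, 10, 12, 14]
    print(roc_area(chose_preferred, chose_null))  # 0.90625
```

A value of 0.5 means the rates carry no information about the choice, while values near 0 or 1 mean an ideal observer reading the neuron could predict the decision almost perfectly. The pairwise loop is quadratic in the number of trials, which is acceptable for the trial counts of a typical session.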

Other lab groups have followed Mountcastle's seminal work relating cognitive variables to neuronal activity with more complex cognitive tasks. Although monkeys cannot talk about their perceptions, behavioral tasks have been created in which animals make nonverbal reports, for example by producing hand movements. Many of these studies employ perceptual illusions as a way to dissociate sensations (i.e., the sensory information that the brain receives) from perceptions (i.e., how that information is consciously interpreted). Neuronal patterns that represent perceptions rather than merely sensory input are interpreted as reflecting the neuronal correlate of consciousness.

Using such designs, Nikos Logothetis and colleagues discovered perception-reflecting neurons in the temporal lobe. They created an experimental situation in which conflicting images were presented to different eyes (i.e., binocular rivalry). Under such conditions, human subjects report bistable percepts: they perceive one or the other image alternately. Logothetis and colleagues trained the monkeys to report with arm movements which image they perceived. Temporal lobe neurons in Logothetis's experiments often reflected what the monkeys perceived. Neurons with such properties were less frequently observed in the primary visual cortex, which corresponds to relatively early stages of visual processing. Another set of experiments using binocular rivalry in humans showed that certain layers of the cortex can be excluded as candidates for the neural correlate of consciousness. Logothetis and colleagues switched the images between the eyes during the percept of one of the images. Surprisingly, the percept stayed stable. This means that the conscious percept remained stable while the primary input to layer 4, the input layer of the visual cortex, changed. Therefore layer 4 cannot be part of the neural correlate of consciousness. Mikhail Lebedev and his colleagues observed a similar phenomenon in monkey prefrontal cortex. In their experiments, monkeys reported the perceived direction of visual stimulus movement (which could be an illusion) by making eye movements. Some prefrontal cortex neurons represented actual displacements of the stimulus and some represented perceived displacements. The observation of perception-related neurons in prefrontal cortex is consistent with the theory of Christof Koch and Francis Crick, who postulated that the neural correlate of consciousness resides in prefrontal cortex. Proponents of distributed neuronal processing are likely to dispute the view that consciousness has a precise localization in the brain.

Francis Crick wrote a popular book, "The Astonishing Hypothesis," whose thesis is that the neural correlate for consciousness lies in our nerve cells and their associated molecules. Crick and his collaborator Christof Koch[43] have sought to avoid philosophical debates that are associated with the study of consciousness, by emphasizing the search for "correlation" and not "causation".

There is much room for disagreement about the nature of this correlate (e.g., does it require synchronous spikes of neurons in different regions of the brain? Is the co-activation of frontal or parietal areas necessary?). The philosopher David Chalmers maintains that a neural correlate of consciousness, unlike other correlates such as for memory, will fail to offer a satisfactory explanation of the phenomenon; he calls this the hard problem of consciousness.[44][45]

See also

- Animal consciousness

- Artificial consciousness

- Bridge locus

- Cognitive map

- Conceptual space

- Global workspace theory

- Hard problem of consciousness

- Higher-order theories of consciousness

- Image schema

- Information-theoretic death

- Integrated information theory

- LIDA (cognitive architecture)

- Models of neural computation

- Multiple drafts model

- Münchhausen trilemma

- Neural coding

- Neural decoding

- Neural substrate

- Philosophy of mind

- Quantum cognition

- Quantum mind

Notes

- ↑ Koch 2004, Figure 1.1 The Neuronal Correlates of Consciousness p. 16.

- ↑ Koch 2004, p. 304.

- ↑ See here Archived 2013-03-13 at the Wayback Machine for a glossary of related terms.

- ↑ Chalmers, David J. (June 1998), "What is a neural correlate of consciousness?", in Metzinger, Thomas (ed.), Neural Correlates of Consciousness: Empirical and Conceptual Questions, MIT Press (published September 2000), ISBN 978-0-262-13370-8

- ↑ Squire 2008, p. 1223.

- ↑ Kandel 2007, p. 382.

- ↑ Schwartz, Jeffrey M.; Stapp, Henry P.; Beauregard, Mario. "Quantum physics in neuroscience and psychology: A neurophysiological model of mind/brain interaction" (PDF).

- ↑ See Chalmers 1998, available online.

- ↑ Zeman 2001

- ↑ Schiff 2004

- ↑ Laureys 2005

- ↑ Tononi et al. 2016

- ↑ Kim and Blake 2004

- ↑ Koch 2004, Figure 16.1 The Bistable Necker Cube, p. 270.

- ↑ Logothetis 1998

- ↑ Rees and Frith 2007

- ↑ Haynes and Rees 2005

- ↑ Lee et al. 2007

- ↑ Shimono and Niki 2013

- ↑ Crick and Koch 1995

- ↑ Leopold and Logothetis 1996

- ↑ Sheinberg and Logothetis 1997

- ↑ Kreiman et al. 2002

- ↑ Koch 2004, Figure 5.1 The Cholinergic Enabling System p. 92. See Chapter 5, available online.

- ↑ Owen et al. 2006

- ↑ Laureys 2005

- ↑ Blumenfeld et al. 2004

- ↑ Koch 2004, p. 92

- ↑ Villablanca 2004

- ↑ Bogen 1995

- ↑ Milner and Goodale 1995

- ↑ Koch and Crick 2001

- ↑ Beilock et al. 2002

- ↑ Flanagan, Owen; Polger, Tom W. (1995). "Zombies and the function of consciousness". Journal of Consciousness Studies. 2: 313–321.

- ↑ Rosenthal, David (2008). "Consciousness and its function". Neuropsychologia. 46 (3): 829–840. doi:10.1016/j.neuropsychologia.2007.11.012. PMID 18164042. S2CID 7791431.

- ↑ Harnad, Stevan (2002). "Turing indistinguishability and the Blind Watchmaker". In Fetzer, James H. (ed.). Consciousness Evolving. John Benjamins. Retrieved 2011-10-26.

- ↑ Feinberg, T.E.; Mallatt, J. (2013). "The evolutionary and genetic origins of consciousness in the Cambrian Period over 500 million years ago". Front Psychol. 4: 667. doi:10.3389/fpsyg.2013.00667. PMC 3790330. PMID 24109460.

- ↑ Robinson, Zack; Maley, Corey J.; Piccinini, Gualtiero (2015). "Is Consciousness a Spandrel?". Journal of the American Philosophical Association. 1 (2): 365–383. doi:10.1017/apa.2014.10. S2CID 170892645.

- ↑ Thorpe et al. 1996

- ↑ VanRullen and Koch 2003

- ↑ Baars 1988

- ↑ Dehaene et al. 2003

- ↑ Koch, Christof (2004). The quest for consciousness: a neurobiological approach. Englewood, US-CO: Roberts & Company Publishers. ISBN 978-0-9747077-0-9.

- ↑ See Cooney's foreword to the reprint of Chalmers' paper: Brian Cooney, ed. (1999). "Chapter 27: Facing up to the problem of consciousness". The place of mind. Cengage Learning. pp. 382 ff. ISBN 978-0534528256.

- ↑ Chalmers, David (1995). "Facing up to the problem of consciousness". Journal of Consciousness Studies. 2 (3): 200–219.

References

- Adamantidis A.R., Zhang F., Aravanis A.M., Deisseroth K. and de Lecea L. (2007) Neural substrates of awakening probed with optogenetic control of hypocretin neurons. Nature. advanced online publication.

- Baars B.J. (1988) A cognitive theory of consciousness. Cambridge University Press: New York, NY.

- Beilock S.L., Carr T.H., MacMahon C. and Starkes J.L. (2002) When paying attention becomes counterproductive: impact of divided versus skill-focused attention on novice and experienced performance of sensorimotor skills. J. Exp. Psychol. Appl. 8: 6–16.

- Blumenfeld H., McNally K.A., Vanderhill S.D., Paige A.L., Chung R., Davis K., Norden A.D., Stokking R., Studholme C., Novotny E.J. Jr., Zubal I.G. and Spencer S.S. (2004) Positive and negative network correlations in temporal lobe epilepsy. Cereb. Cort. 14: 892–902.

- Bogen J.E. (1995) On the neurophysiology of consciousness: I. An Overview. Consciousness and Cognition 4: 52–62.

- Chalmers, David J. (June 1998), "What is a neural correlate of consciousness?", in Metzinger, Thomas (ed.), Neural Correlates of Consciousness: Empirical and Conceptual Questions, MIT Press (published September 2000), ISBN 978-0-262-13370-8

- Crick F. and Koch C. (1990) Towards a neurobiological theory of consciousness. Seminars in Neuroscience 2: 263–275.

- Crick F.C. and Koch C. (1995) Are we aware of neural activity in primary visual cortex? Nature 375: 121–3.

- Dehaene S., Sergent C. and Changeux J.P. (2003) A neuronal network model linking subjective reports and objective physiological data during conscious perception. Proc. Natl. Acad. Sci. USA 100: 8520–5.

- Haynes J.D. and Rees G. (2005) Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat. Neurosci. 8: 686–91.

- Kandel Eric R. (2007). In search of memory: The emergence of a new science of mind. W. W. Norton & Company. ISBN 978-0393329377.

- Kim C-Y and Blake R. (2004) Psychophysical magic: Rendering the visible 'invisible'. Trends Cogn. Sci. 9: 381–8.

- Koch, Christof (2004). The quest for consciousness: a neurobiological approach. Englewood, US-CO: Roberts & Company Publishers. ISBN 978-0-9747077-0-9.

- Koch C. and Crick F.C. (2001) On the zombie within. Nature 411: 893.

- Kreiman G., Fried I. and Koch C. (2002) Single-neuron correlates of subjective vision in the human medial temporal lobe. Proc Natl. Acad. Sci. USA 99: 8378–83.

- Laureys S. (2005) The neural correlate of (un)awareness: Lessons from the vegetative state. Trends Cogn. Sci. 9: 556–9.

- Lee S.H., Blake R. and Heeger D.J. (2007) Hierarchy of cortical responses underlying binocular rivalry. Nat. Neurosci. 10: 1048–54.

- Leopold D.A. and Logothetis N.K. (1996) Activity changes in early visual cortex reflects monkeys' percepts during binocular rivalry. Nature 379: 549–53.

- Logothetis N. (1998) Single units and conscious vision. Philos. Trans. R. Soc. Lond. B, 353: 1801–18.

- Milner A.D. and Goodale M.A. (1995) The visual brain in action. Oxford University Press, Oxford, UK.

- Owen A.M., Coleman M.R., Boly M., Davis M.H., Laureys S. and Pickard J.D. (2006) Detecting awareness in the vegetative state. Science 313: 1402.

- Rees G. and Frith C. (2007) Methodologies for identifying the neural correlates of consciousness. In: The Blackwell Companion to Consciousness. Velmans M and Schneider S, eds., pp. 553–66. Blackwell: Oxford, UK.

- Sheinberg D.L. and Logothetis N.K. (1997) The role of temporal cortical areas in perceptual organization. Proc. Natl. Acad. Sci. USA 94: 3408–13.

- Schiff, Nicholas D. (November 2004), "The neurology of impaired consciousness: Challenges for cognitive neuroscience.", in Gazzaniga, Michael S. (ed.), The Cognitive Neurosciences (3rd ed.), MIT Press, ISBN 978-0-262-07254-0

- Shimono M. and Niki K. (2013) Global Mapping of the Whole-Brain Network Underlining Binocular Rivalry. Brain connectivity 3: 212-221.

- Thorpe S., Fize D. and Marlot C. (1996) Speed of processing in the human visual system. Nature 381: 520–2.

- Squire, Larry R. (2008). Fundamental neuroscience (3rd ed.). Academic Press. p. 1256. ISBN 978-0-12-374019-9.

- Tononi G. (2004) An information integration theory of consciousness. BMC Neuroscience. 5: 42–72.

- Tononi, Giulio and Boly, Melanie and Massimini, Marcello and Koch, Christof (2016). "Integrated information theory: from consciousness to its physical substrate". Nature Reviews Neuroscience. Nature Publishing Group. 17 (5): 450–461. doi:10.1038/nrn.2016.44. PMID 27225071. S2CID 21347087.

- VanRullen R. and Koch C. (2003) Visual selective behavior can be triggered by a feed-forward process. J. Cogn. Neurosci. 15: 209–17.

- Villablanca J.R. (2004) Counterpointing the functional role of the forebrain and of the brainstem in the control of the sleep-waking system. J. Sleep Res. 13: 179–208.

- Zeman A. (2001) Consciousness. Brain 124 (7): 1263–1289.

Further reading

- Atkinson, A., et al. "Consciousness: Mapping the theoretical landscape"

- Chalmers, D (1996). The Conscious Mind: In Search of a Fundamental Theory. Philosophy of Mind. Oxford: Oxford University Press. ISBN 9780195117899.

- Crick, Francis (1994). The astonishing hypothesis: the scientific search for the soul. Macmillan Reference USA. ISBN 978-0-684-19431-8.

- Dawkins, MS (1993). Through our eyes only? The Search for Animal Consciousness. Oxford: Oxford University Press. ISBN 9780198503200.

- Edelman, GM; Tononi, G (2000). Consciousness: How Matter becomes Imagination. New York: Basic Books. ISBN 9780465013777.

- Goodale, MA; Milner, AD (2004). Sight Unseen: An Exploration of Conscious and Unconscious Vision. Oxford: Oxford University Press. ISBN 978-0-19-856807-0.

- Koch C. and Hepp K. (2006) Quantum mechanics and higher brain functions: Lessons from quantum computation and neurobiology. Nature 440: 611–2. (Freely available from http://www.theswartzfoundation.org/papers/caltech/koch-hepp-07-final.pdf (2007))

- Logothetis, N. K.; Guggenberger, Heinz; Peled, Sharon; Pauls, Jon (1999). "Functional imaging of the monkey brain". Nature Neuroscience. 2 (6): 555–562. doi:10.1038/9210. PMID 10448221. S2CID 21627829.

- Schall, J. "On Building a Bridge Between Brain and Behavior." Annual Reviews in Psychology. Vol 55. Feb 2004. pp 23–50.

- Vaas, Ruediger (1999): "Why Neural Correlates Of Consciousness Are Fine, But Not Enough". Anthropology & Philosophy Vol. 3, pp. 121–141. https://web.archive.org/web/20120205025719/http://www.swif.uniba.it/lei/mind/texts/t0000009.htm

External links

Wikibooks has a book on the topic of: Consciousness studies