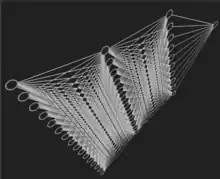

Double descent

In statistics and machine learning, double descent is the phenomenon in which test error, plotted against the number of model parameters, falls, rises, and then falls again. A model with a small number of parameters and a model with an extremely large number of parameters can both have a small error, whereas a model whose number of parameters is about the same as the number of data points used to train it will have a large error.[2] It was discovered around 2018, when researchers were trying to reconcile two views: the bias-variance tradeoff of classical statistics, which implies that a model with too many parameters will have an extremely large error, and the empirical observation of machine learning practitioners in the 2010s that larger models tend to work better.[3][4] The scaling behavior of double descent has been found to follow the functional form of a broken neural scaling law.[5]
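The peak near the point where the number of parameters equals the number of training points can be reproduced in a small simulation. The following is a minimal sketch, not taken from the cited sources: it assumes a synthetic linear regression problem with Gaussian features, a true signal spread evenly over 200 features, and a model that fits only the first p features by minimum-norm least squares. The function name `double_descent_curve` and all parameter values are illustrative assumptions.

```python
import numpy as np


def double_descent_curve(
    n_train=40,
    n_features_total=200,
    noise_std=0.5,
    param_counts=(5, 10, 20, 30, 35, 40, 45, 50, 60, 80, 120, 200),
    n_trials=20,
    n_test=1000,
    seed=0,
):
    """Return {p: mean test MSE} when fitting only the first p features."""
    rng = np.random.default_rng(seed)
    # Assumption: the true signal has unit energy spread evenly over all features.
    beta = np.ones(n_features_total) / np.sqrt(n_features_total)
    errors = {p: 0.0 for p in param_counts}
    for _ in range(n_trials):
        X_train = rng.standard_normal((n_train, n_features_total))
        X_test = rng.standard_normal((n_test, n_features_total))
        y_train = X_train @ beta + noise_std * rng.standard_normal(n_train)
        y_test = X_test @ beta + noise_std * rng.standard_normal(n_test)
        for p in param_counts:
            # The pseudoinverse gives the ordinary least-squares fit when
            # p < n_train and the minimum-norm interpolating fit when p > n_train.
            w = np.linalg.pinv(X_train[:, :p]) @ y_train
            test_mse = np.mean((X_test[:, :p] @ w - y_test) ** 2)
            errors[p] += test_mse / n_trials
    return errors


if __name__ == "__main__":
    for p, err in double_descent_curve().items():
        print(f"p = {p:3d}   mean test MSE = {err:10.3f}")
```

Under these assumptions, the mean test error should spike when p is close to `n_train` (40 here) and fall again as p grows beyond it. The exact values depend on the seed, the noise level, and how the signal is distributed across features, so the numbers illustrate the shape of the curve rather than any published result.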

References

- Schaeffer, Rylan; Khona, Mikail; Robertson, Zachary; Boopathy, Akhilan; Pistunova, Kateryna; Rocks, Jason W.; Fiete, Ila Rani; Koyejo, Oluwasanmi (2023-03-24). "Double Descent Demystified: Identifying, Interpreting & Ablating the Sources of a Deep Learning Puzzle". arXiv:2303.14151v1 [cs.LG].

- "Deep Double Descent". OpenAI. 2019-12-05. Retrieved 2022-08-12.

- evhub (2019-12-05). "Understanding 'Deep Double Descent'". LessWrong.

- Belkin, Mikhail; Hsu, Daniel; Ma, Siyuan; Mandal, Soumik (2019-08-06). "Reconciling modern machine learning practice and the bias-variance trade-off". Proceedings of the National Academy of Sciences. 116 (32): 15849–15854. arXiv:1812.11118. doi:10.1073/pnas.1903070116. ISSN 0027-8424. PMC 6689936. PMID 31341078.

- Caballero, Ethan; Gupta, Kshitij; Rish, Irina; Krueger, David (2022). "Broken Neural Scaling Laws". International Conference on Learning Representations (ICLR), 2023.

Further reading

- Mikhail Belkin; Daniel Hsu; Ji Xu (2020). "Two Models of Double Descent for Weak Features". SIAM Journal on Mathematics of Data Science. 2 (4): 1167–1180. doi:10.1137/20M1336072.

- Preetum Nakkiran; Gal Kaplun; Yamini Bansal; Tristan Yang; Boaz Barak; Ilya Sutskever (29 December 2021). "Deep double descent: where bigger models and more data hurt". Journal of Statistical Mechanics: Theory and Experiment. IOP Publishing Ltd and SISSA Medialab srl. 2021 (12): 124003. arXiv:1912.02292. Bibcode:2021JSMTE2021l4003N. doi:10.1088/1742-5468/ac3a74. S2CID 207808916.

- Song Mei; Andrea Montanari (April 2022). "The Generalization Error of Random Features Regression: Precise Asymptotics and the Double Descent Curve". Communications on Pure and Applied Mathematics. 75 (4): 667–766. arXiv:1908.05355. doi:10.1002/cpa.22008. S2CID 199668852.

- Xiangyu Chang; Yingcong Li; Samet Oymak; Christos Thrampoulidis (2021). "Provable Benefits of Overparameterization in Model Compression: From Double Descent to Pruning Neural Networks". Proceedings of the AAAI Conference on Artificial Intelligence. 35 (8). arXiv:2012.08749.

- Marco Loog; Tom Viering; Alexander Mey; Jesse H. Krijthe; David M. J. Tax (2020). "A brief prehistory of double descent". Proceedings of the National Academy of Sciences of the United States of America. 117 (16): 10625–10626. arXiv:2004.04328. Bibcode:2020PNAS..11710625L. doi:10.1073/pnas.2001875117. PMC 7245109. PMID 32371495.

External links

- Brent Werness; Jared Wilber. "Double Descent: Part 1: A Visual Introduction".

- Brent Werness; Jared Wilber. "Double Descent: Part 2: A Mathematical Explanation".