Rosenbrock methods

Rosenbrock methods refers to either of two distinct ideas in numerical computation, both named for Howard H. Rosenbrock.

Numerical solution of differential equations

Rosenbrock methods for stiff differential equations are a family of single-step methods for solving ordinary differential equations.[1][2] They are related to the implicit Runge–Kutta methods[3] and are also known as Kaps–Rentrop methods.[4]
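The simplest member of the family is the one-stage scheme usually called the linearly implicit Euler method. The sketch below is a minimal illustration, assuming a scalar autonomous ODE y' = f(y) whose derivative f'(y) is available in closed form. It shows the defining feature of Rosenbrock methods: each step solves a linear equation involving the Jacobian, (1 - hJ)k = f(y_n), and then sets y_{n+1} = y_n + hk, with no Newton iteration. The function names and the test problem are hypothetical and are not taken from the references. Practical codes use multi-stage, higher-order variants with step-size control.

```python
def linearly_implicit_euler(f, dfdy, y0, h, n_steps):
    """One-stage Rosenbrock scheme for the scalar autonomous ODE y' = f(y).

    Each step solves the linear equation (1 - h*J) * k = f(y_n) with
    J = f'(y_n), then sets y_{n+1} = y_n + h*k. For a system, J would be
    the Jacobian matrix and the division below a linear solve.
    """
    y = y0
    for _ in range(n_steps):
        jac = dfdy(y)                   # Jacobian evaluated once per step
        k = f(y) / (1.0 - h * jac)      # the single linear solve (scalar case)
        y = y + h * k
    return y


def explicit_euler(f, y0, h, n_steps):
    """Forward Euler, included only for comparison."""
    y = y0
    for _ in range(n_steps):
        y = y + h * f(y)
    return y


# Hypothetical stiff test problem: y' = -1000 (y - 1), y(0) = 0,
# whose exact solution 1 - exp(-1000 t) relaxes to 1 almost immediately.
def stiff_rhs(y):
    return -1000.0 * (y - 1.0)


def stiff_jac(_y):
    return -1000.0


if __name__ == "__main__":
    h, n = 0.1, 10
    print("linearly implicit Euler:", linearly_implicit_euler(stiff_rhs, stiff_jac, 0.0, h, n))
    print("explicit Euler:         ", explicit_euler(stiff_rhs, 0.0, h, n))
```

With h = 0.1 the explicit method multiplies the error y - 1 by 1 + h(-1000) = -99 at every step, so it diverges. The linearly implicit step divides the error by 1 - h(-1000) = 101. For a linear right-hand side like this one, the scheme coincides with implicit Euler. The difference appears for nonlinear f, where only one linear solve per step is needed.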

Search method

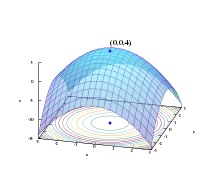

Rosenbrock search is a numerical optimization algorithm for problems in which the objective function is inexpensive to compute and its derivative either does not exist or cannot be computed efficiently.[5] The idea of Rosenbrock search is also used to initialize some root-finding routines, such as fzero (based on Brent's method) in MATLAB. Rosenbrock search is a form of derivative-free search. It rotates its set of orthogonal search directions so that one of them follows the direction of recent progress, and for this reason it may perform better than simpler derivative-free searches on functions with sharp ridges.[6] The method often identifies such a ridge, which in many applications leads to a solution.[7]
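The rotating-coordinates idea can be sketched as follows. This is a minimal version, assuming the commonly quoted description of the method. The search keeps n orthonormal directions. After a successful trial step along a direction, that step is multiplied by alpha = 3. After a failed trial, the step is reversed and halved (beta = 0.5). Once every direction has seen at least one success and one failure, the directions are rebuilt by Gram-Schmidt orthonormalization so that the first one points along the stage's total progress. The name `rosenbrock_search`, the initial step, the tolerance, and the evaluation budget are illustrative assumptions, not values from the references. The sketch also simply keeps the old directions if the rotation degenerates.

```python
import math


def _rotate(dirs, lam):
    """Build A_i = sum_{j>=i} lam_j * d_j and orthonormalize (Gram-Schmidt),
    so the first new direction points along the stage's total progress.
    Returns the old directions if some A_i is (nearly) linearly dependent
    on the earlier ones, e.g. when some lam_j == 0."""
    n = len(dirs)
    a = [[sum(lam[j] * dirs[j][k] for j in range(i, n)) for k in range(n)]
         for i in range(n)]
    new = []
    for v in a:
        for u in new:
            dot = sum(vk * uk for vk, uk in zip(v, u))
            v = [vk - dot * uk for vk, uk in zip(v, u)]
        norm = math.sqrt(sum(vk * vk for vk in v))
        if norm < 1e-12:
            return dirs
        new.append([vk / norm for vk in v])
    return new


def rosenbrock_search(f, x0, step=0.1, alpha=3.0, beta=0.5,
                      tol=1e-8, max_evals=20000):
    """Minimize f without derivatives; returns (x, f(x))."""
    n = len(x0)
    x = [float(c) for c in x0]
    fx = f(x)
    evals = 1
    dirs = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    steps = [step] * n

    def done():
        return evals >= max_evals or max(abs(s) for s in steps) <= tol

    while not done():
        lam = [0.0] * n          # net signed distance moved along each direction
        succeeded = [False] * n
        failed = [False] * n
        # One stage: cycle through the directions until each has had at least
        # one success and one failure (or the search has converged).
        while not all(succeeded[i] and failed[i] for i in range(n)) and not done():
            for i in range(n):
                trial = [xk + steps[i] * dk for xk, dk in zip(x, dirs[i])]
                ft = f(trial)
                evals += 1
                if ft < fx:      # success: accept the move and expand the step
                    x, fx = trial, ft
                    lam[i] += steps[i]
                    steps[i] *= alpha
                    succeeded[i] = True
                else:            # failure: reverse direction and shrink the step
                    steps[i] *= -beta
                    failed[i] = True
        # Rotate so the first direction follows this stage's overall progress.
        dirs = _rotate(dirs, lam)
    return x, fx


if __name__ == "__main__":
    # Rosenbrock's banana-shaped test function: a narrow, curved valley.
    def banana(p):
        return (1.0 - p[0]) ** 2 + 100.0 * (p[1] - p[0] ** 2) ** 2

    print(rosenbrock_search(banana, [-1.2, 1.0]))
```

Stepping only along the current directions keeps each iteration derivative-free. The rotation step lets the search turn to follow a valley or ridge instead of zigzagging across it along fixed coordinate axes.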

References

- Rosenbrock, H. H. (1963). "Some general implicit processes for the numerical solution of differential equations". The Computer Journal. 5 (4): 329–330.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007). "Section 17.5.1. Rosenbrock Methods". Numerical Recipes: The Art of Scientific Computing (3rd ed.). New York: Cambridge University Press. ISBN 978-0-521-88068-8.

- "Archived copy" (PDF). Archived from the original (PDF) on 2013-10-29. Retrieved 2013-05-16.

- "Rosenbrock Methods".

- Rosenbrock, H. H. (1960). "An Automatic Method for Finding the Greatest or Least Value of a Function". The Computer Journal. 3 (3): 175–184.

- Leader, Jeffery J. (2004). Numerical Analysis and Scientific Computation. Addison Wesley. ISBN 0-201-73499-0.

- Shoup, T.; Mistree, F. (1987). Optimization methods: with applications for personal computers. Prentice Hall. p. 120.