Soft privacy technologies

Soft privacy technologies fall under the category of privacy-enhancing technologies (PETs), which are methods of protecting data. Soft privacy is a counterpart to another subcategory of PETs, called hard privacy. Soft privacy technology aims to keep information safe while allowing services to process data, with full control over how that data is used. To accomplish this, soft privacy relies on third-party programs to protect privacy, emphasizing auditing, certification, consent, access control, encryption, and differential privacy.[1] Because evolving technologies such as the internet, machine learning, and big data are being applied to many long-standing fields, billions of data points must now be processed every day in areas such as health care, autonomous cars, smart cards, and social media. Many of these fields rely on soft privacy technologies to handle data.

Applications

Health care

Some medical systems, such as Ambient Assisted Living (AAL) devices, monitor sensitive information and report it remotely to a cloud.[2] Cloud computing meets the healthcare need for processing and storage at an affordable price.[2] Together, these systems monitor a patient's biometric conditions remotely, connecting with smart technology when necessary. Besides monitoring, the devices can send a mobile notification when a measurement passes a set point, such as a major change in blood pressure. Because these devices report data constantly and use smart technology,[3] this type of medical technology raises many privacy concerns. Soft privacy is therefore relevant to the third-party cloud service, where many of these concerns center, including the risks of unauthorized access, data leakage, and disclosure of sensitive information.[4]

One proposed solution to privacy issues around cloud computing in health care is access control, which grants partial access to data based on a user's role, such as doctor or family member. Another solution, applicable to wireless technology that moves data to a cloud, is differential privacy.[5] In such a system, the data is typically encrypted, sent to a trusted service, and then made accessible to hospital institutions. A common strategy for preventing data leakage and attacks is to add ‘noise’ to the data, which changes its values slightly. The real underlying information can be accessed through security questions. A study published in Sensors concluded that differential privacy techniques involving added noise helped achieve mathematically precise privacy guarantees.[5]
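The noise-adding idea can be illustrated with the Laplace mechanism, the textbook way to answer a numeric query under ε-differential privacy. This is a minimal, generic sketch, not the scheme from the cited Sensors study (which also involves encryption); the readings, the 140 mmHg threshold, and the function names are hypothetical. A smaller ε means a larger noise scale and stronger privacy.

```python
import math
import random
from typing import Callable, Iterable

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise by inverse-CDF sampling."""
    u = rng.random() - 0.5          # uniform on [-0.5, 0.5)
    while u == -0.5:                # avoid log(0) at the boundary
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1.0 - 2.0 * abs(u))

def private_count(values: Iterable[float], predicate: Callable[[float], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Noisy count of values matching predicate.

    Adding or removing one patient changes the count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical systolic blood-pressure readings from remote monitors.
readings = [118, 145, 132, 160, 121, 150, 139, 171]
rng = random.Random(42)
print(private_count(readings, lambda bp: bp >= 140, epsilon=0.5, rng=rng))
```

The true count here is 4; each released answer is 4 plus random noise, so no single patient's presence can be confidently inferred from the output.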

Adding noise to data by default can help prevent devastating privacy breaches. In the mid-1990s, the Commonwealth of Massachusetts Group Insurance Commission released anonymized health records, hiding some sensitive information such as addresses and phone numbers. Despite this attempt to hide personal information while providing a useful database, privacy was still breached: by correlating the health records with public voter databases, individuals' hidden data could be rediscovered. Without safeguards such as encryption and the noise addition used in differential privacy, datasets that seem unrelated can be linked together.[6]
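The linkage attack described above is essentially a join on quasi-identifiers, fields that are not names but together often single out a person. The quasi-identifiers usually cited for the Massachusetts case are ZIP code, birth date, and sex. Every record below is made up for illustration, and the function name is hypothetical.

```python
from typing import Dict, List, Tuple

QuasiId = Tuple[str, str, str]  # (ZIP code, birth date, sex)

# Made-up "anonymized" health records: names removed, quasi-identifiers kept.
health_records: List[Dict[str, str]] = [
    {"zip": "02138", "dob": "1950-05-20", "sex": "M", "diagnosis": "hypertension"},
    {"zip": "02139", "dob": "1962-03-14", "sex": "F", "diagnosis": "asthma"},
    {"zip": "02138", "dob": "1971-11-02", "sex": "F", "diagnosis": "diabetes"},
]

# Made-up public voter roll: names together with the same quasi-identifiers.
voter_roll: List[Dict[str, str]] = [
    {"name": "Voter A", "zip": "02138", "dob": "1950-05-20", "sex": "M"},
    {"name": "Voter B", "zip": "02139", "dob": "1980-01-01", "sex": "F"},
    {"name": "Voter C", "zip": "02138", "dob": "1971-11-02", "sex": "F"},
]

def link_records(health: List[Dict[str, str]],
                 voters: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Re-identify health records whose quasi-identifiers match exactly one voter."""
    index: Dict[QuasiId, List[str]] = {}
    for v in voters:
        index.setdefault((v["zip"], v["dob"], v["sex"]), []).append(v["name"])
    matches = []
    for h in health:
        names = index.get((h["zip"], h["dob"], h["sex"]), [])
        if len(names) == 1:  # a unique match ties a name to a diagnosis
            matches.append((names[0], h["diagnosis"]))
    return matches

print(link_records(health_records, voter_roll))
# [('Voter A', 'hypertension'), ('Voter C', 'diabetes')]
```

Removing names alone does not help here, because the remaining columns still act as a fingerprint.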

Autonomous cars

Autonomous cars raise concerns about location tracking because they are sensor-based vehicles. To achieve full autonomy, these cars would need access to a massive database with information on their surroundings, paths to take, interactions with others, weather, and many other circumstances. This raises privacy and security questions: how the data will be stored, who it is shared with, and what type of data is being stored. Much of this data is potentially sensitive and could be used maliciously if it were leaked or hacked. There are also concerns about this data being sold to companies, since it can help predict products the consumer would like or need. This could be undesirable because such data may expose health conditions and prompt companies to target those customers with location-based spam or products.[7]

Legally, rules and regulations govern some parts of these cars, but in other areas laws are unwritten or outdated.[8] Many current laws are vague and open to interpretation. For example, older federal laws governing computer privacy were extended to cover phones when they arose, and are now being extended again to apply to the “computer” inside most driverless cars.[8] Another legal concern surrounds government surveillance: can governments access driverless car data, giving them the opportunity for mass surveillance or warrantless tracking? Finally, companies may try to use this data to improve their technology and tailor their marketing to their consumers' needs. In response to this controversy, automakers pre-empted government action on driverless cars by forming the Automotive Information Sharing and Analysis Center (Auto-ISAC) in August 2015[9] to establish cybersecurity protocols and decide how vehicles can communicate safely with other autonomous cars.

Vehicle-to-grid (V2G) technology plays a large role in the energy consumption, cost, and effectiveness of smart grids.[10] Electric vehicles use V2G to charge and discharge, communicating with the power grid to exchange the appropriate amount of energy. Although this ability is extremely important for electric vehicles, it raises privacy issues around the visibility of the car's location. For example, the driver's home address, workplace, places of entertainment, and how often they visit each may be reflected in the car's charging history. If this information leaks, a wide variety of privacy breaches can follow. Someone's health could be deduced from the car's number of hospital visits; a user could receive location-based spam based on their parking patterns; or a thief could benefit from knowing a target's home address and work schedule. As with sensitive health data, one possible solution is to use differential privacy to add noise to the data, so that leaked information is less accurate.
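One concrete way to add noise to a location is the planar Laplace mechanism from the geo-indistinguishability literature. This is a swapped-in, generic technique, not necessarily the method of the cited V2G study. The sketch assumes positions in a local planar frame measured in meters; the coordinates, ε value, and function name are hypothetical.

```python
import math
import random
from typing import Tuple

def planar_laplace(x: float, y: float, epsilon: float,
                   rng: random.Random) -> Tuple[float, float]:
    """Perturb a point (in meters) with planar Laplace noise.

    The noise density is proportional to exp(-epsilon * r), where r is the
    distance from the true point. In polar coordinates the angle is uniform
    and the radius follows a Gamma(shape=2, scale=1/epsilon) distribution.
    """
    theta = rng.uniform(0.0, 2.0 * math.pi)
    r = rng.gammavariate(2.0, 1.0 / epsilon)  # beta is the scale parameter
    return x + r * math.cos(theta), y + r * math.sin(theta)

rng = random.Random(7)
# Hypothetical charging-station position. With epsilon = 0.01 per meter,
# the reported point lies on average 2 / 0.01 = 200 m from the true one.
reported = planar_laplace(1250.0, -430.0, epsilon=0.01, rng=rng)
print(reported)
```

Choosing ε trades accuracy against privacy: a grid operator still learns the rough area of a charging session, but not the exact address.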

Cloud storage

Using the cloud is important for consumers and businesses, as it provides a cheap source of virtually unlimited storage space. One challenge for cloud storage has been search. A typical privacy-preserving cloud design encrypts every word before it enters the cloud. If a user then wants to search by keyword for a specific file stored in the cloud, the encryption gets in the way of fast, easy search: the stored data can no longer be scanned for the search term, because the keywords are all encrypted. Encryption thus becomes a double-edged sword: it protects privacy but introduces new, inconvenient problems.[11] One performance solution is to modify the search method so that documents are indexed in their entirety, rather than just by their keywords. The search can also be changed so that the search term itself is encrypted and then matched against the encrypted keywords without decrypting any data. In this case, privacy is preserved and it is easy to find words that match the encrypted files. However, this raises a new issue: reading and matching through an encrypted file and then decrypting the whole file for the user takes longer.[11]
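Matching an encrypted search term against encrypted keywords can be sketched with keyed hash tokens: the client derives a deterministic token for each keyword with HMAC-SHA256 and a secret key, and the server stores only tokens mapped to document IDs. This is a deliberately simplified illustration, not the scheme from the cited study. It leaks which queries repeat and which documents match, and the document contents would have to be encrypted separately with a real cipher (not shown). The class and method names are hypothetical.

```python
import hashlib
import hmac
import secrets
from collections import defaultdict
from typing import Dict, Iterable, Set

class KeywordTokenIndex:
    """Server-side index that only ever sees keyed keyword tokens."""

    def __init__(self) -> None:
        self._index: Dict[bytes, Set[str]] = defaultdict(set)

    def add(self, token: bytes, doc_id: str) -> None:
        self._index[token].add(doc_id)

    def lookup(self, token: bytes) -> Set[str]:
        return set(self._index.get(token, set()))

class Client:
    """Holds the secret key and turns keywords into search tokens."""

    def __init__(self, key: bytes) -> None:
        self._key = key

    def token(self, keyword: str) -> bytes:
        normalized = keyword.lower().encode("utf-8")
        return hmac.new(self._key, normalized, hashlib.sha256).digest()

    def upload(self, server: KeywordTokenIndex, doc_id: str,
               words: Iterable[str]) -> None:
        # Only tokens leave the client; plaintext keywords never do.
        for w in set(words):
            server.add(self.token(w), doc_id)

    def search(self, server: KeywordTokenIndex, keyword: str) -> Set[str]:
        return server.lookup(self.token(keyword))

client = Client(secrets.token_bytes(32))
server = KeywordTokenIndex()
client.upload(server, "doc-1", ["invoice", "march", "cloud"])
client.upload(server, "doc-2", ["cloud", "backup"])
print(sorted(client.search(server, "Cloud")))  # ['doc-1', 'doc-2']
```

Because the server compares opaque tokens, it can answer the query without ever decrypting a keyword; retrieving and decrypting the matching documents remains a separate, slower step.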

Mobile data

Police and other authorities can also benefit from reading personal information from mobile data, which can be useful during investigations.[12] Privacy infringement and potential surveillance in these cases have often been a concern in the United States, and several cases have reached the Supreme Court. In some instances, authorities used GPS data to track suspects' locations and monitored data over long periods of time. Warrantless access to mobile data is now more limited: in Riley v. California (2014), the Supreme Court unanimously held that police generally need a warrant to search the digital contents of a cell phone seized during an arrest.

Mobile privacy has also been an issue in the realm of spam calls. In trying to reduce these calls, many people are taken advantage of by apps that promise to block spam. Many of these apps are known to collect personal phone data, including callers, phone honeypot call detail records (CDRs), and call recordings. Some of this information is needed to build spam blacklists, but not all of it is, and these apps do not always prioritize privacy when collecting data. Because these are typically small apps with varying budgets, differential privacy is not always a priority, and implementing it is often more expensive than ignoring privacy concerns. This is because building an accurate algorithm that achieves local differential privacy requires a much larger dataset than a non-private approach.[13]
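Local differential privacy can be illustrated with randomized response, its classic building block: each phone reports whether it flagged a number as spam, but flips its answer with a probability set by ε, and the aggregator corrects for the flipping. This is a generic sketch, not the protocol of the cited blacklisting system; the function names and numbers are hypothetical. The correction divides by (2p - 1), which inflates the estimator's variance and is one way to see why local differential privacy needs more reports than a non-private tally.

```python
import math
import random
from typing import List

def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under epsilon-LDP randomized response."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))

def randomize(bit: int, epsilon: float, rng: random.Random) -> int:
    """Report the true bit with probability p, otherwise its complement."""
    return bit if rng.random() < keep_probability(epsilon) else 1 - bit

def estimate_fraction(reports: List[int], epsilon: float) -> float:
    """Unbiased estimate of the true fraction of 1s from randomized reports.

    E[observed] = (1 - p) + true * (2p - 1), so solve for true.
    """
    p = keep_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)

rng = random.Random(3)
# Hypothetical: 30% of 100,000 users flag a given number as spam.
truth = [1] * 30_000 + [0] * 70_000
reports = [randomize(b, epsilon=1.0, rng=rng) for b in truth]
print(round(estimate_fraction(reports, epsilon=1.0), 3))
```

No single report reveals a user's true answer with certainty, because the ratio of report probabilities between a 1 and a 0 is bounded by e^ε, yet the population-level rate is still recoverable.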

Danger of using VPNs

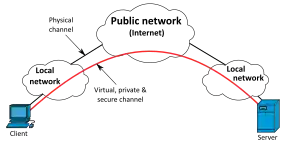

VPNs are used to connect a remote user to a home network and encrypt the packets sent between them. This gives the user their own private network while on the internet. However, the user must trust a third party to protect their privacy, since the encrypted tunnel acts as a virtual leased line over the shared public infrastructure of the internet. VPNs also have difficulty with mobile applications, because the cellular connection may change constantly or even break, endangering the privacy that the VPN's encryption provides.[14]

VPNs are susceptible to attackers that fabricate, intercept, modify, and interrupt traffic. They become targets because sensitive information is often transmitted over them. Quick VPNs generally provide faster tunnel establishment and less overhead, but they reduce the effectiveness of VPNs as a security protocol. One mitigation is the simple practice of using long usernames and passwords and changing them, along with IP addresses, frequently, which is important for security and protection from malicious attacks.[15]

Smart cards

Newer smart cards are a developing technology used to authorize users for certain resources.[16] Using biometrics such as fingerprints, iris images, voice patterns, or even DNA sequences as access control for sensitive information such as a passport can help ensure privacy. Biometrics are important because they are essentially unchangeable and can serve as the access code to one's information, in some cases granting access to virtually any data about that person. They are currently used in telecommunications, e-commerce, banking, government applications, healthcare, transportation, and more.

Danger of using biometrics

Biometrics contain unique characteristic details about a person. If they are leaked, the affected user could be traced fairly easily. This poses a great danger because biometrics are based on features that rarely change, such as a fingerprint, and many sensitive applications use them. One possible solution is the following:

Anonymous Biometric Access Control System (ABAC): This method authenticates valid users without learning who they are. For example, a hotel should be able to admit a VIP guest into a VIP room without knowing any details about that person, even though the verification process still uses biometrics. One way to do this was developed by the Center for Visualization and Virtual Environment. Its researchers designed an algorithm that uses techniques such as Hamming distance computation, bit extraction, comparison, and result aggregation, all implemented with a homomorphic cipher, to allow a biometric server to confirm a user without knowing their identity. The stored biometric is encrypted, and when biometrics are saved, specific processes de-identify features, such as facial characteristics, during encryption, so that even if the data were leaked there would be no danger of tracing someone's identity.[17]
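The core step, computing a Hamming distance on encrypted biometric bits, can be sketched with the Paillier cryptosystem, an additively homomorphic cipher. This is a simplified illustration under stated assumptions, not the cited group's protocol: it uses toy-sized primes that offer no security, the bit lists stand in for binary biometric templates, and a real anonymous system would also keep the distance and the user's identity hidden from the server. All names and the threshold are hypothetical.

```python
import math
import secrets
from typing import List

# Toy Paillier parameters: these primes are far too small for any real use.
P, Q = 104729, 1299709
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # valid because the generator below is g = N + 1

def encrypt(m: int) -> int:
    """Paillier encryption with g = N + 1 and fresh randomness r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2

def decrypt(c: int) -> int:
    u = pow(c, LAM, N2)
    return ((u - 1) // N) * MU % N

def encrypted_hamming(probe: List[int], enrolled_ct: List[int]) -> int:
    """Compute Enc(HammingDistance(probe, enrolled)) without decrypting any bit.

    x XOR y equals y when x = 0 and 1 - y when x = 1. Multiplying Paillier
    ciphertexts adds their plaintexts, and inverting a ciphertext negates it.
    """
    if len(probe) != len(enrolled_ct):
        raise ValueError("templates must have the same length")
    acc = encrypt(0)
    for x, c in zip(probe, enrolled_ct):
        term = c if x == 0 else (encrypt(1) * pow(c, -1, N2)) % N2
        acc = (acc * term) % N2
    return acc

enrolled = [1, 0, 1, 1, 0, 0, 1, 0] * 4       # hypothetical 32-bit template
enrolled_ct = [encrypt(b) for b in enrolled]  # stored encrypted on the server
probe = enrolled.copy()
for i in (2, 9, 30):                          # a fresh scan differing in 3 bits
    probe[i] ^= 1
distance = decrypt(encrypted_hamming(probe, enrolled_ct))
THRESHOLD = 5                                 # hypothetical acceptance threshold
print(distance, distance <= THRESHOLD)  # 3 True
```

The threshold comparison tolerates the small bit differences that naturally occur between two scans of the same finger or iris.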

Online videos

Online video has become very popular. One of the biggest privacy challenges in this field is video prefetching. When a user selects an online video, rather than making them wait for it to load slowly, prefetching saves time by loading part of the video before the user has even started watching. Prefetching seems an ideal and even necessary solution as more content is streamed, but it raises privacy concerns because it relies heavily on prediction. An accurate prediction requires access to a user's viewing history and preferences; otherwise, prefetching wastes bandwidth more than it helps. Once the user's viewing history and opinions on popular content are known, it is easier to predict which video to prefetch next, but this data is valuable and possibly sensitive.[18] Research has applied differential privacy to video prefetching to improve user privacy.[18]
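One way to make the prefetching choice differentially private is the exponential mechanism: instead of always prefetching the most-watched video, the system samples a video with probability that grows exponentially with its popularity. This generic sketch is not the algorithm of the cited paper. It assumes popularity is a view count to which each user adds at most one view per video, so one user changes any single score by at most 1; the video IDs and counts are hypothetical.

```python
import math
import random
from typing import Dict

def choose_prefetch(view_counts: Dict[str, int], epsilon: float,
                    rng: random.Random, sensitivity: float = 1.0) -> str:
    """Pick one video to prefetch using the exponential mechanism.

    Each candidate is chosen with probability proportional to
    exp(epsilon * score / (2 * sensitivity)), which gives epsilon-DP
    when one user can change any single score by at most `sensitivity`.
    """
    videos = list(view_counts)
    top = max(view_counts.values())
    # Subtracting the top score keeps exp() from overflowing; it cancels out.
    weights = [math.exp(epsilon * (view_counts[v] - top) / (2 * sensitivity))
               for v in videos]
    return rng.choices(videos, weights=weights, k=1)[0]

rng = random.Random(11)
counts = {"video-a": 120, "video-b": 95, "video-c": 40}  # hypothetical
print(choose_prefetch(counts, epsilon=0.1, rng=rng))
```

Popular videos are still prefetched most of the time, so bandwidth is rarely wasted, but the choice no longer deterministically reveals the underlying viewing counts.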

Third party certification

E-commerce has been growing rapidly, prompting initiatives to reduce consumers' perceived risk when shopping online. Firms have found ways to gain the trust of new consumers by displaying seals and certifications from third-party platforms. A study published in Electronic Commerce Research found that payment providers can reduce consumers' perceived risk by displaying third-party logos and seals on the checkout page, enhancing visitor conversion.[19] These logos and certificates serve as signals to consumers, so they feel safe entering their payment information and shipping address on an otherwise unknown or untrusted website.

Future of soft privacy technology

Mobile concerns and possible solutions

A mobile intrusion prevention system (mIPS) aims to be location-aware and to help protect users of future technology such as virtualized environments, where the phone acts as a small virtual machine. Threats to watch for in the future include stalking attacks, bandwidth stealing, attacks on cloud hosts, and PA2, a traffic analysis attack on a 5G device within a confined area. Current privacy protection programs are prone to leakage and do not account for changes in Bluetooth, location, and LAN connections, which affect how often leakage can occur.[20]

Public key-based access control

In sensor networks, public-key based access control (PKC) may in the future address some issues in wireless access control. Threats to sensor networks include:

- impersonation, which grants access to malicious users;
- replay attacks, where an adversary records a legitimate message and replays it later to gain access or information;
- interleaving, which selectively combines messages from previous sessions;
- reflection, where an adversary sends a message back to its originator, similar to impersonation;
- forced delay, which blocks a message so that it is delivered at a later time;
- chosen-text attacks, where the attacker tries to extract the keys used to access the sensor.

Public-key cryptography may be a solution: a study by Haodong Wang and colleagues found that the PKC-based protocol it presented outperformed the traditional symmetric-key approach in memory usage, message complexity, and security resilience.[21]
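How public-key credentials resist replay and impersonation can be sketched as a challenge-response exchange: the sensor sends a fresh random nonce, the user signs it with a private key, and the sensor checks the signature with the user's public key. This illustration uses textbook RSA with toy-sized primes and no padding, which is insecure, and it is not the protocol from the cited study; practical sensor-network designs often favor elliptic-curve cryptography. All names are hypothetical.

```python
import hashlib
import secrets
from typing import Optional

# Toy RSA key pair: primes far too small, and no padding. Illustration only.
P, Q = 104729, 1299709
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, known only to the user

def digest_int(message: bytes) -> int:
    """Hash the message and reduce it into the RSA modulus range."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes) -> int:
    """User side: sign with the private exponent D."""
    return pow(digest_int(message), D, N)

def verify(message: bytes, signature: int) -> bool:
    """Sensor side: check the signature with the public key (N, E)."""
    return pow(signature, E, N) == digest_int(message)

class Sensor:
    def __init__(self) -> None:
        self.pending: Optional[bytes] = None

    def challenge(self) -> bytes:
        # A fresh nonce per request makes a recorded reply useless later.
        self.pending = secrets.token_bytes(16)
        return self.pending

    def grant(self, signature: int) -> bool:
        nonce, self.pending = self.pending, None  # each nonce is single-use
        return nonce is not None and verify(nonce, signature)

sensor = Sensor()
response = sign(sensor.challenge())
print(sensor.grant(response))  # True: fresh, correctly signed challenge
sensor.challenge()
print(sensor.grant(response))  # False (with overwhelming probability): replayed reply
```

An eavesdropper who records `response` cannot reuse it, and without `D` cannot produce a valid signature for the new nonce.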

Social media

Privacy management is a major issue for social networks, and several solutions have been proposed. For example, users of various social networks can control and specify what information they share with certain people based on their trust levels. Privacy concerns still arise: in 2007, for example, Facebook received complaints about its advertisements. In that case, Facebook's partners collected information about users and spread it to the users' friends without consent. Some proposed solutions, still at the prototype stage, use a protocol based on cryptographic and digital signature techniques to ensure that the right privacy protections are in place.[22]
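Sharing based on trust levels can be sketched as rule-based access control over the social graph: the owner states a maximum path depth and a minimum trust level, a path's trust is the product of the trust values on its edges, and access is granted if some short enough path is trusted enough. The graph, the trust values, and the product rule for combining trust are assumptions made for this illustration; the cited protocol adds cryptographic and signature machinery not shown here.

```python
from typing import Dict

# Hypothetical directed trust graph: trust[a][b] is how much a trusts b (0..1).
Graph = Dict[str, Dict[str, float]]

def best_trust(graph: Graph, owner: str, requester: str, max_depth: int) -> float:
    """Highest product of edge trusts over simple paths of at most max_depth hops."""
    best = 0.0

    def walk(node: str, depth: int, trust: float, visited: frozenset) -> None:
        nonlocal best
        if node == requester:
            best = max(best, trust)
            return
        if depth == max_depth:
            return
        for nxt, t in graph.get(node, {}).items():
            if nxt not in visited:
                walk(nxt, depth + 1, trust * t, visited | {nxt})

    walk(owner, 0, 1.0, frozenset({owner}))
    return best

def can_access(graph: Graph, owner: str, requester: str,
               max_depth: int, min_trust: float) -> bool:
    return best_trust(graph, owner, requester, max_depth) >= min_trust

graph: Graph = {
    "alice": {"bob": 0.9, "carol": 0.4},
    "bob": {"dave": 0.8},
    "carol": {"dave": 0.9},
}
# Alice shares with people reachable in at most 2 hops with trust >= 0.6.
print(can_access(graph, "alice", "dave", max_depth=2, min_trust=0.6))   # True (0.9 * 0.8)
print(can_access(graph, "alice", "carol", max_depth=2, min_trust=0.6))  # False (0.4)
```

Multiplying trust along a path means that friends of friends are trusted less than direct contacts, which matches the intuition behind depth limits.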

Massive dataset

As data collection grows, a single source may hold so much personal information that it becomes prone to privacy violations and a target for malicious attacks.[23] One proposed solution is to build a virtual database that anonymizes both the data providers and the subjects of the data. The technique proposed for this is a new and developing approach called l-site diversity.[24]
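A check for this property can be sketched as follows, assuming that l-site diversity requires every group of records sharing the same generalized quasi-identifiers to contain records from at least l distinct sites (data providers). That reading of the cited work is an assumption, and the records and field names are hypothetical. The sketch also checks plain k-anonymity (each group has at least k records) for comparison.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Record = Dict[str, str]

def group_by_quasi_ids(records: List[Record],
                       quasi_ids: Sequence[str]) -> Dict[Tuple[str, ...], List[Record]]:
    """Group records that share identical values for all quasi-identifiers."""
    groups: Dict[Tuple[str, ...], List[Record]] = defaultdict(list)
    for r in records:
        groups[tuple(r[q] for q in quasi_ids)].append(r)
    return groups

def is_k_anonymous(records: List[Record], quasi_ids: Sequence[str], k: int) -> bool:
    """Every quasi-identifier group must contain at least k records."""
    return all(len(g) >= k for g in group_by_quasi_ids(records, quasi_ids).values())

def is_l_site_diverse(records: List[Record], quasi_ids: Sequence[str],
                      l_sites: int, site_field: str = "site") -> bool:
    """Every quasi-identifier group must draw records from at least l_sites sites."""
    for g in group_by_quasi_ids(records, quasi_ids).values():
        if len({r[site_field] for r in g}) < l_sites:
            return False
    return True

# Hypothetical records, already generalized (age ranges, truncated ZIP codes).
records = [
    {"age": "30-39", "zip": "021**", "site": "hospital-A", "diagnosis": "flu"},
    {"age": "30-39", "zip": "021**", "site": "hospital-A", "diagnosis": "asthma"},
    {"age": "40-49", "zip": "021**", "site": "hospital-A", "diagnosis": "flu"},
    {"age": "40-49", "zip": "021**", "site": "hospital-B", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(is_k_anonymous(records, qi, k=2))           # True
print(is_l_site_diverse(records, qi, l_sites=2))  # False: the 30-39 group comes only from hospital-A
```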

References

- Deng, Mina; Wuyts, Kim; Scandariato, Riccardo; Preneel, Bart; Joosen, Wouter (March 2011). "A privacy threat analysis framework: supporting the elicitation and fulfillment of privacy requirements". Requirements Engineering. 16 (1): 3–32. doi:10.1007/s00766-010-0115-7. ISSN 0947-3602. S2CID 856424.

- Salama, Usama; Yao, Lina; Paik, Hye-young (June 2018). "An Internet of Things Based Multi-Level Privacy-Preserving Access Control for Smart Living". Informatics. 5 (2): 23. doi:10.3390/informatics5020023.

- "Ambient Assisted Living (AAL): Technology for independent life". GlobalRPH. Retrieved 2020-10-29.

- Yang, Pan; Xiong, Naixue; Ren, Jingli (2020). "Data Security and Privacy Protection for Cloud Storage: A Survey". IEEE Access. 8: 131723–131740. doi:10.1109/ACCESS.2020.3009876. ISSN 2169-3536.

- Ren, Hao; Li, Hongwei; Liang, Xiaohui; He, Shibo; Dai, Yuanshun; Zhao, Lian (2016-09-10). "Privacy-Enhanced and Multifunctional Health Data Aggregation under Differential Privacy Guarantees". Sensors. 16 (9): 1463. Bibcode:2016Senso..16.1463R. doi:10.3390/s16091463. ISSN 1424-8220. PMC 5038741. PMID 27626417.

- Jain, Priyank; Gyanchandani, Manasi; Khare, Nilay (2018-04-13). "Differential privacy: its technological prescriptive using big data". Journal of Big Data. 5 (1): 15. doi:10.1186/s40537-018-0124-9. ISSN 2196-1115.

- Collingwood, Lisa (2017-01-02). "Privacy implications and liability issues of autonomous vehicles". Information & Communications Technology Law. 26 (1): 32–45. doi:10.1080/13600834.2017.1269871. ISSN 1360-0834. S2CID 64010793.

- Salama, Chasel; Lee (2017). "Grabbing the Wheel Early: Moving Forward on Cybersecurity and Privacy Protections for Driverless Cars" (PDF). Informatics. 5 (2): 23. doi:10.3390/informatics5020023.

- "Auto-ISAC – Automotive Information Sharing & Analysis Center". Retrieved 2020-10-29.

- Li, Yuancheng; Zhang, Pan; Wang, Yimeng (2018-10-01). "The Location Privacy Protection of Electric Vehicles with Differential Privacy in V2G Networks". Energies. 11 (10): 2625. doi:10.3390/en11102625. ISSN 1996-1073.

- Salam, Md Iftekhar; Yau, Wei-Chuen; Chin, Ji-Jian; Heng, Swee-Huay; Ling, Huo-Chong; Phan, Raphael C-W; Poh, Geong Sen; Tan, Syh-Yuan; Yap, Wun-She (December 2015). "Implementation of searchable symmetric encryption for privacy-preserving keyword search on cloud storage". Human-centric Computing and Information Sciences. 5 (1): 19. doi:10.1186/s13673-015-0039-9. ISSN 2192-1962.

- Marshall, Emma W.; Groscup, Jennifer L.; Brank, Eve M.; Perez, Analay; Hoetger, Lori A. (2019). "Police surveillance of cell phone location data: Supreme Court versus public opinion". Behavioral Sciences & the Law. 37 (6): 751–775. doi:10.1002/bsl.2442. ISSN 1099-0798. PMID 31997422. S2CID 210945607.

- Ucci, Daniele; Perdisci, Roberto; Lee, Jaewoo; Ahamad, Mustaque (2020). "Towards a Practical Differentially Private Collaborative Phone Blacklisting System". Annual Computer Security Applications Conference. pp. 100–115. arXiv:2006.09287. doi:10.1145/3427228.3427239. ISBN 9781450388580. S2CID 227911367.

- Alshalan, Abdullah; Pisharody, Sandeep; Huang, Dijiang (2016). "A Survey of Mobile VPN Technologies". IEEE Communications Surveys & Tutorials. 18 (2): 1177–1196. doi:10.1109/COMST.2015.2496624. ISSN 1553-877X. S2CID 12028743.

- Rahimi, Sanaz; Zargham, Mehdi (2011), Butts, Jonathan; Shenoi, Sujeet (eds.), "Security Analysis of VPN Configurations in Industrial Control Environments", Critical Infrastructure Protection V, Berlin, Heidelberg: Springer Berlin Heidelberg, vol. 367, pp. 73–88, doi:10.1007/978-3-642-24864-1_6, ISBN 978-3-642-24863-4

- Sanchez-Reillo, Raul; Alonso-Moreno, Raul; Liu-Jimenez, Judith (2013), Campisi, Patrizio (ed.), "Smart Cards to Enhance Security and Privacy in Biometrics", Security and Privacy in Biometrics, London: Springer London, pp. 239–274, doi:10.1007/978-1-4471-5230-9_10, hdl:10016/34523, ISBN 978-1-4471-5229-3, retrieved 2020-10-29

- Ye, Shuiming; Luo, Ying; Zhao, Jian; Cheung, Sen-Ching S. (2009). "Anonymous Biometric Access Control". EURASIP Journal on Information Security. 2009: 1–17. doi:10.1155/2009/865259. ISSN 1687-4161. S2CID 748819.

- Wang, Mu; Xu, Changqiao; Chen, Xingyan; Hao, Hao; Zhong, Lujie; Yu, Shui (March 2019). "Differential Privacy Oriented Distributed Online Learning for Mobile Social Video Prefetching". IEEE Transactions on Multimedia. 21 (3): 636–651. doi:10.1109/TMM.2019.2892561. ISSN 1520-9210. S2CID 67870303.

- Cardoso, Sofia; Martinez, Luis F. (2019-03-01). "Online payments strategy: how third-party internet seals of approval and payment provider reputation influence the Millennials' online transactions". Electronic Commerce Research. 19 (1): 189–209. doi:10.1007/s10660-018-9295-x. ISSN 1572-9362. S2CID 62877854.

- Ulltveit-Moe, Nils; Oleshchuk, Vladimir A.; Køien, Geir M. (2011-04-01). "Location-Aware Mobile Intrusion Detection with Enhanced Privacy in a 5G Context". Wireless Personal Communications. 57 (3): 317–338. doi:10.1007/s11277-010-0069-6. hdl:11250/137763. ISSN 1572-834X. S2CID 18709976.

- Wang, Haodong; Sheng, Bo; Tan, Chiu C.; Li, Qun (July 2011). "Public-key based access control in sensornet". Wireless Networks. 17 (5): 1217–1234. doi:10.1007/s11276-011-0343-x. ISSN 1022-0038. S2CID 11526061.

- Carminati, Barbara; Ferrari, Elena (2008), Atluri, Vijay (ed.), "Privacy-Aware Collaborative Access Control in Web-Based Social Networks", Data and Applications Security XXII, Berlin, Heidelberg: Springer Berlin Heidelberg, vol. 5094, pp. 81–96, doi:10.1007/978-3-540-70567-3_7, ISBN 978-3-540-70566-6

- Al-Zobbi, Mohammed; Shahrestani, Seyed; Ruan, Chun (December 2017). "Improving MapReduce privacy by implementing multi-dimensional sensitivity-based anonymization". Journal of Big Data. 4 (1): 45. doi:10.1186/s40537-017-0104-5. ISSN 2196-1115.

- Jurczyk, Pawel; Xiong, Li (2009), Gudes, Ehud; Vaidya, Jaideep (eds.), "Distributed Anonymization: Achieving Privacy for Both Data Subjects and Data Providers", Data and Applications Security XXIII, Berlin, Heidelberg: Springer Berlin Heidelberg, vol. 5645, pp. 191–207, doi:10.1007/978-3-642-03007-9_13, ISBN 978-3-642-03006-2