Surround sound

Surround sound is a technique for enriching the fidelity and depth of sound reproduction by using multiple audio channels from speakers that surround the listener (surround channels). Its first application was in movie theaters. Prior to surround sound, theater sound systems commonly had three screen channels of sound that played from three loudspeakers (left, center, and right) located in front of the audience. Surround sound adds one or more channels from loudspeakers to the side or behind the listener that are able to create the sensation of sound coming from any horizontal direction (at ground level) around the listener.

The technique enhances the perception of sound spatialization by exploiting sound localization: a listener's ability to identify the location or origin of a detected sound in direction and distance. This is achieved by using multiple discrete audio channels routed to an array of loudspeakers.[1] Surround sound typically has a listener location (sweet spot) where the audio effects work best and presents a fixed or forward perspective of the sound field to the listener at this location.

Surround sound formats vary in reproduction and recording methods, along with the number and positioning of additional channels. The most common surround sound specification, the ITU's 5.1 standard, calls for 6 speakers: Center (C), in front of the listener; Left (L) and Right (R), at angles of ±30° (60° apart); Left Surround (LS) and Right Surround (RS), at angles of ±100–120°; and a subwoofer, whose position is not critical.[2]

Fields of application

Though cinema and soundtracks represent the major uses of surround techniques, their scope of application is broader than that, as surround sound permits the creation of an audio environment for all sorts of purposes. Multichannel audio techniques may be used to reproduce content as varied as music, speech, and natural or synthetic sounds for cinema, television, broadcasting, or computers. In terms of music content, for example, a live performance may use multichannel techniques in the context of an open-air concert, a musical theatre performance, or a broadcast;[3] for a film, specific techniques are adapted to the movie theater or to the home (e.g. home cinema systems).[4] Narrative content can also be enhanced through multichannel techniques. This applies mainly to cinema narratives, for example the speech of the characters of a film,[5][6][7] but may also be applied to plays performed in a theatre, to a conference, or to integrate voice-based comments in an archeological site or monument. For example, an exhibition may be enhanced with topical ambient sound of water, birds, trains or machine noise. Topical natural sounds may also be used in educational applications.[8] Other fields of application include video game consoles, personal computers and other platforms.[9][10][11][12] In such applications, the content would typically be synthetic sound produced by the computer device in interaction with its user. Significant work has also been done using surround sound for enhanced situational awareness in military and public safety applications.[13]

Types of media and technologies

Commercial surround sound media include videocassettes, DVDs, and SDTV broadcasts encoded as compressed Dolby Digital and DTS, and lossless audio such as DTS-HD Master Audio and Dolby TrueHD on high-definition Blu-ray Disc and HD DVD, which decode to audio identical to the studio master. Other commercial formats include the competing DVD-Audio (DVD-A) and Super Audio CD (SACD) formats, and MP3 Surround. Cinema 5.1 surround formats include Dolby Digital and DTS. Sony Dynamic Digital Sound (SDDS) is an 8-channel cinema configuration featuring five independent audio channels across the front, two independent surround channels, and a low-frequency effects channel. The traditional 7.1 surround speaker configuration adds two rear speakers to the conventional 5.1 arrangement, for a total of four surround channels and three front channels, creating a more complete 360° sound field.

Most surround sound recordings are created by film production companies or video game producers; however, some consumer camcorders have such capability, either built in or available separately. Surround sound technologies can also be used in music to enable new methods of artistic expression. After the failure of quadraphonic audio in the 1970s, multichannel music has slowly been reintroduced since 1999 with the help of the SACD and DVD-Audio formats. Some AV receivers, stereophonic systems, and computer sound cards contain integral digital signal processors or digital audio processors to simulate surround sound from a stereophonic source (see fake stereo).

In 1967, the rock group Pink Floyd performed the first-ever surround sound concert at "Games for May", a lavish affair at London’s Queen Elizabeth Hall where the band debuted its custom-made quadraphonic speaker system.[14] The control device they had made, the Azimuth Co-ordinator, is now displayed at London's Victoria and Albert Museum, as part of their Theatre Collections gallery.[15]

History

The first documented use of surround sound was in 1940, for the Disney studio's animated film Fantasia. Walt Disney was inspired by Nikolai Rimsky-Korsakov's operatic piece Flight of the Bumblebee to feature a bumblebee in his musical film Fantasia that would sound as if it were flying in all parts of the theatre. The initial multichannel audio application was called 'Fantasound', comprising three audio channels and speakers. The sound was diffused throughout the cinema through some 54 loudspeakers, under the control of an engineer. The surround sound was achieved using the sum and the difference of the phase of the sound. However, this experimental use of surround sound was excluded from the film in later showings. In 1952, "surround sound" successfully reappeared with the film "This is Cinerama", using discrete seven-channel sound, and the race to develop other surround sound methods took off.[16][17]

In the 1950s, the German composer Karlheinz Stockhausen experimented with and produced ground-breaking electronic compositions such as Gesang der Jünglinge and Kontakte, the latter using fully discrete and rotating quadraphonic sounds generated with industrial electronic equipment in Herbert Eimert's studio at the Westdeutscher Rundfunk (WDR). Edgard Varèse's Poème électronique, created for the Iannis Xenakis-designed Philips Pavilion at the 1958 Brussels World's Fair, also used spatial audio, with 425 loudspeakers moving sound throughout the pavilion.

In 1957, working with artist Jordan Belson, Henry Jacobs produced Vortex: Experiments in Sound and Light, a series of concerts featuring new music, including some of Jacobs' own and that of Karlheinz Stockhausen and many others, held in the Morrison Planetarium in Golden Gate Park, San Francisco. Sound designers commonly regard this as the origin of the (now standard) concept of "surround sound." The program was popular, and Jacobs and Belson were invited to reproduce it at the 1958 World Expo in Brussels.[18] Many other composers also created ground-breaking surround sound works in the same period.

In 1978, a concept devised by Max Bell for Dolby Laboratories called "split surround" was tested with the movie Superman. This led to the 70mm stereo surround release of Apocalypse Now, which became one of the first formal releases in cinemas with three channels in the front and two in the rear.[19] There were typically five speakers behind the screens of 70mm-capable cinemas, but only the Left, Center and Right were used full-frequency, while Center-Left and Center-Right were used only for bass frequencies (as is currently common). The Apocalypse Now encoder/decoder was designed by Michael Karagosian, also for Dolby Laboratories. The surround mix was produced by an Oscar-winning crew led by Walter Murch for American Zoetrope. The format was also deployed in 1982 with the stereo surround release of Blade Runner.

The 5.1 version of surround sound originated in 1987 at the Moulin Rouge cabaret in Paris. A French engineer, Dominique Bertrand, used a mixing board specially designed in cooperation with Solid State Logic, based on its 5000 series and including six channels: A (left), B (right), C (centre), D (left rear), E (right rear) and F (bass). The same engineer had already built a 3.1 system in 1974 for the International Summit of Francophone States in Dakar, Senegal.

Creating surround sound

Surround sound is created in several ways. The first and simplest method is to use a surround sound recording technique (capturing two distinct stereo images, one for the front and one for the back, or using a dedicated setup such as an augmented Decca tree[20]) or to mix surround sound for playback on an audio system whose speakers encircle the listener and play audio from different directions. A second approach processes the audio with psychoacoustic sound localization methods to simulate a two-dimensional (2-D) sound field over headphones. A third approach, based on Huygens' principle, attempts to reconstruct the recorded sound field wave fronts within the listening space, a kind of "audio hologram". One such form, wave field synthesis (WFS), produces a sound field with an even error field over the entire area. Commercial WFS systems, currently marketed by the companies sonic emotion and Iosono, require many loudspeakers and significant computing power. A fourth approach uses three microphones, one for the front, one for the side and one for the rear; this is also called double MS recording.

The Ambisonics form, also based on Huygens' principle, gives an exact sound reconstruction at the central point; however, it is less accurate away from that point. There are many free and commercial software programs available for Ambisonics, which dominates most of the consumer market, especially among musicians working with electronic and computer music. Moreover, Ambisonics products are the standard in surround sound hardware sold by Meridian Audio. In its simplest form, Ambisonics consumes few resources; however, this is not true of recent developments such as Near Field Compensated Higher Order Ambisonics.[21] It has also been shown that, in the limit, WFS and Ambisonics converge.[22]

Finally, surround sound can also be created at the mastering stage from stereophonic sources, as with Penteo, which uses digital signal processing analysis of a stereo recording to parse out individual sounds to their component panorama positions, then positions them accordingly in a five-channel field. There are other ways to create surround sound from stereo, for instance routines based on the QS and SQ systems for encoding quadraphonic sound, in which instruments were distributed over four speakers in the studio. Creating surround sound from stereo with software routines is normally referred to as "upmixing";[23] it was particularly successful on the Sansui QSD-series decoders, which had a mode that mapped the L ↔ R stereo image onto an ∩ arc.
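The general idea of deriving extra channels from a stereo pair can be illustrated with a minimal passive sum/difference matrix. The Python sketch below is an assumption-based illustration, not Penteo's analysis and not a QS or SQ decoder; the function name `passive_upmix` and the 1/√2 scaling are choices made here. In-phase content common to both channels is sent to a center feed, and out-of-phase content to a single surround feed.

```python
import math

def passive_upmix(left, right):
    """Hypothetical fixed-matrix upmix of a stereo pair to L, R, C and one surround feed S.

    C carries the in-phase (L + R) content and S the out-of-phase (L - R)
    content, each scaled by 1/sqrt(2). L and R are passed through unchanged.
    """
    k = 1.0 / math.sqrt(2.0)
    centre = [k * (l + r) for l, r in zip(left, right)]
    surround = [k * (l - r) for l, r in zip(left, right)]
    return {"L": list(left), "R": list(right), "C": centre, "S": surround}

if __name__ == "__main__":
    # A centrally panned sample (identical in both channels) leaves S silent.
    print(passive_upmix([1.0], [1.0]))
```

Identical left and right signals yield a silent surround feed, while anti-phase signals appear only in the surround feed. Adaptive upmixers analyse the signal rather than applying a fixed matrix like this one.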

Standard configurations

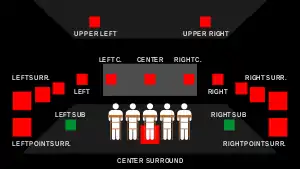

There are many alternative setups available for a surround sound experience, with a 3-2 (3 front, 2 back speakers and a Low Frequency Effects channel) configuration (more commonly referred to as 5.1 surround) being the standard for most surround sound applications, including cinema, television and consumer applications.[2][24] This is a compromise between ideal image creation in a room and practicality and compatibility with two-channel stereo.[25] Because most surround sound mixes are produced for 5.1 surround (6 channels), larger setups require matrices or processors to feed the additional speakers.[25]

The standard surround setup consists of three front speakers, LCR (left, center and right), two surround speakers, LS and RS (left and right surround respectively), and a subwoofer for the Low Frequency Effects (LFE) channel, which is low-pass filtered at 120 Hz. The angles between the speakers have been standardized by ITU-R (International Telecommunication Union) Recommendation BS.775 and by the AES (Audio Engineering Society) as follows: 60 degrees between the L and R channels (allowing two-channel stereo compatibility), with the center speaker directly in front of the listener. The surround channels are placed 100–120 degrees from the center channel, and the subwoofer's position is not critical because of the low directionality of frequencies below 120 Hz.[26] The ITU standard also allows for additional surround speakers, which should be distributed evenly between 60 and 150 degrees.[24][26]
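The angular layout can be turned into speaker coordinates with a little trigonometry. This Python sketch assumes L and R at ±30° (60° apart), surrounds at ±110° (within the 100–120° range), a 2 m listening radius, and a coordinate system with x pointing forward and y to the listener's left; the names are hypothetical.

```python
import math

# Nominal azimuths in degrees: 0 is straight ahead, positive angles are to the
# listener's left. Surrounds may sit anywhere from 100 to 120 degrees; 110 is assumed.
ITU_5_0_AZIMUTHS = {"L": 30.0, "C": 0.0, "R": -30.0, "LS": 110.0, "RS": -110.0}

def speaker_positions(azimuths, radius_m=2.0):
    """Return (x, y) in metres for speakers on a circle around a listener at the origin.

    x points forward and y points to the listener's left. The subwoofer is left
    out because its position is not critical.
    """
    return {
        name: (radius_m * math.cos(math.radians(az)), radius_m * math.sin(math.radians(az)))
        for name, az in azimuths.items()
    }

def angle_between(a_deg, b_deg):
    """Smallest angle between two azimuths, in degrees."""
    diff = abs(a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)

if __name__ == "__main__":
    for name, (x, y) in speaker_positions(ITU_5_0_AZIMUTHS).items():
        print(f"{name:>2}: x = {x:+.2f} m, y = {y:+.2f} m")
```

The L to R spread comes out at 60°, which is what keeps the front pair compatible with two-channel stereo.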

As described in ITU-R BS.775-1,[2] surround mixes with more or fewer channels are acceptable provided they are compatible with 5.1 surround. The 3-1 channel setup (with a single monophonic surround channel) is such a case: both LS and RS are fed the monophonic signal, attenuated by 3 dB.[25]
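The -3 dB figure corresponds to a linear gain of about 0.708. The Python sketch below (hypothetical helper names) shows the conversion and the resulting split, under the simplifying assumption that the two loudspeaker outputs add in power rather than in amplitude.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def mono_surround_to_pair(surround, attenuation_db=-3.0):
    """Feed one monophonic surround signal to both LS and RS at a reduced level.

    Returns (ls, rs). At -3 dB each copy carries about half the power, so,
    assuming the two outputs add in power, the pair reproduces roughly the
    same total power as the single original channel.
    """
    g = db_to_gain(attenuation_db)
    ls = [g * x for x in surround]
    rs = [g * x for x in surround]
    return ls, rs

if __name__ == "__main__":
    g = db_to_gain(-3.0)
    print(f"gain = {g:.4f}, summed power of the pair = {2 * g * g:.4f}")
```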

The function of the center channel is to anchor the signal so that centrally panned images do not shift when a listener moves or sits away from the sweet spot.[27] The center channel also prevents the timbral coloration typical of 2-channel stereo, which arises from phase differences at the listener's two ears.[24] The center channel is used especially in film and television, with dialogue primarily feeding the center channel.[25] The center channel can serve a monophonic function (as with dialogue), or it can be used together with the left and right channels for true three-channel stereo. Motion pictures tend to use the center channel for monophonic purposes, reserving stereo for the left and right channels. Surround microphone techniques have, however, been developed that fully use the potential of three-channel stereo.

In 5.1 surround, phantom images between the front speakers are quite accurate, whereas images toward the back and especially to the sides are unstable.[24][25] The localisation of a virtual source based on level differences between two loudspeakers to the side of a listener is highly inconsistent across the standardised 5.1 setup and is also strongly affected by movement away from the reference position. 5.1 surround is therefore limited in its ability to convey 3D sound, making the surround channels more appropriate for ambience or effects.[24]
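Phantom images between two loudspeakers are normally created by varying only the inter-channel level. A common way to do this is a sine/cosine (constant-power) pan law; the Python sketch below assumes that law, which the article itself does not specify, and the function names are hypothetical.

```python
import math

def constant_power_pan(position):
    """Sine/cosine pan law between two adjacent loudspeakers.

    position runs from 0.0 (all in the first speaker) to 1.0 (all in the second).
    Only the level difference changes; g1**2 + g2**2 stays equal to 1.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    theta = position * math.pi / 2.0
    return math.cos(theta), math.sin(theta)

def level_difference_db(g1, g2):
    """Level of the first speaker relative to the second, in dB (both gains must be > 0)."""
    return 20.0 * math.log10(g1 / g2)

if __name__ == "__main__":
    g1, g2 = constant_power_pan(0.25)
    print(f"gains {g1:.3f} / {g2:.3f}, difference {level_difference_db(g1, g2):.2f} dB")
```

At `position=0.25` the first speaker is about 7.7 dB louder than the second. Between L and C such a difference yields a fairly predictable image; between L and LS, as noted above, the same difference is localised inconsistently.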

7.1 channel surround is another setup, most commonly used in large cinemas, that is compatible with 5.1 surround, though it is not specified in the ITU standards. This 7.1 variant adds two channels, center-left (CL) and center-right (CR), to the 5.1 surround setup, with the speakers situated 15 degrees off center from the listener.[24] This convention covers the increased angle between the front loudspeakers that results from a larger screen.

Surround microphone techniques

Most 2-channel stereophonic microphone techniques are compatible with a 3-channel setup (LCR), as many of these techniques already contain a center microphone or microphone pair. Microphone techniques for LCR should, however, aim for greater channel separation to prevent conflicting phantom images (between L/C and L/R, for example).[25][27][28] Specialised techniques have therefore been developed for 3-channel stereo. Surround microphone techniques depend largely on the loudspeaker setup used and are therefore biased toward the 5.1 surround setup, as this is the standard.[24]

Surround recording techniques can be divided into those that use a single array of microphones placed in close proximity and those that treat the front and rear channels with separate arrays.[24][26] Close arrays present more accurate phantom images, whereas separate treatment of the rear channels is usually used for ambience.[26] For accurate depiction of an acoustic environment such as a hall, side reflections are essential, so appropriate microphone techniques should be used if room impression is important. Although the reproduction of side images is very unstable in the 5.1 surround setup, room impressions can still be accurately presented.[25]

Some microphone techniques used for coverage of the three front channels include double-stereo techniques, INA-3 (Ideal Cardioid Arrangement), the Decca Tree setup and the OCT (Optimum Cardioid Triangle).[25][28] Surround techniques are largely based on 3-channel techniques, with additional microphones used for the surround channels. A distinguishing factor for the pickup of the front channels in surround is that less reverberation should be picked up, as the surround microphones are responsible for the pickup of reverberation.[24] Cardioid, hypercardioid, or supercardioid polar patterns therefore often replace omnidirectional patterns for surround recordings. To compensate for the weaker low end of directional (pressure-gradient) microphones, additional omnidirectional (pressure) microphones, which have an extended low-frequency response, can be added; their output is usually low-pass filtered.[25][28] A simple surround microphone configuration uses a front array in combination with two backward-facing omnidirectional room microphones placed about 10–15 meters away from the front array. If echoes are noticeable, the front array can be delayed appropriately. Alternatively, backward-facing cardioid microphones can be placed closer to the front array for a similar reverberation pickup.[26]
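The delay needed to line up the front array with distant room microphones follows from the extra path length divided by the speed of sound. This Python sketch assumes 343 m/s (air at roughly 20 °C), a 48 kHz sample rate, and room microphones roughly in line with the source; the amount actually applied remains a judgment call, and the helper names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees Celsius

def alignment_delay(distance_m, sample_rate_hz=48_000):
    """Arrival-time difference for microphones distance_m further from the source.

    Returns (milliseconds, whole samples at sample_rate_hz).
    """
    seconds = distance_m / SPEED_OF_SOUND_M_S
    return seconds * 1000.0, round(seconds * sample_rate_hz)

def delay_signal(samples, delay_samples):
    """Delay a list of samples by prepending silence (the output gets longer)."""
    return [0.0] * delay_samples + list(samples)

if __name__ == "__main__":
    for d in (10.0, 15.0):
        ms, n = alignment_delay(d)
        print(f"{d:.0f} m -> {ms:.1f} ms ({n} samples)")
```

For the 10–15 m spacing mentioned above this gives roughly 29 to 44 ms, about 1,399 to 2,099 samples at 48 kHz.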

The INA-5 (Ideal Cardioid Arrangement) is a surround microphone array that uses five cardioid microphones matching the angles of the standardised surround loudspeaker configuration defined by ITU Rec. 775.[26] The spacing between the three front microphones, as well as the polar patterns of the microphones, can be changed for different pickup angles and ambient response,[24] so the technique allows for great flexibility.

A well-established microphone array is the Fukada Tree, a modified variant of the Decca Tree stereo technique. The array consists of five spaced cardioid microphones: three front microphones resembling a Decca Tree and two surround microphones. Two additional omnidirectional outriggers can be added to enlarge the perceived size of the orchestra or to better integrate the front and surround channels.[24][25] The L, R, LS and RS microphones should be placed in a square formation, with L/R and LS/RS angled at 45 degrees and 135 degrees from the center microphone respectively, and spaced about 1.8 meters apart. This square formation is responsible for the room impression. The center microphone is placed a meter in front of the L and R microphones, producing a strong center image. The surround microphones are usually placed at the critical distance (where the direct and reverberant fields are equal), with the full array usually situated several meters above and behind the conductor.[24][25]

NHK (Japan's public broadcaster) developed an alternative technique, also involving five cardioid microphones. Here a baffle separates the front left and right microphones, which are 30 cm apart.[24] Outrigger omnidirectional microphones, low-pass filtered at 250 Hz, are spaced 3 meters apart in line with the L and R cardioids. These compensate for the bass roll-off of the cardioid microphones and also add expansiveness.[27] A pair of microphones spaced 3 meters apart, situated 2–3 meters behind the front array, is used for the surround channels.[24] The center microphone is again placed slightly forward, with L/R and LS/RS again angled at 45 and 135 degrees respectively.

The OCT-Surround (Optimum Cardioid Triangle-Surround) microphone array extends the stereo OCT technique, using the same front array with added surround microphones. The front array is designed for minimum crosstalk, with the front left and right microphones having supercardioid polar patterns and angled at 90 degrees relative to the center microphone.[24][25] It is important that high-quality small-diaphragm microphones are used for the L and R channels to reduce off-axis coloration.[26] Equalization can also be used to flatten the response of the supercardioid microphones to signals arriving at up to about 30 degrees from the front of the array.[24] The center microphone is placed slightly forward. The surround microphones are backward-facing cardioids placed 40 cm behind the L and R microphones. The L, R, LS and RS microphones pick up early reflections from both the sides and the back of an acoustic venue, giving a significant room impression.[25] The spacing between the L and R microphones can be varied to obtain the required stereo width.[25]

Specialized microphone arrays have been developed for recording purely the ambience of a space. These arrays are used in combination with suitable front arrays, or can be added to the surround techniques mentioned above.[26] The Hamasaki square (also proposed by NHK) is a well-established microphone array used for the pickup of hall ambience. Four figure-eight microphones are arranged in a square, ideally placed far away and high up in the hall, with 1–3 meters between the microphones.[25] The microphones' nulls (points of zero pickup) face the main sound source, with their positive polarities facing outward, very effectively minimizing the direct sound pickup as well as echoes from the back of the hall.[26] The back two microphones are mixed to the surround channels, while the front two are mixed together with the front array into L and R.
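Why pointing a figure-eight's null at the stage suppresses the direct sound can be seen from the ideal first-order polar equation, sensitivity = a + (1 - a)·cos θ. The Python sketch below uses common textbook values of `a` for the patterns mentioned in this section; those coefficients are assumptions, not figures from the article.

```python
import math

# Ideal first-order patterns: sensitivity at theta off-axis = a + (1 - a) * cos(theta).
# Textbook coefficients, assumed here for illustration.
PATTERN_COEFFICIENTS = {
    "omnidirectional": 1.0,
    "cardioid": 0.5,
    "supercardioid": 0.37,
    "hypercardioid": 0.25,
    "figure-eight": 0.0,
}

def sensitivity(pattern, off_axis_deg):
    """Signed relative sensitivity of an ideal first-order microphone.

    Negative values mean pickup in opposite polarity (the rear lobe).
    """
    a = PATTERN_COEFFICIENTS[pattern]
    return a + (1.0 - a) * math.cos(math.radians(off_axis_deg))

if __name__ == "__main__":
    # Hamasaki square: the source sits 90 degrees off the figure-eight's axis.
    print(round(sensitivity("figure-eight", 90.0), 6))
    # IRT cross: a cardioid aimed 45 degrees away from the source.
    print(round(sensitivity("cardioid", 45.0), 3))
```

A figure-eight 90° off-axis returns essentially zero, while a cardioid aimed 45° away from the source, as in the IRT cross described next, still returns about 0.85, consistent with that technique's significant direct-sound pickup.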

Another ambient technique is the IRT (Institut für Rundfunktechnik) cross. Here, four cardioid microphones, at 90 degrees relative to one another, are placed in a square formation, separated by 21–25 cm.[26][28] The front two microphones should be positioned 45 degrees off axis from the sound source, so the technique resembles back-to-back near-coincident stereo pairs. The microphones' outputs are fed to the L, R, LS and RS channels. The disadvantage of this approach is that direct sound pickup is quite significant.

Many recordings do not require pickup of side reflections. For live pop concerts, a more appropriate array for the pickup of ambience is the cardioid trapezium.[25] All four cardioid microphones face backward and are angled at 60 degrees from one another, similar to a semicircle. This is effective for the pickup of audience and ambience.

All the above-mentioned microphone arrays take up considerable space, which makes them impractical for field recordings. In this respect, the double MS (Mid-Side) technique is quite advantageous. This array uses back-to-back cardioid microphones, one facing forward and the other backward, combined with either one or two figure-eight microphones. The different channels are obtained from sums and differences of the figure-eight and cardioid signals.[25][26] When only one figure-eight microphone is used, the double MS array is extremely compact and therefore also fully compatible with monophonic playback. The technique also allows the pickup angle to be changed in postproduction.
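The sum-and-difference decoding can be sketched as follows. This Python example assumes a single figure-eight whose positive lobe faces the listener's left, feeds the center channel from the front cardioid alone, and exposes a hypothetical `width` factor for the side signal; actual double MS decoders may weight the channels differently.

```python
import math

def ideal_double_ms_pickup(azimuth_deg):
    """Ideal capsule outputs (front cardioid, rear cardioid, figure-eight) for a
    unit plane wave arriving from azimuth_deg (0 = front, 90 = left)."""
    az = math.radians(azimuth_deg)
    return 0.5 * (1.0 + math.cos(az)), 0.5 * (1.0 - math.cos(az)), math.sin(az)

def double_ms_decode(mid_front, mid_rear, side, width=1.0):
    """Hypothetical five-channel decode by sums and differences of M and S.

    width scales the side signal: 0 collapses each pair to mono, larger values widen it.
    """
    out = {"L": [], "R": [], "C": [], "LS": [], "RS": []}
    for mf, mr, s in zip(mid_front, mid_rear, side):
        out["L"].append(mf + width * s)
        out["R"].append(mf - width * s)
        out["C"].append(mf)  # assumption: center taken from the front cardioid only
        out["LS"].append(mr + width * s)
        out["RS"].append(mr - width * s)
    return out
```

Because S cancels when L and R are summed, the front pair folds down to the front cardioid signal, and changing `width` after the recording alters the effective pickup angle.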

Bass management

Surround replay systems may make use of bass management, the fundamental principle of which is that bass content in the incoming signal, irrespective of channel, should be directed only to loudspeakers capable of handling it, whether the latter are the main system loudspeakers or one or more special low-frequency speakers called subwoofers.

The signal is named differently before and after the bass management system. Before it, there is a Low Frequency Effects (LFE) channel; after it, there is a subwoofer signal. A common misunderstanding is the belief that the LFE channel is the "subwoofer channel". The bass management system may direct bass to one or more subwoofers (if present) from any channel, not just from the LFE channel. Also, if no subwoofer is present, the bass management system can direct the LFE channel to one or more of the main speakers.
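The routing described above can be sketched in Python. This is a simplified illustration under stated assumptions: a single subwoofer, a first-order complementary crossover at 80 Hz, and the conventional +10 dB in-band playback gain for the LFE channel. The function names are hypothetical, and practical bass managers use steeper filters.

```python
import math

def one_pole_lowpass(signal, cutoff_hz, sample_rate):
    """First-order low-pass filter (illustrative; real crossovers are steeper)."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in signal:
        y += a * (x - y)
        out.append(y)
    return out

def bass_manage(channels, lfe, sample_rate, crossover_hz=80.0, lfe_gain_db=10.0):
    """Hypothetical bass manager feeding one subwoofer.

    channels: dict mapping each main channel name to a list of samples.
    lfe: list of LFE samples, the same length as the main channels.
    Returns (mains, subwoofer): the mains with their low end removed, and a
    subwoofer signal made of the LFE plus the bass of every main channel.
    """
    lfe_gain = 10.0 ** (lfe_gain_db / 20.0)
    subwoofer = [lfe_gain * s for s in lfe]
    mains = {}
    for name, signal in channels.items():
        low = one_pole_lowpass(signal, crossover_hz, sample_rate)
        # Complementary split: the low-passed part goes to the subwoofer,
        # the remainder stays on the main loudspeaker.
        mains[name] = [x - l for x, l in zip(signal, low)]
        subwoofer = [s + l for s, l in zip(subwoofer, low)]
    return mains, subwoofer
```

Because the low and high parts of each main channel add back up to the original, the summed output of mains plus subwoofer equals the summed input whenever the LFE channel is silent.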

Low frequency effects channel

Because the low-frequency effects (LFE) channel requires only a fraction of the bandwidth of the other audio channels, it is referred to as the .1 channel; for example 5.1 or 7.1.

The LFE channel is a source of some confusion in surround sound. It was originally developed to carry extremely low sub-bass cinematic sound effects (e.g., the loud rumble of thunder or explosions) on their own channel. This allowed theaters to control the volume of these effects to suit the particular cinema's acoustic environment and sound reproduction system. Independent control of the sub-bass effects also reduced the problem of intermodulation distortion in analog movie sound reproduction.

In the original movie theater implementation, the LFE was a separate channel fed to one or more subwoofers. Home replay systems, however, may not have a separate subwoofer, so modern home surround decoders and systems often include a bass management system that allows bass on any channel (main or LFE) to be fed only to the loudspeakers that can handle low-frequency signals. The salient point here is that the LFE channel is not the subwoofer channel; there may be no subwoofer and, if there is, it may be handling a good deal more than effects.[29]

Some record labels, such as Telarc and Chesky, have argued that LFE channels are not needed in a modern digital multichannel entertainment system. They argue that, given loudspeakers with low-frequency response down to 30 Hz, all available channels have a full frequency range, so there is no need for an LFE channel in surround music production because all frequencies are available in all the main channels. These labels sometimes use the LFE channel to carry a height channel. The label BIS Records generally uses a 5.0 channel mix.

Channel notation

Channel notation indicates the number of discrete channels encoded in the audio signal, not necessarily the number of channels reproduced for playback. The number of playback channels can be increased by using matrix decoding. The number of playback channels may also differ from the number of speakers used to reproduce them if one or more channels drive a group of speakers. Notation represents the number of channels, not the number of speakers.

The first digit in "5.1" is the number of full range channels. The ".1" reflects the limited frequency range of the LFE channel.

For example, two stereo speakers with no LFE channel = 2.0

5 full-range channels + 1 LFE channel = 5.1

An alternative notation shows the number of full-range channels in front of the listener, separated by a slash from the number of full-range channels beside or behind the listener, with a decimal point marking the number of limited-range LFE channels.

E.g. 3 front channels + 2 side channels + an LFE channel = 3/2.1

The notation can be expanded to include Matrix Decoders. Dolby Digital EX, for example, has a sixth full-range channel incorporated into the two rear channels with a matrix. This is expressed:

3 front channels + 2 rear channels + 3 channels reproduced in the rear in total + 1 LFE channel = 3/2:3.1
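These notations are regular enough to parse mechanically. The following Python sketch (hypothetical names) handles only the `5.1`, `3/2.1` and `3/2:3.1` forms and reports both the discrete full-range channels and the full-range channels reproduced after matrix decoding.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class ChannelNotation:
    full_range: int                        # discrete full-range channels encoded
    lfe: int                               # limited-range LFE channels
    front: Optional[int] = None            # known only for the slash notation
    surround: Optional[int] = None
    rear_reproduced: Optional[int] = None  # rear channels after matrix decoding

    @property
    def playback_full_range(self) -> int:
        """Full-range channels reproduced after any matrix decoding."""
        if self.front is not None and self.rear_reproduced is not None:
            return self.front + self.rear_reproduced
        return self.full_range

_SIMPLE = re.compile(r"(\d+)\.(\d+)")
_SLASH = re.compile(r"(\d+)/(\d+)(?::(\d+))?\.(\d+)")

def parse_notation(text: str) -> ChannelNotation:
    """Parse '5.1', '3/2.1' or '3/2:3.1' style channel notation."""
    text = text.strip()
    m = _SLASH.fullmatch(text)
    if m:
        front, surround, rear, lfe = m.groups()
        return ChannelNotation(
            full_range=int(front) + int(surround),
            lfe=int(lfe),
            front=int(front),
            surround=int(surround),
            rear_reproduced=int(rear) if rear is not None else None,
        )
    m = _SIMPLE.fullmatch(text)
    if m:
        return ChannelNotation(full_range=int(m.group(1)), lfe=int(m.group(2)))
    raise ValueError(f"unrecognised channel notation: {text!r}")
```

For the Dolby Digital EX example, `parse_notation("3/2:3.1")` reports five discrete full-range channels but six reproduced ones.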

The term stereo, although popularised in reference to two-channel audio, historically also referred to surround sound, as it strictly means "solid" (three-dimensional) sound. However, this is no longer common usage, and "stereo sound" almost exclusively means two channels, left and right.

Channel identification

In accordance with ANSI/CEA-863-A[30]

ANSI/CEA-863-A identification for surround sound channels:

| Channel name | Zero-based index: MP3/WAV/FLAC[31][32][33][34] | Zero-based index: DTS/AAC[35] | Zero-based index: Vorbis/Opus[36][37] | Color-coding on commercial receiver and cabling |
|---|---|---|---|---|
| Front left | 0 | 1 | 0 | White |
| Front right | 1 | 2 | 2 | Red |
| Center | 2 | 0 | 1 | Green |
| Subwoofer | 3 | 5 | 7 | Purple |
| Side left | 4 | 3 | 3 | Blue |
| Side right | 5 | 4 | 4 | Grey |
| Rear left | 6 | 6 | 5 | Brown |
| Rear right | 7 | 7 | 6 | Khaki |

[Diagram: speaker layout showing front left, center, front right, side left, side right, rear left, rear right and subwoofer]

Height channels:

| Index | Channel name | Color-coding on commercial receiver and cabling |
|---|---|---|
| 8 | Left height 1 | Yellow |
| 9 | Right height 1 | Orange |
| 10 | Left height 2 | Pink |
| 11 | Right height 2 | Magenta |
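Because the same eight channels are interleaved in different orders by different codecs, converting between them amounts to permuting each frame. This Python sketch transcribes the indices from the channel-identification table in this section; it covers only this 8-channel layout, and the names are hypothetical.

```python
# Zero-based position of each channel within one interleaved 8-channel frame,
# transcribed from the ANSI/CEA-863-A identification table.
CHANNEL_INDEX = {
    "MP3/WAV/FLAC": {"Front left": 0, "Front right": 1, "Center": 2, "Subwoofer": 3,
                     "Side left": 4, "Side right": 5, "Rear left": 6, "Rear right": 7},
    "DTS/AAC":      {"Front left": 1, "Front right": 2, "Center": 0, "Subwoofer": 5,
                     "Side left": 3, "Side right": 4, "Rear left": 6, "Rear right": 7},
    "Vorbis/Opus":  {"Front left": 0, "Front right": 2, "Center": 1, "Subwoofer": 7,
                     "Side left": 3, "Side right": 4, "Rear left": 5, "Rear right": 6},
}

def reorder_frame(frame, source, target):
    """Move each sample of one interleaved frame from the source ordering to the target ordering."""
    src, dst = CHANNEL_INDEX[source], CHANNEL_INDEX[target]
    if len(frame) != len(src):
        raise ValueError("expected one sample per channel")
    out = [None] * len(frame)
    for name, i in src.items():
        out[dst[name]] = frame[i]
    return out
```

Labelling the samples by channel makes the permutation easy to check: a WAV-ordered frame `FL, FR, C, LFE, SL, SR, RL, RR` comes out in Vorbis order as `FL, C, FR, SL, SR, RL, RR, LFE`.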

Sonic Whole Overhead Sound

In 2002, Dolby premiered a master of We Were Soldiers which featured a Sonic Whole Overhead Sound soundtrack. This mix included a new ceiling-mounted height channel.

Ambisonics

Ambisonics is a recording and playback technique using multichannel mixing that can be used live or in the studio and that recreates the sound field as it existed in the space, in contrast to traditional surround systems, which can only create an illusion of the sound field if the listener is located in a very narrow sweet spot between speakers. Any number of speakers in any physical arrangement can be used to recreate a sound field. With 6 or more speakers arranged around a listener, a 3-dimensional ("periphonic", or full-sphere) sound field can be presented. Ambisonics was invented by Michael Gerzon.
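A minimal horizontal-only illustration of the split between encoding and decoding is sketched below in Python. It assumes the traditional Furse-Malham first-order B-format weighting (W scaled by 1/√2), ignores the height (Z) component, and uses a basic textbook decode for a regular ring of loudspeakers; the function names are hypothetical.

```python
import math

def encode_first_order(sample, azimuth_deg):
    """Encode a mono sample as horizontal first-order B-format (W, X, Y).

    Assumes the Furse-Malham weighting, where W carries the signal scaled by
    1/sqrt(2). Elevation (the Z component) is omitted.
    """
    az = math.radians(azimuth_deg)
    return sample / math.sqrt(2.0), sample * math.cos(az), sample * math.sin(az)

def decode_regular_ring(w, x, y, num_speakers):
    """Basic decode of (W, X, Y) to num_speakers equally spaced around the listener.

    Speaker k sits at azimuth 360 * k / num_speakers degrees.
    """
    if num_speakers < 3:
        raise ValueError("a horizontal ring needs at least three speakers")
    gains = []
    for k in range(num_speakers):
        phi = 2.0 * math.pi * k / num_speakers
        gains.append(
            (math.sqrt(2.0) * w + 2.0 * (x * math.cos(phi) + y * math.sin(phi))) / num_speakers
        )
    return gains
```

For a source at 90° on a four-speaker ring, the speaker at 90° receives 0.75 and the one opposite receives -0.25, an out-of-phase contribution that practical decoders reduce with other weightings. The same encoded signal can be decoded for any ring size without re-encoding.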

Binaural recording

Binaural recording is a method of recording sound that uses two microphones, arranged with the intent to create the 3-D stereo experience of being present in the room with the performers or instruments. The idea of a three dimensional or "internal" form of sound has developed into technology for stethoscopes creating "in-head" acoustics and IMAX movies creating a three dimensional acoustic experience.

Panor-Ambiophonic (PanAmbio) 4.0/4.1

PanAmbio combines a stereo dipole and crosstalk cancellation in front of the listener with a second such pair behind (a total of four speakers) for 360° 2-D surround reproduction. Four-channel recordings, especially those containing binaural cues, create speaker-binaural surround sound. 5.1-channel recordings, including movie DVDs, are compatible by mixing the C-channel content into the front speaker pair, and 6.1 recordings can be played by mixing the surround center (SC) channel into the back pair.

Standard speaker channels

Several speaker configurations are commonly used for consumer equipment. The order and identifiers are those specified for the channel mask in the standard uncompressed WAV file format (which contains a raw multichannel PCM stream) and are used according to the same specification by most PC-connectable digital sound hardware and PC operating systems capable of handling multiple channels.[38][39] While it is possible to build any speaker configuration, there is little commercial movie or music content for alternative speaker configurations. However, source channels can be remixed for the speaker channels using a matrix table specifying how much of each content channel is played through each speaker channel.
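Such a matrix table can be represented as a mapping from each speaker to the gains of the content channels it reproduces. In this Python sketch the matrix is hypothetical: it plays 5.1 content on four speakers, folding the center into the front pair at an assumed -3 dB (as in the PanAmbio compatibility case) and simply discarding the LFE.

```python
def remix(frame, matrix):
    """Apply a remix matrix to one frame of content channels.

    frame:  dict mapping content-channel ID to a sample value.
    matrix: dict mapping speaker ID to {content-channel ID: linear gain}.
    Content channels missing from the frame are treated as silent.
    """
    return {
        speaker: sum(gain * frame.get(source, 0.0) for source, gain in row.items())
        for speaker, row in matrix.items()
    }

# Hypothetical 5.1-to-four-speaker matrix; the -3 dB center fold-down level
# is an assumption, and the LFE channel is deliberately left out.
MINUS_3_DB = 10.0 ** (-3.0 / 20.0)
FIVE_ONE_TO_FOUR = {
    "FL": {"FL": 1.0, "FC": MINUS_3_DB},
    "FR": {"FR": 1.0, "FC": MINUS_3_DB},
    "BL": {"BL": 1.0},
    "BR": {"BR": 1.0},
}
```

Keeping the matrix as data means a different speaker layout only needs a different table, not different code.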

| Channel name | ID | Identifier | Index | Flag |
|---|---|---|---|---|
| Front Left | FL | SPEAKER_FRONT_LEFT | 0 | 0x00000001 |
| Front Right | FR | SPEAKER_FRONT_RIGHT | 1 | 0x00000002 |
| Front Center | FC | SPEAKER_FRONT_CENTER | 2 | 0x00000004 |
| Low Frequency | LFE | SPEAKER_LOW_FREQUENCY | 3 | 0x00000008 |
| Back Left | BL | SPEAKER_BACK_LEFT | 4 | 0x00000010 |
| Back Right | BR | SPEAKER_BACK_RIGHT | 5 | 0x00000020 |
| Front Left of Center | FLC | SPEAKER_FRONT_LEFT_OF_CENTER | 6 | 0x00000040 |
| Front Right of Center | FRC | SPEAKER_FRONT_RIGHT_OF_CENTER | 7 | 0x00000080 |
| Back Center | BC | SPEAKER_BACK_CENTER | 8 | 0x00000100 |
| Side Left | SL | SPEAKER_SIDE_LEFT | 9 | 0x00000200 |
| Side Right | SR | SPEAKER_SIDE_RIGHT | 10 | 0x00000400 |
| Top Center | TC | SPEAKER_TOP_CENTER | 11 | 0x00000800 |
| Front Left Height | TFL | SPEAKER_TOP_FRONT_LEFT | 12 | 0x00001000 |
| Front Center Height | TFC | SPEAKER_TOP_FRONT_CENTER | 13 | 0x00002000 |
| Front Right Height | TFR | SPEAKER_TOP_FRONT_RIGHT | 14 | 0x00004000 |
| Rear Left Height | TBL | SPEAKER_TOP_BACK_LEFT | 15 | 0x00008000 |
| Rear Center Height | TBC | SPEAKER_TOP_BACK_CENTER | 16 | 0x00010000 |
| Rear Right Height | TBR | SPEAKER_TOP_BACK_RIGHT | 17 | 0x00020000 |
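Each Flag value in the table is 1 shifted left by its Index, so a channel mask is the bitwise OR of the flags of the speakers present. In a multichannel WAV file the interleaved channels then appear in ascending bit order. A minimal Python sketch of both directions follows; the helper names `channel_mask` and `channel_order` are hypothetical.

```python
# Speaker IDs in bit order, matching the table: index i has flag 1 << i.
SPEAKER_IDS = [
    "FL", "FR", "FC", "LFE", "BL", "BR", "FLC", "FRC", "BC",
    "SL", "SR", "TC", "TFL", "TFC", "TFR", "TBL", "TBC", "TBR",
]


def channel_mask(speakers: list[str]) -> int:
    """Combine speaker IDs into a channel-mask bit field."""
    mask = 0
    for sid in speakers:
        mask |= 1 << SPEAKER_IDS.index(sid)  # raises ValueError if unknown
    return mask


def channel_order(mask: int) -> list[str]:
    """List the speakers present in `mask`, lowest bit first.

    This is the order in which their samples are interleaved in the file.
    """
    return [sid for i, sid in enumerate(SPEAKER_IDS) if mask & (1 << i)]
```

For instance, a 5.1 layout with back surrounds gives `channel_mask(["FL", "FR", "FC", "LFE", "BL", "BR"]) == 0x3F`, and `channel_order(0x60F)` returns `["FL", "FR", "FC", "LFE", "SL", "SR"]` for the side-surround variant.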

Most channel configurations may include a low-frequency effects (LFE) channel (the channel played through the subwoofer). This makes the configuration ".1" instead of ".0". Most modern multichannel mixes contain one LFE; some use two.
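With a WAV-style channel mask, the ".0"/".1" part of a layout name can be read off the LFE bit (0x00000008), and the first number is the count of the remaining set bits. The following Python sketch uses a hypothetical helper, `layout_name`. Because the mask has only one LFE bit, this naming cannot express two-LFE layouts such as 10.2 or 22.2, and any height speakers present are simply counted in the first number.

```python
LFE_FLAG = 0x00000008  # SPEAKER_LOW_FREQUENCY


def layout_name(mask: int) -> str:
    """Return an "X.Y" name: X full-range channels, Y LFE channels (0 or 1)."""
    lfe = 1 if mask & LFE_FLAG else 0
    # Count the set bits other than the LFE bit.
    full_range = bin(mask & ~LFE_FLAG).count("1")
    return f"{full_range}.{lfe}"
```

For example, `layout_name(0x3F)` gives `"5.1"`, `layout_name(0x3)` gives `"2.0"`, and `layout_name(0x63F)` gives `"7.1"`.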

| System | Channels | LFE | FL+FR | FC | FLC+FRC | TFL+TFR | SL+SR | BL+BR | BC | TBL+TBR |
|---|---|---|---|---|---|---|---|---|---|---|
| Mono[Note 1] | 1.0 | No | No | Yes | No | No | No | No | No | No |
| Stereo[Note 2] | 2.0 | 2.1 | Yes | No | No | No | No | No | No | No |
| Stereo | 3.0 | 3.1 | Yes | Yes | No | No | No | No | No | No |
| Surround | 3.0 | No | Yes | No | No | No | No | No | Yes | No |
| Quad | 4.0 | No | Yes | No | No | No | No | Yes | No | No |
| Side Quad | 4.0 | No | Yes | No | No | No | Yes | No | No | No |
| Surround | 4.0 | 4.1 | Yes | Yes | No | No | No | No | Yes | No |
| (Front) | 5.0 | 5.1 | Yes | Yes | No | No | No | Yes | No | No |
| Side[40] | 5.0 | 5.1 | Yes | Yes | No | No | Yes | No | No | No |
| Atmos[41] | 5.1.4 | Yes | Yes | Yes | No | Yes | No | Yes | No | Yes |
| Hexagonal (Back) | 6.0 | 6.1 | Yes | Yes | No | No | No | Yes | Yes | No |
| Front | 6.0 | 6.1 | Yes | Yes | Yes | No | No | No | Yes | No |
| (Side) | 6.0 | 6.1 | Yes | Yes | No | No | Yes | No | Yes | No |
| Wide | 7.1 | Yes | Yes | Yes | Yes | No | No | Yes | No | No |
| Side[40] | 7.0 | 7.1 | Yes | Yes | Yes | No | Yes | No | No | No |
| Surround[42] | 7.0 | 7.1 | Yes | Yes | No | No | Yes | Yes | No | No |
| Atmos[41] | 7.1.4 | Yes | Yes | Yes | No | Yes | Yes | Yes | No | Yes |
| Octagonal | 8.0 | No | Yes | Yes | No | No | Yes | Yes | Yes | No |
| Surround | 9.0 | 9.1 | Yes | Yes | No | Yes | Yes | Yes | No | No |
| Surround | 11.0 | 11.1 | Yes | Yes | Yes | Yes | Yes | Yes | No | No |
| Atmos[41] | 11.1.4 | Yes | Yes | Yes | Yes | No | Two per side | Two per side | Yes | Yes |

7.1 surround sound

7.1 surround sound is a popular format in theaters and home cinema, including Blu-ray Discs, with Dolby and DTS being the major players.[43]

7.1.2/7.1.4 immersive sound

The 7.1.2 and 7.1.4 immersive sound formats, along with 5.1.2 and 5.1.4, add either two or four overhead speakers so that sound objects and special-effect sounds can be panned over the listener. This approach was introduced for theatrical film releases in 2012 by Dolby Laboratories under the trademark Dolby Atmos.[44]

Dolby Atmos (and other Microsoft Spatial Sound engines; see AudioObjectType in SpatialAudioClient.h) additionally supports a virtual "8.1.4.4" configuration, rendered through a head-related transfer function (HRTF).[45] The configuration adds to 7.1.4 a center speaker behind the listener and four speakers below the listener.[46]
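Layout names of this family can be read field by field. The following Python sketch assumes the field order ear-level, LFE, overhead, below-listener, as described for 7.1.4 and 8.1.4.4 above; missing trailing fields count as zero. The function name `describe` is hypothetical and is not part of any Dolby or Microsoft API.

```python
FIELDS = ["ear_level", "lfe", "overhead", "below"]


def describe(layout: str) -> dict[str, int]:
    """Split a layout name such as "7.1.4" or "8.1.4.4" into speaker counts.

    Assumed field order: ear-level, LFE, overhead, below-listener.
    """
    parts = [int(p) for p in layout.split(".")]
    if len(parts) > len(FIELDS):
        raise ValueError(f"too many fields in {layout!r}")
    parts += [0] * (len(FIELDS) - len(parts))  # e.g. "5.1" has no height layer
    return dict(zip(FIELDS, parts))
```

For example, `describe("8.1.4.4")` yields 8 ear-level, 1 LFE, 4 overhead and 4 below-listener speakers, so `sum(describe("8.1.4.4").values())` is 17. The same arithmetic gives 12 for 7.1.4.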

10.2 surround sound

10.2 is a surround sound format developed by THX creator Tomlinson Holman of TMH Labs and the University of Southern California (schools of Cinema/Television and Engineering), together with Chris Kyriakakis of the USC Viterbi School of Engineering. The name 10.2 reflects the format's promotional slogan, "Twice as good as 5.1". Advocates of 10.2 argue that it is the audio equivalent of IMAX.

11.1 surround sound

11.1 sound is supported by BARCO with installations in theaters worldwide.[47]

22.2 surround sound

22.2 is the surround sound component of Ultra High Definition Television, developed by NHK Science & Technical Research Laboratories. As its name suggests, it uses 24 speakers. These are arranged in three layers: a middle layer of ten speakers, an upper layer of nine speakers, and a lower layer of three speakers and two subwoofers. The system was demonstrated at Expo 2005 in Aichi, Japan; at the NAB Shows 2006 and 2009 in Las Vegas; and at the IBC trade shows 2006 and 2008 in Amsterdam, Netherlands.

Notes

- For historical reasons, technical implementations of mono (1.0) sound often use the first (left) channel instead of the center speaker channel; the way standard mono and stereo plugs for common audio devices are designed ensures this as well. In many other cases, when multichannel content is played back on a device with a mono speaker configuration, all channels are downmixed into one channel.

- Stereo (2.0) is still the most common format for music, as most computers, television sets and portable audio players only feature two speakers, and the Red Book Audio CD standard used for retail distribution of music only allows for two channels. A 2.1 speaker set generally does not have a separate physical channel for the low-frequency effects; instead, the speaker set downmixes the low-frequency components of the two stereo channels into one channel for the subwoofer.
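The 2.1 downmix described in the note above can be sketched in Python as averaging the two channels and low-pass filtering the result. This sketch makes several assumptions. It uses a first-order (one-pole) low-pass filter, whereas actual speaker sets may use steeper crossovers. The 80 Hz cutoff and 48 kHz sample rate are illustrative defaults, not values from the source. The function name `subwoofer_feed` is hypothetical.

```python
import math


def subwoofer_feed(left, right, sample_rate=48_000, cutoff_hz=80.0):
    """Return a mono low-frequency feed derived from a stereo pair.

    Assumptions: the channels are averaged, then passed through a one-pole
    low-pass filter at `cutoff_hz`.
    """
    # Smoothing coefficient of a one-pole low-pass filter at this cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, state = [], 0.0
    for ls, rs in zip(left, right):
        mono = 0.5 * (ls + rs)          # downmix the pair to one channel
        state += alpha * (mono - state)  # keep only the slow (bass) part
        out.append(state)
    return out
```

A constant (0 Hz) input passes through almost unchanged once the filter settles, while a signal alternating every sample is strongly attenuated. Content that is out of phase between the two channels cancels in the downmix.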

References

- "Home Theater Glossary of Terms and Terminology". Audiogurus. Retrieved 28 October 2015.

- RECOMMENDATION ITU-R BS.775-1 - Multichannel stereophonic sound system with and without accompanying picture (PDF). ITU-R. 1992.

- Sawaguchi, Mick M; Fukada, Akira (1999). "Multichannel sound mixing practice for broadcasting" (PDF). IBC Conference, 1999. Archived from the original (PDF) on 2009-12-19.

- Healy, Graham; Smeaton, Alan F. (2009-05-18). "Spatially Augmented Audio Delivery: Applications of Spatial Sound Awareness in Sensor-Equipped Indoor Environments" (PDF). 2009 Tenth International Conference on Mobile Data Management: Systems, Services and Middleware. Taipei, Taiwan: Institute of Electrical and Electronics Engineers. pp. 704–708. doi:10.1109/MDM.2009.120. ISBN 978-1-4244-4153-2. S2CID 1627248.

- Manolas, Christos; Pauletto, Sandra (2009-08-01). "Enlarging the Diegetic Space: Uses of the Multi-channel Soundtrack in Cinematic Narrative". The Soundtrack. 2 (1): 39–55. doi:10.1386/st.2.1.39_1. ISSN 1751-4193.

- Anstey, Josephine; Pape, Dave; Sandin, Daniel J. (2000-05-03). "Building a VR narrative". In Merritt, John O.; Benton, Stephen A.; Woods, Andrew J.; Bolas, Mark T. (eds.). Stereoscopic Displays and Virtual Reality Systems VII. Vol. 3957. pp. 370–379. doi:10.1117/12.384463. S2CID 110825093.

- Kerins, Mark (2006). "Narration in the Cinema of Digital Sound". The Velvet Light Trap. 58 (1): 41–54. doi:10.1353/vlt.2006.0030. ISSN 1542-4251. S2CID 190599052.

- Dantzker, Marc S. (2004), Acoustics in the Cetaceans Environment: A Multimedia Educational Package (PDF)

- Gärdenfors, Dan (2003). "Designing sound-based computer games". Digital Creativity. 14 (2): 111–114. doi:10.1076/digc.14.2.111.27863. S2CID 1554199.

- Roden, Timothy; Parberry, Ian (2005-06-15). "Designing a narrative-based audio only 3D game engine". Proceedings of the 2005 ACM SIGCHI International Conference on Advances in computer entertainment technology. ACE '05. New York, NY, USA: Association for Computing Machinery. pp. 274–277. CiteSeerX 10.1.1.552.5977. doi:10.1145/1178477.1178525. ISBN 978-1-59593-110-8. S2CID 11069976.

- Schütze, Stephan (August 2003). "The creation of an audio environment as part of a computer game world: the design for Jurassic Park – Operation Genesis on the XBOX™ as a broad concept for surround installation creation". Organised Sound. 8 (2): 171–180. doi:10.1017/S1355771803000074. ISSN 1469-8153. S2CID 62690122.

- Jones, Mike (2000), Composing Space: Cinema and Computer Gaming. The Macro-Mise En Scene and Spatial Composition (PDF), archived from the original (PDF) on 2013-05-12

- Begault, Durand; et al. (2005). "Audio-Visual Communication Monitoring System for Enhanced Situational Awareness" (PDF).

- Calore, Michael (May 12, 1967). "Pink Floyd Astounds With 'Sound in the Round'". WIRED.

- "pink floyd". Retrieved 2009-08-14.

- Holman, Tomlinson (2007). Surround Sound: Up and Running. Focal Press. pp. 3–4. ISBN 978-0-240-80829-1. Retrieved 2010-04-03.

- Torick, Emil (1998-02-01). "Highlights in the History of Multichannel Sound". Journal of the Audio Engineering Society. 46 (1/2): 27–31.

- Henry Jacobs

- Dienstfrey, Eric (2016). "The Myth of the Speakers: A Critical Reexamination of Dolby History". Film History: An International Journal. 28 (1): 167–193. doi:10.2979/filmhistory.28.1.06. JSTOR 10.2979/filmhistory.28.1.06. S2CID 192940527.

- "Ron Steicher (2003): The DECCA Tree—it's not just for stereo any more" (PDF). Archived from the original (PDF) on July 19, 2011.

- "Spatial Sound Encoding Including Near Field Effect: Introducing Distance Coding Filters and a Viable, New Ambisonic Format" (PDF).

- "Further Investigations of High Order Ambisonics and Wavefield Synthesis for Holophonic Sound Imaging". Archived from the original on 14 December 2001. Retrieved 24 October 2016.

- "DTSAC3". Archived from the original on 2010-02-27.

- Rumsey, Francis; McCormick, Tim (2009). Sound And Recording (Sixth ed.). Oxford: Focal Press.

- Wöhr, Martin; Dickreiter, Michael; Dittel, Volker; et al., eds. (2008). Handbuch der Tonstudiotechnik Band 1 (Seventh ed.). Munich: K.G Saur.

- Holman, Tomlinson (2008). Surround Sound: Up and Running (Second ed.). Oxford: Focal Press.

- Bartlett, Bruce; Bartlett, Jenny (1999). On-Location Recording Techniques. Focal Press.

- Eargle, John (2005). The Microphone Book (Second ed.). Oxford: Focal Press.

- Multichannel Music Mixing (PDF), Dolby Laboratories, Inc., archived from the original (PDF) on 2007-02-26

- "Consumer Electronics Association standards: Setup and Connection" (PDF). Archived from the original (PDF) on 2009-09-30.

- "Updated: Player 6.3.1 with mp3 Surround support now available!". Archived from the original on 2011-07-10.

- "Windows Media". windows.microsoft.com. Microsoft. Retrieved 28 October 2015.

- "Multiple channel audio data and WAVE files". Microsoft. June 2017.

- Josh Coalson. "FLAC - format".

- "Hydrogenaudio, 5.1 Channel Mappings". Archived from the original on 2015-06-18.

- "Vorbis I specification". Xiph.Org Foundation. 2015-02-27.

- Terriberry, T.; Lee, R.; Giles, R. (2016). "Channel Mapping Family 1". Ogg Encapsulation for the Opus Audio Codec. p. 18. sec. 5.1.1.2. doi:10.17487/RFC7845. RFC 7845.

- "KSAUDIO_CHANNEL_CONFIG structure". Microsoft. 13 March 2023.

- "Header file for OpenSL, containing various identifier definitions".

- "THX 5.1 Surround Sound Speaker set-up". Archived from the original on 2010-05-28. This is the correct speaker placement for 5.0/6.0/7.0 channel sound reproduction for Dolby and Digital Theater Systems.

- "Dolby Atmos Speaker Setup Guides". www.dolby.com.

- "Sony Print Master Guidelines" (PDF). Archived from the original (PDF) on 2012-03-07. This plus an LFE is the correct speaker placement for 8-track Sony Dynamic Digital Sound.

- "The next big things in home-theater: Dolby Atmos and DTS:X explained". 2015-10-30. Retrieved 24 October 2016.

- "Dolby Atmos for Home". www.dolby.com.

- "Spatial Sound for app developers for Windows, Xbox, and Hololens 2 - Win32 apps". learn.microsoft.com. 27 April 2023.

- "Deeply Immersive Game Audio with Spatial Sound". games.dolby.com.

- "How Does Immersive Sound from Barco Work?". Archived from the original on 2015-11-18. Retrieved 2015-11-01.