Total variation distance of probability measures

In probability theory, the total variation distance is a distance measure for probability distributions. It is an example of a statistical distance metric, and is sometimes called the statistical distance, statistical difference or variational distance.

Definition

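Consider a measurable space $(\Omega, \mathcal{F})$ and probability measures $P$ and $Q$ defined on $(\Omega, \mathcal{F})$. The total variation distance between $P$ and $Q$ is defined as:[1]

$$\delta(P, Q) = \sup_{A \in \mathcal{F}} \left| P(A) - Q(A) \right|.$$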

Informally, this is the largest possible difference between the probabilities that the two probability distributions can assign to the same event.
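On a finite outcome set every event can be listed, so the supremum in the definition becomes a maximum that can be checked by brute force. The sketch below assumes distributions are given as Python lists of outcome probabilities. The function names `tv_by_events` and `maximizing_event` are hypothetical. It also checks that the event $\{\omega : P(\{\omega\}) > Q(\{\omega\})\}$ attains the maximum.

```python
from itertools import combinations

def tv_by_events(p, q):
    """Largest |P(A) - Q(A)| over all events A of a finite outcome set.

    p and q give the probabilities of outcomes 0, ..., n-1. All 2**n
    subsets are enumerated, so this is only practical for small n.
    """
    n = len(p)
    best = 0.0
    for k in range(n + 1):
        for event in combinations(range(n), k):
            gap = abs(sum(p[i] for i in event) - sum(q[i] for i in event))
            best = max(best, gap)
    return best

def maximizing_event(p, q):
    """The event {x : P(x) > Q(x)}, which attains the supremum."""
    return [i for i in range(len(p)) if p[i] > q[i]]

p = [0.5, 0.3, 0.2]
q = [0.2, 0.3, 0.5]
a = maximizing_event(p, q)
print(tv_by_events(p, q))                               # approximately 0.3
print(a, sum(p[i] for i in a) - sum(q[i] for i in a))   # [0] and approximately 0.3
```

Exhaustive enumeration is exponential in the number of outcomes. It is used here only to make the supremum concrete.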

Properties

The total variation distance is an f-divergence and an integral probability metric.
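As an f-divergence, $\delta(P, Q) = \sum_x Q(x)\, f\!\left(P(x)/Q(x)\right)$ with generator $f(t) = |t - 1|/2$. Each term then reduces to $|P(x) - Q(x)|/2$. The minimal sketch below works on a finite set and assumes that $Q$ gives positive probability to every outcome, so that each ratio is defined. The helper names are hypothetical.

```python
def f_divergence(p, q, f):
    """D_f(P || Q) = sum over x of q(x) * f(p(x) / q(x)) on a finite set.

    Assumes q(x) > 0 for every outcome, so each ratio is defined.
    """
    return sum(qx * f(px / qx) for px, qx in zip(p, q))

def tv_generator(t):
    """Generator of the total variation distance: f(t) = |t - 1| / 2, with f(1) = 0."""
    return abs(t - 1) / 2

p = [0.5, 0.3, 0.2]
q = [0.2, 0.3, 0.5]
print(f_divergence(p, q, tv_generator))  # approximately 0.3
```

Other generators plugged into the same `f_divergence` function give other members of the family, for example $f(t) = t \log t$ for the Kullback–Leibler divergence.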

Relation to other distances

The total variation distance is related to the Kullback–Leibler divergence by Pinsker’s inequality:

$$\delta(P, Q) \le \sqrt{\tfrac{1}{2} D_{\mathrm{KL}}(P \parallel Q)}.$$

One also has the following inequality, due to Bretagnolle and Huber[2] (see, also, Tsybakov[3]), which has the advantage of providing a non-vacuous bound even when :

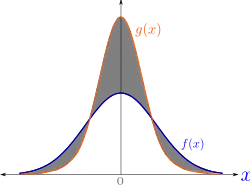
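The two bounds can be compared numerically on small discrete examples. The sketch below assumes finite distributions stored as lists and measures the Kullback–Leibler divergence in nats, with the natural logarithm, which is the convention under which Pinsker's constant $\tfrac{1}{2}$ applies. The two example pairs are illustrative choices.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats; assumes q(x) > 0 wherever p(x) > 0."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

def total_variation(p, q):
    return 0.5 * sum(abs(px - qx) for px, qx in zip(p, q))

def pinsker_bound(kl):
    return math.sqrt(kl / 2)

def bretagnolle_huber_bound(kl):
    return math.sqrt(1 - math.exp(-kl))

pairs = [
    ([0.5, 0.5], [0.4, 0.6]),      # close distributions: small divergence
    ([0.99, 0.01], [0.01, 0.99]),  # far apart: divergence above 2
]
for p, q in pairs:
    kl = kl_divergence(p, q)
    print(f"KL={kl:.3f}  TV={total_variation(p, q):.3f}  "
          f"Pinsker={pinsker_bound(kl):.3f}  BH={bretagnolle_huber_bound(kl):.3f}")
```

For the first pair Pinsker's bound is the tighter of the two. For the second the divergence is about 4.5, so Pinsker's bound exceeds 1, while the Bretagnolle–Huber bound stays below 1.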

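The total variation distance is half of the L1 distance between the probability functions. On discrete domains, this is the distance between probability mass functions:[4]

$$\delta(P, Q) = \frac{1}{2} \sum_{\omega \in \Omega} \left| P(\{\omega\}) - Q(\{\omega\}) \right|.$$

The relationship holds more generally as well:[5] when the distributions have standard probability density functions $p$ and $q$,

$$\delta(P, Q) = \frac{1}{2} \int \left| p(x) - q(x) \right| \, dx,$$

and analogously with the Radon–Nikodym derivatives of $P$ and $Q$ with respect to any common dominating measure in place of $p$ and $q$.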
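The density form can be checked by numerical integration. The sketch below compares the two numbers for two normal distributions with a common standard deviation $\sigma$. It uses the midpoint rule, and the integration range and grid size are illustrative assumptions. For such normals the densities cross at the midpoint of the two means, which gives the closed form $\delta = \operatorname{erf}\!\left(|\mu_1 - \mu_2| / (2\sqrt{2}\,\sigma)\right)$ used as the reference value.

```python
import math

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def tv_from_densities(p, q, lo, hi, n=100_000):
    """Approximate (1/2) * integral of |p(x) - q(x)| over [lo, hi] (midpoint rule).

    [lo, hi] should cover essentially all of the mass of both distributions.
    """
    h = (hi - lo) / n
    total = sum(abs(p(lo + (i + 0.5) * h) - q(lo + (i + 0.5) * h)) for i in range(n))
    return 0.5 * total * h

mu1, mu2, sigma = 0.0, 1.0, 1.0
approx = tv_from_densities(
    lambda x: normal_pdf(x, mu1, sigma),
    lambda x: normal_pdf(x, mu2, sigma),
    lo=-12.0, hi=13.0,
)
# Equal-variance normals: the densities cross at (mu1 + mu2) / 2, giving a closed form.
exact = math.erf(abs(mu2 - mu1) / (2 * math.sqrt(2) * sigma))
print(approx, exact)
```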

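The total variation distance is related to the Hellinger distance $H(P, Q)$ as follows:[6]

$$H^2(P, Q) \le \delta(P, Q) \le \sqrt{2}\, H(P, Q),$$

where $H$ is normalized so that $H^2(P, Q) = \frac{1}{2} \int \left( \sqrt{p} - \sqrt{q} \right)^2 d\nu$ for densities $p$ and $q$ with respect to a common dominating measure $\nu$.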

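These inequalities follow from comparing the 1-norm of $p - q$ with the 2-norm of $\sqrt{p} - \sqrt{q}$, after writing $|p - q| = |\sqrt{p} - \sqrt{q}|\,(\sqrt{p} + \sqrt{q})$. The lower bound uses $|\sqrt{p} - \sqrt{q}| \le \sqrt{p} + \sqrt{q}$, and the upper bound uses the Cauchy–Schwarz inequality.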
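A quick randomized check of the two Hellinger bounds on finite distributions is sketched below. It assumes the normalization $H^2 = \tfrac{1}{2}\sum(\sqrt{p} - \sqrt{q})^2$, under which $0 \le H \le 1$. The random test distributions, the seed, and the helper names are illustrative choices.

```python
import math
import random

def total_variation(p, q):
    return 0.5 * sum(abs(px - qx) for px, qx in zip(p, q))

def hellinger(p, q):
    """H(P, Q) with H^2 = (1/2) * sum (sqrt(p) - sqrt(q))^2, so that 0 <= H <= 1."""
    return math.sqrt(0.5 * sum((math.sqrt(px) - math.sqrt(qx)) ** 2 for px, qx in zip(p, q)))

def random_distribution(n, rng):
    """Normalize n uniform draws into a probability vector (illustrative only)."""
    w = [rng.random() for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]

rng = random.Random(0)
holds = True
for _ in range(1000):
    p, q = random_distribution(5, rng), random_distribution(5, rng)
    h, tv = hellinger(p, q), total_variation(p, q)
    # A small tolerance absorbs floating-point rounding.
    holds = holds and (h ** 2 <= tv + 1e-12) and (tv <= math.sqrt(2) * h + 1e-12)
print(holds)  # True
```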

Connection to transportation theory

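The total variation distance (or half the L1 norm) arises as the optimal transportation cost when the cost function is $c(x, y) = \mathbf{1}_{x \neq y}$, that is,

$$\frac{1}{2} \|P - Q\|_1 = \delta(P, Q) = \inf\left\{ \pi(x \neq y) : (x, y) \sim \pi,\ \pi \text{ is a coupling of } P \text{ and } Q \right\} = \inf_{\pi} \mathbb{E}_{\pi}\left[ \mathbf{1}_{x \neq y} \right],$$

where the expectation is taken with respect to the probability measure $\pi$ on the space where $(x, y)$ lives, and the infimum is taken over all such $\pi$ with marginals $P$ and $Q$, respectively.[7]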
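On a finite set the infimum is attained by the standard maximal coupling. This coupling places the shared mass $\min(P(x), Q(x))$ on the diagonal and pairs the leftover mass of $P$ with the leftover mass of $Q$ off the diagonal. The sketch below builds this coupling for list-valued distributions and checks that $\Pr(X \neq Y)$ equals the total variation distance. The function name `maximal_coupling` is hypothetical.

```python
def maximal_coupling(p, q):
    """Joint distribution pi[x][y] with marginals p and q minimizing Pr(X != Y).

    Returns (pi, tv), where tv = 1 - sum_x min(p[x], q[x]) equals
    (1/2) * sum_x |p[x] - q[x]|.
    """
    n = len(p)
    overlap = [min(px, qx) for px, qx in zip(p, q)]
    tv = 1.0 - sum(overlap)
    # Put the shared mass min(p, q) on the diagonal, where X == Y.
    pi = [[0.0] * n for _ in range(n)]
    for x in range(n):
        pi[x][x] = overlap[x]
    if tv > 0:
        # Pair the leftover mass of P (rows) with the leftover mass of Q
        # (columns) independently. At each x at most one leftover is nonzero,
        # so this term never adds mass to the diagonal.
        for x in range(n):
            for y in range(n):
                pi[x][y] += (p[x] - overlap[x]) * (q[y] - overlap[y]) / tv
    return pi, tv

p = [0.5, 0.3, 0.2]
q = [0.2, 0.3, 0.5]
pi, tv = maximal_coupling(p, q)
mismatch = sum(pi[x][y] for x in range(3) for y in range(3) if x != y)
print(tv, mismatch)  # both approximately 0.3
```

No coupling can do better. Any coupling satisfies $\pi(x, x) \le \min(P(x), Q(x))$, so $\Pr(X = Y) \le \sum_x \min(P(x), Q(x))$, and therefore $\Pr(X \neq Y) \ge \delta(P, Q)$.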

References

- Chatterjee, Sourav. "Distances between probability measures". UC Berkeley. Archived from the original on 8 July 2008. Retrieved 21 June 2013.

- Bretagnolle, J.; Huber, C. (1978). "Estimation des densités: risque minimax". Séminaire de Probabilités XII (Univ. Strasbourg, Strasbourg, 1976/1977). Lecture Notes in Mathematics, vol. 649. Berlin: Springer. pp. 342–363. Lemma 2.1. (In French.)

- Tsybakov, Alexandre B. (2009). Introduction to Nonparametric Estimation. Revised and extended from the 2004 French original; translated by Vladimir Zaiats. Springer Series in Statistics. New York: Springer. xii+214 pp. ISBN 978-0-387-79051-0. Equation 2.25.

- Levin, David A.; Peres, Yuval; Wilmer, Elizabeth L. (2017). Markov Chains and Mixing Times (2nd rev. ed.). AMS. Proposition 4.2, p. 48.

- Tsybakov, Alexandre B. (2009). Introduction to Nonparametric Estimation (revised and extended version of the French book). New York: Springer. Lemma 2.1. ISBN 978-0-387-79051-0.

- Harsha, Prahladh (23 September 2011). "Lecture notes on communication complexity".

- Villani, Cédric (2009). Optimal Transport, Old and New. Grundlehren der mathematischen Wissenschaften, vol. 338. Berlin, Heidelberg: Springer-Verlag. p. 10. doi:10.1007/978-3-540-71050-9. ISBN 978-3-540-71049-3.