Uniform integrability

In mathematics, uniform integrability is an important concept in real analysis, functional analysis and measure theory, and plays a vital role in the theory of martingales.

Measure-theoretic definition

Uniform integrability is an extension of the notion of a family of functions being dominated in $L^1$, which is central in dominated convergence. Several textbooks on real analysis and measure theory use the following definition:[1][2]

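Definition A: Let $(X,\mathfrak{M},\mu)$ be a positive measure space. A set $\Phi\subset L^1(\mu)$ is called uniformly integrable if $\sup_{f\in\Phi}\|f\|_{L^1(\mu)}<\infty$, and to each $\varepsilon>0$ there corresponds a $\delta>0$ such that

$$\int_E |f|\,d\mu<\varepsilon$$

whenever $f\in\Phi$ and $\mu(E)<\delta$.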

Definition A is rather restrictive for infinite measure spaces. A more general definition[3] of uniform integrability that works well in general measure spaces was introduced by G. A. Hunt.

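Definition H: Let $(X,\mathfrak{M},\mu)$ be a positive measure space. A set $\Phi\subset L^1(\mu)$ is called uniformly integrable if and only if

$$\inf_{g\in L^1_+(\mu)}\sup_{f\in\Phi}\int_{\{|f|>g\}}|f|\,d\mu=0$$

where $L^1_+(\mu)=\{g\in L^1(\mu):g\geq 0\}$.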

For finite measure spaces the following result[4] follows from Definition H:

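Theorem 1: If $(X,\mathfrak{M},\mu)$ is a (positive) finite measure space, then a set $\Phi\subset L^1(\mu)$ is uniformly integrable if and only if

$$\inf_{a\geq 0}\sup_{f\in\Phi}\int_{\{|f|>a\}}|f|\,d\mu=0.$$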

Many textbooks in probability present Theorem 1 as the definition of uniform integrability in probability spaces. When the space $(X,\mathfrak{M},\mu)$ is $\sigma$-finite, Definition H yields the following equivalence:

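Theorem 2: Let $(X,\mathfrak{M},\mu)$ be a $\sigma$-finite measure space, and let $h\in L^1(\mu)$ be such that $h>0$ almost everywhere. A set $\Phi\subset L^1(\mu)$ is uniformly integrable if and only if $\sup_{f\in\Phi}\|f\|_{L^1(\mu)}<\infty$, and for any $\varepsilon>0$, there exists $\delta>0$ such that

$$\sup_{f\in\Phi}\int_A|f|\,d\mu<\varepsilon$$

whenever $\int_A h\,d\mu<\delta$.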

In particular, the equivalence of Definitions A and H for finite measures follows immediately from Theorem 2; for this case, the statement in Definition A is obtained by taking $h\equiv 1$ in Theorem 2.

Probability definition

In the theory of probability, Definition A or the statement of Theorem 1 is often presented as the definition of uniform integrability, using the notation of expectation of random variables,[5][6][7] that is,

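1. A class $\mathcal{C}$ of random variables is called uniformly integrable if:

- There exists a finite $M$ such that, for every $X$ in $\mathcal{C}$, $\operatorname{E}(|X|)\leq M$, and

- For every $\varepsilon>0$ there exists $\delta>0$ such that, for every measurable $A$ such that $P(A)\leq\delta$ and every $X$ in $\mathcal{C}$, $\operatorname{E}(|X|I_A)\leq\varepsilon$.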

or alternatively

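2. A class $\mathcal{C}$ of random variables is called uniformly integrable (UI) if for every $\varepsilon>0$ there exists $K\in[0,\infty)$ such that $\operatorname{E}(|X|I_{|X|\geq K})\leq\varepsilon$ for all $X\in\mathcal{C}$, where $I_{|X|\geq K}$ is the indicator function $$I_{|X|\geq K}=\begin{cases}1&\text{if }|X|\geq K,\\0&\text{if }|X|<K.\end{cases}$$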

Tightness and uniform integrability

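One consequence of uniform integrability of a class $\mathcal{C}$ of random variables is that the family of laws or distributions $\{P\circ|X|^{-1}(\cdot):X\in\mathcal{C}\}$ is tight. That is, for each $\delta>0$, there exists $a>0$ such that

$$P(|X|>a)\leq\delta$$

for all $X\in\mathcal{C}$.[8]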
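The tightness bound can be sketched with Markov's inequality. The derivation below is only an illustration: it writes $\mathcal{C}$ for the class of random variables, assumes a constant $M>0$ with $\operatorname{E}(|X|)\leq M$ for every $X\in\mathcal{C}$ (uniform integrability provides such a bound), and picks $a=M/\delta$, which is one convenient choice rather than the only one.

```latex
P(|X| > a) \;\le\; \frac{\operatorname{E}(|X|)}{a} \;\le\; \frac{M}{a} \;=\; \delta
\qquad \text{for } a = \frac{M}{\delta} \text{ and every } X \in \mathcal{C}.
```

Only boundedness in $L^1$ is used here, so tightness of the laws is a strictly weaker property than uniform integrability.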

This, however, does not mean that the family of measures $\mathcal{V}_{\mathcal{C}}:=\{\mu_X:A\mapsto\int_A|X|\,dP,\ X\in\mathcal{C}\}$ is tight. (In any case, tightness would require a topology on $\Omega$ in order to be defined.)

Uniform absolute continuity

There is another notion of uniformity, slightly different from uniform integrability, which also has many applications in probability and measure theory, and which does not require random variables to have a finite integral.[9]

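Definition: Suppose $(\Omega,\mathcal{F},P)$ is a probability space. A class $\mathcal{C}$ of random variables is uniformly absolutely continuous with respect to $P$ if for any $\varepsilon>0$, there is $\delta>0$ such that $\operatorname{E}(|X|I_A)<\varepsilon$ for every $X\in\mathcal{C}$ whenever $P(A)<\delta$.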

It is equivalent to uniform integrability if the measure is finite and has no atoms.

The term "uniform absolute continuity" is not standard, but is used by some authors.[10][11]

Related corollaries

The following results apply to the probabilistic definition.[12]

- Definition 2 could be rewritten by taking the limits as $$\lim_{K\to\infty}\sup_{X\in\mathcal{C}}\operatorname{E}(|X|I_{|X|\geq K})=0.$$

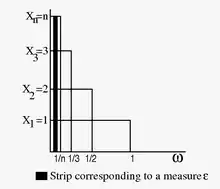

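- A non-UI sequence. Let $\Omega=[0,1]\subset\mathbb{R}$, and define $$X_n(\omega)=\begin{cases}n,&\omega\in(0,1/n),\\0,&\text{otherwise.}\end{cases}$$ Clearly $X_n\in L^1$, and indeed $\operatorname{E}(|X_n|)=1$ for all $n$. However, $$\operatorname{E}(|X_n|I_{\{|X_n|\geq K\}})=1\quad\text{for all }n\geq K,$$ and comparing with definition 2, it is seen that the sequence is not uniformly integrable.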

- By using Definition 1 in the above example, it can be seen that the first clause is satisfied, as the $L^1$ norms of all $X_n$ are 1, i.e., bounded. But the second clause does not hold: given any positive $\delta$, there is an interval $(0,1/n)$ with measure less than $\delta$ and $\operatorname{E}(|X_m|I_{(0,1/n)})=1$ for all $m\geq n$.

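- If $X$ is a UI random variable, by splitting $$\operatorname{E}(|X|)=\operatorname{E}(|X|I_{\{|X|\geq K\}})+\operatorname{E}(|X|I_{\{|X|<K\}})$$ and bounding each of the two terms, it can be seen that a uniformly integrable random variable is always bounded in $L^1$.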

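- If any sequence of random variables $X_n$ is dominated by an integrable, non-negative $Y$, that is, for all $\omega$ and $n$, $$|X_n(\omega)|\leq Y(\omega),\quad Y(\omega)\geq 0,\quad\operatorname{E}(Y)<\infty,$$ then the class $\mathcal{C}$ of random variables $\{X_n\}$ is uniformly integrable.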

- A class of random variables bounded in $L^p$ ($p>1$) is uniformly integrable.
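The non-UI sequence above can be checked with exact arithmetic. The following is a minimal sketch, assuming the sequence $X_n=n$ on $(0,1/n)$ and $0$ elsewhere on $[0,1]$ with Lebesgue measure; the function names `tail_expectation` and `sup_tail` and the truncation parameter `n_max` are hypothetical and introduced only for illustration.

```python
from fractions import Fraction


def tail_expectation(n: int, K: int) -> Fraction:
    """E(|X_n| 1{|X_n| >= K}) for X_n = n on (0, 1/n) and 0 elsewhere on [0, 1]."""
    # X_n equals n on a set of probability 1/n. If n >= K the whole mass
    # n * (1/n) = 1 lies in the tail; if n < K the tail event is empty.
    return n * Fraction(1, n) if n >= K else Fraction(0)


def sup_tail(K: int, n_max: int) -> Fraction:
    """Largest tail expectation over n = 1, ..., n_max (a finite truncation)."""
    # The true supremum runs over all n and equals 1 for every K; the
    # truncation at n_max only sees this when K <= n_max.
    return max(tail_expectation(n, K) for n in range(1, n_max + 1))


# Raising the threshold K does not shrink the supremum of the tail terms,
# which is why the sequence fails the tail condition for uniform integrability.
for K in (1, 10, 100, 1000):
    print(K, sup_tail(K, n_max=1000))
```

The truncation at `n_max` is a limitation of the sketch rather than of the argument: for any fixed threshold, an index at least as large as the threshold already contributes a tail expectation of 1.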

Relevant theorems

In the following we use the probabilistic framework, but the results hold regardless of the finiteness of the measure, provided the boundedness condition is added on the chosen subset of $L^1(\mu)$.

- Dunford–Pettis theorem:[13][14] A class of random variables $X_n\subset L^1(\mu)$ is uniformly integrable if and only if it is relatively compact for the weak topology $\sigma(L^1,L^\infty)$.

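- de la Vallée-Poussin theorem:[15][16] The family $\{X_\alpha\}_{\alpha\in\mathrm{A}}\subset L^1(\mu)$ is uniformly integrable if and only if there exists a non-negative increasing convex function $G(t)$ such that $$\lim_{t\to\infty}\frac{G(t)}{t}=\infty\quad\text{and}\quad\sup_\alpha\operatorname{E}(G(|X_\alpha|))<\infty.$$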
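The easier direction of the de la Vallée-Poussin criterion can be sketched in a few lines. The derivation below assumes a non-negative function $G$ with $G(t)/t\to\infty$ as $t\to\infty$ and a finite constant $C:=\sup_\alpha\operatorname{E}(G(|X_\alpha|))$; the symbols $C$, $M$ and $K$ are introduced here for illustration only.

```latex
% Given M > 0, choose K so that G(t)/t >= M for all t >= K.
% On the event {|X_\alpha| >= K} this gives |X_\alpha| <= G(|X_\alpha|)/M, hence
\operatorname{E}\bigl(|X_\alpha|\, I_{\{|X_\alpha| \ge K\}}\bigr)
  \;\le\; \frac{1}{M}\,\operatorname{E}\bigl(G(|X_\alpha|)\, I_{\{|X_\alpha| \ge K\}}\bigr)
  \;\le\; \frac{1}{M}\,\operatorname{E}\bigl(G(|X_\alpha|)\bigr)
  \;\le\; \frac{C}{M}
  \qquad \text{for every } \alpha .
```

Taking $M=C/\varepsilon$ makes the right-hand side at most $\varepsilon$ uniformly in $\alpha$. With $G(t)=t^p$ for some $p>1$, the same estimate shows that a family bounded in $L^p$ is uniformly integrable.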

Relation to convergence of random variables

A sequence $\{X_n\}$ converges to $X$ in the $L^1$ norm if and only if it converges in measure to $X$ and it is uniformly integrable. In probability terms, a sequence of random variables converging in probability also converges in the mean if and only if it is uniformly integrable.[17] This is a generalization of Lebesgue's dominated convergence theorem; see Vitali convergence theorem.
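A short worked example shows why uniform integrability cannot be dropped from this statement. It assumes the probability space $[0,1]$ with Lebesgue measure and the sequence $X_n=n\,I_{(0,1/n)}$, which is not uniformly integrable; the symbol $\eta$ is introduced here for the tolerance in convergence in probability.

```latex
% Convergence in probability to 0: for any \eta > 0,
P(|X_n - 0| > \eta) \;\le\; P\bigl((0, 1/n)\bigr) \;=\; \frac{1}{n} \;\longrightarrow\; 0 ,
% but there is no convergence in the mean:
\operatorname{E}(|X_n - 0|) \;=\; n \cdot \frac{1}{n} \;=\; 1 \quad \text{for all } n .
```

The sequence therefore converges to $0$ in probability while its $L^1$ distance to $0$ stays equal to 1.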

Citations

- Rudin, Walter (1987). Real and Complex Analysis (3 ed.). Singapore: McGraw–Hill Book Co. p. 133. ISBN 0-07-054234-1.

- Royden, H.L. & Fitzpatrick, P.M. (2010). Real Analysis (4 ed.). Boston: Prentice Hall. p. 93. ISBN 978-0-13-143747-0.

- Hunt, G. A. (1966). Martingales et Processus de Markov. Paris: Dunod. p. 254.

- Klenke, A. (2008). Probability Theory: A Comprehensive Course. Berlin: Springer Verlag. pp. 134–137. ISBN 978-1-84800-047-6.

- Williams, David (1997). Probability with Martingales (Repr. ed.). Cambridge: Cambridge Univ. Press. pp. 126–132. ISBN 978-0-521-40605-5.

- Gut, Allan (2005). Probability: A Graduate Course. Springer. pp. 214–218. ISBN 0-387-22833-0.

- Bass, Richard F. (2011). Stochastic Processes. Cambridge: Cambridge University Press. pp. 356–357. ISBN 978-1-107-00800-7.

- Gut 2005, p. 236.

- Bass 2011, p. 356.

- Benedetto, J. J. (1976). Real Variable and Integration. Stuttgart: B. G. Teubner. p. 89. ISBN 3-519-02209-5.

- Burrill, C. W. (1972). Measure, Integration, and Probability. McGraw-Hill. p. 180. ISBN 0-07-009223-0.

- Gut 2005, pp. 215–216.

- Dunford, Nelson (1938). "Uniformity in linear spaces". Transactions of the American Mathematical Society. 44 (2): 305–356. doi:10.1090/S0002-9947-1938-1501971-X. ISSN 0002-9947.

- Dunford, Nelson (1939). "A mean ergodic theorem". Duke Mathematical Journal. 5 (3): 635–646. doi:10.1215/S0012-7094-39-00552-1. ISSN 0012-7094.

- Meyer, P. A. (1966). Probability and Potentials. New York: Blaisdell Publishing Co. p. 19, Theorem T22.

- Poussin, C. De La Vallee (1915). "Sur L'Integrale de Lebesgue". Transactions of the American Mathematical Society. 16 (4): 435–501. doi:10.2307/1988879. hdl:10338.dmlcz/127627. JSTOR 1988879.

- Bogachev, Vladimir I. (2007). Measure Theory Volume I. Berlin Heidelberg: Springer-Verlag. p. 268. doi:10.1007/978-3-540-34514-5_4. ISBN 978-3-540-34513-8.

References

- Shiryaev, A.N. (1995). Probability (2 ed.). New York: Springer-Verlag. pp. 187–188. ISBN 978-0-387-94549-1.

- Diestel, J. & Uhl, J. (1977). Vector Measures. Mathematical Surveys 15. Providence, RI: American Mathematical Society. ISBN 978-0-8218-1515-1.