Video synopsis

Video synopsis is a method for automatically synthesizing a short, informative summary of a video. Unlike traditional video summarization, the synopsis is not just composed of frames from the original video.[1] The algorithm detects, tracks and analyzes moving objects (also called events) and stores them in a database of objects and activities.[2] The final output is a new, short video clip in which objects and activities that originally occurred at different times are displayed simultaneously, so as to convey information in the shortest possible time. Video synopsis has specific applications in video analytics and video surveillance where, despite technological advances and the growing deployment of CCTV (closed-circuit television) cameras,[3] viewing and analyzing recorded footage is still a costly, labor-intensive and time-consuming task.

Technology overview

Video synopsis combines a visual summary of stored video together with an indexing mechanism.

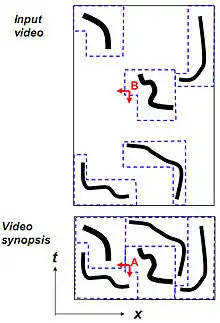

When a summary is required, all objects from the target period are collected and shifted in time to create a much shorter synopsis video showing maximum activity. The synopsis video clip is generated in real time, and objects and activities that originally occurred at different times are displayed in it simultaneously.[4]

The process begins by detecting and tracking objects of interest. Each object is represented as a tube in space-time of all video frames. Objects are detected and stored in a database in approximately real time.
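As an illustration of the tube representation described above, the following is a minimal Python sketch, not the published implementation. The `Tube` class, the `build_tubes` helper and the `(frame, object_id, box)` record layout are hypothetical names chosen for this example, and the sketch assumes that a tracker has already assigned an identifier to every detection and that each object is visible in consecutive frames.

```python
from dataclasses import dataclass, field


@dataclass
class Tube:
    """One tracked object: a bounding box per frame, starting at start_frame."""
    object_id: int
    start_frame: int
    boxes: list = field(default_factory=list)  # one (x, y, w, h) per consecutive frame

    @property
    def end_frame(self):
        # Exclusive end: the first frame in which the object is no longer present.
        return self.start_frame + len(self.boxes)

    def box_at(self, frame):
        """Return the box at an absolute frame index, or None if the object is absent."""
        if self.start_frame <= frame < self.end_frame:
            return self.boxes[frame - self.start_frame]
        return None


def build_tubes(detections):
    """Group (frame, object_id, box) records from a tracker into tubes.

    Assumption: each object appears in consecutive frames with no gaps.
    """
    tubes = {}
    # Sort by object, then by frame, so the first record of each object
    # determines the start frame of its tube.
    for frame, object_id, box in sorted(detections, key=lambda d: (d[1], d[0])):
        tube = tubes.setdefault(object_id, Tube(object_id, frame))
        tube.boxes.append(box)
    return list(tubes.values())
```

In a database-backed system, each tube would be stored together with its original time span so that it can later be retrieved for any requested period.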

Following a request to summarize a time period, all objects from the desired time are extracted from the database, and indexed to create a much shorter summary video containing maximum activity.

Real-time rendering is used to generate the summary video after the objects have been re-timed. This gives the end user control over object/event density.
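The re-timing step can be illustrated with a small Python sketch. This is a simple greedy placement written for illustration only, not the optimization used in the published work. The function names, the `(original_start, boxes)` tube layout and the `max_collisions` parameter are assumptions of this example; `max_collisions` stands in for a user-controlled density setting, since tolerating more overlap packs objects more tightly.

```python
def boxes_overlap(a, b):
    """True if two (x, y, w, h) boxes intersect."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def collisions(boxes_a, start_a, boxes_b, start_b):
    """Count the frames in which two tubes overlap spatially for the given start times."""
    first = max(start_a, start_b)
    last = min(start_a + len(boxes_a), start_b + len(boxes_b))
    return sum(
        boxes_overlap(boxes_a[f - start_a], boxes_b[f - start_b])
        for f in range(first, last)
    )


def retime(tubes, max_collisions=0):
    """Greedily assign each tube a new start frame in the synopsis.

    tubes: list of (original_start, boxes), with one (x, y, w, h) box per frame.
    Returns the new start frames, in the same order as the input.
    """
    # Place tubes in their original chronological order.
    order = sorted(range(len(tubes)), key=lambda i: tubes[i][0])
    new_starts = [0] * len(tubes)
    placed = []
    for i in order:
        boxes = tubes[i][1]
        start = 0
        # Slide the tube later until it collides little enough with placed tubes.
        while sum(
            collisions(boxes, start, tubes[j][1], new_starts[j]) for j in placed
        ) > max_collisions:
            start += 1
        new_starts[i] = start
        placed.append(i)
    return new_starts
```

For example, two objects that cross the same image region a thousand frames apart are played back to back, while an object in a different region is shown at the same time as the first. Raising `max_collisions` shortens the synopsis at the cost of overlapping objects.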

Video synopsis technology was invented by Prof. Shmuel Peleg[5] of The Hebrew University of Jerusalem, Israel, and is being developed under commercial license by BriefCam, Ltd.[6] BriefCam received a license to use the technology from Yissum, which owns the patents registered for the technology. In May 2018, BriefCam was acquired by Japanese digital imaging giant Canon Inc. for an estimated $90 million.[7] Investors in the company include Motorola Solutions Venture Capital, Aviv Venture Capital, and OurCrowd.[8]


Recent advances

Recent advances in the field of video synopsis have resulted in methods that focus on collecting key points (or frames) from the long uncut video and presenting them as a chain of key events that summarize the video. This is only one of the many methods employed in the modern literature to perform this task.[9] More recently, these event-driven methods have focused on correlating objects across frames in a more semantically related way, an approach that has been called story-driven video summarization. These methods have been shown to work well in egocentric[10] settings, where the video is recorded from the point of view of a single person or a group of people.

Classification

Video synopsis techniques share a number of standard properties, which can be stated as follows: (a) the video synopsis should contain the maximum activity with the least redundancy; (b) the chronological order and spatial consistency of objects in space and time must be preserved; (c) collisions between objects in the resulting synopsis video must be minimal; and (d) the synopsis video must be smooth and must permit viewing without losing the region of interest.[11] Techniques are commonly classified into the following categories:

- Keyframe-Based Synopsis

- Object-Based Synopsis

- Action-Based Synopsis

- Collision Graph-Based Synopsis

- Abnormal Content-Based Synopsis
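Properties (a) to (c) given at the start of this section can be turned into simple numeric measures. The Python sketch below is one hypothetical way to do so and is not taken from the cited survey: the `evaluate` function, its `(original_start, boxes)` tube layout and the names of the returned measures are assumptions of this example. Smoothness, property (d), is not measured.

```python
from itertools import combinations


def boxes_overlap(a, b):
    """True if two (x, y, w, h) boxes intersect."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def evaluate(tubes, new_starts):
    """Score a synopsis.

    tubes: list of (original_start, boxes), with one (x, y, w, h) box per frame.
    new_starts: start frame of each tube in the synopsis (assumed to be >= 0).
    """
    original_frames = max(s + len(b) for s, b in tubes) - min(s for s, _ in tubes)
    synopsis_frames = max(ns + len(b) for ns, (_, b) in zip(new_starts, tubes))
    collision_frames = 0
    order_violations = 0
    for i, j in combinations(range(len(tubes)), 2):
        (si, bi), (sj, bj) = tubes[i], tubes[j]
        ni, nj = new_starts[i], new_starts[j]
        # (b) A pair violates chronological order if its relative order flips.
        if (si - sj) * (ni - nj) < 0:
            order_violations += 1
        # (c) Count the synopsis frames in which the two objects overlap.
        for f in range(max(ni, nj), min(ni + len(bi), nj + len(bj))):
            if boxes_overlap(bi[f - ni], bj[f - nj]):
                collision_frames += 1
    return {
        "original_frames": original_frames,
        "synopsis_frames": synopsis_frames,
        # (a) A lower ratio means the same activity is shown in less time.
        "length_ratio": synopsis_frames / original_frames,
        "collision_frames": collision_frames,
        "order_violations": order_violations,
    }
```

Measures of this kind make it possible to compare two candidate synopses of the same footage, for instance one that is shorter but has more collisions against one that is longer and collision-free.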

References

1. Mademlis, Ioannis; Tefas, Anastasios; Pitas, Ioannis (2018). "A salient dictionary learning framework for activity video summarization via key-frame extraction". Information Sciences. Elsevier. 432: 319–331. doi:10.1016/j.ins.2017.12.020. Retrieved 4 December 2022.

2. Y. Pritch, S. Ratovitch, A. Hendel, and S. Peleg, Clustered Synopsis of Surveillance Video, 6th IEEE Int. Conf. on Advanced Video and Signal Based Surveillance (AVSS'09), Genoa, Italy, Sept. 2-4, 2009.

3. Y. Pritch, A. Rav-Acha, A. Gutman, and S. Peleg, Webcam Synopsis: Peeking Around the World, ICCV'07, October 2007. 8p.

4. Y. Pritch, A. Rav-Acha, and S. Peleg, Nonchronological Video Synopsis and Indexing, IEEE Trans. PAMI, Vol 30, No 11, Nov. 2008, pp. 1971-1984.

5. A. Rav-Acha, Y. Pritch, and S. Peleg, Making a Long Video Short: Dynamic Video Synopsis, CVPR'06, June 2006, pp. 435-441.

6. S. Peleg, Y. Caspi, BriefCam White Paper.

7. Yablonko, Yasmin (9 May 2018). "Canon buys Israeli video solutions co BriefCam for $90m". Globes (in Hebrew). Retrieved 2018-12-05.

8. CB Insights. "BriefCam Funding & Investors - CB Insights". www.cbinsights.com. Retrieved 2018-12-05.

9. Muhammad Ajmal, Muhammad Husnain Ashraf, Muhammad Shakir, Yasir Abbas, Faiz Ali Shah, Video Summarization: Techniques and Classification.

10. Zheng Lu and Kristen Grauman, Story-driven summarization for egocentric video, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2013.

11. Ingle, P.Y. and Kim, Y.G., 2023. Video Synopsis Algorithms and Framework: A Survey and Comparative Evaluation. Systems, 11(2), p.108.

Patents

- US Patent 8,311,277 - Method and System for Video Indexing and Video Synopsis. Also Australian Patent AU2007345938. Filed in 9 additional countries

- US Patent 8,102,406 - Method and System for Producing a Video Synopsis. Also European Patent EP1955205 and Japanese Patent JP4972095. Filed in 7 additional countries

- US Patent 7,852,370 - Method and System for Spatio-Temporal Video Warping. Also Israeli Patent IL182006. Filed in Europe: PCT/IL2005/001150

- The patents are owned by Yissum, which has licensed the technology to BriefCam.

External links

- Video Synopsis and Indexing project Further information, published papers and video clips from the School of Computer Science, The Hebrew University of Jerusalem

- BriefCam company website Includes demonstration video on using video synopsis technology.

- Yissum's Website

- Video Synopsis paper in Hebrew - 2008

- Demo video for paper: Story-Driven Summarization for Egocentric Video - Zheng Lu and Kristen Grauman - CVPR 2013