Binaural fusion

Binaural fusion, or binaural integration, is a cognitive process that combines the different auditory information presented to each ear (binaurally). In humans, this process is essential for understanding speech, because one ear may pick up more information about a speech stimulus than the other.

The process of binaural fusion is important for computing the location of sound sources in the horizontal plane (sound localization) and for sound segregation.[1] Sound segregation refers to the ability to identify acoustic components from one or more sound sources.[2] The binaural auditory system is highly dynamic and capable of rapidly adjusting its tuning properties depending on the context in which sounds are heard. Each eardrum moves one-dimensionally; the auditory brain analyzes and compares the movements of both eardrums to extract physical cues and synthesize auditory objects.[3]

When stimulation from a sound reaches the ear, the eardrum deflects in a mechanical fashion, and the three middle ear bones (ossicles) transmit the mechanical signal to the cochlea, where hair cells transform the mechanical signal into an electrical signal. The auditory nerve, also called the cochlear nerve, then transmits action potentials to the central auditory nervous system.[3]

In binaural fusion, inputs from both ears are integrated and fused at the brainstem to create a complete auditory picture. The signals sent to the central auditory nervous system therefore represent this complete picture, that is, information integrated from both ears rather than from a single ear.

The binaural squelch effect is a result of nuclei of the brainstem processing timing, amplitude, and spectral differences between the two ears. Sounds are integrated and then separated into auditory objects. For this effect to take place, neural integration from both sides is required.[4]

Anatomy

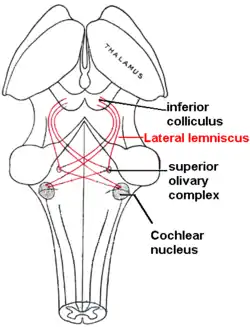

As sound travels into the inner ear of mammals, it encounters the hair cells that line the basilar membrane of the cochlea.[5] The cochlea receives the auditory information that is to be binaurally integrated. At the cochlea, this information is converted into electrical impulses that travel along the cochlear nerve, which runs from the cochlea to the ventral cochlear nucleus in the pons of the brainstem.[6] The lateral lemniscus projects from the cochlear nucleus to the superior olivary complex (SOC), a set of brainstem nuclei that consists primarily of two nuclei, the medial superior olive (MSO) and the lateral superior olive (LSO), and is the major site of binaural fusion. The subdivision of the ventral cochlear nucleus that concerns binaural fusion is the anterior ventral cochlear nucleus (AVCN).[3] The AVCN consists of spherical bushy cells and globular bushy cells and can also transmit signals to the medial nucleus of the trapezoid body (MNTB), whose neurons project to the MSO. Transmissions from the SOC travel to the inferior colliculus (IC) via the lateral lemniscus. At the level of the IC, binaural fusion is complete. The signal ascends to the thalamocortical system, and sensory inputs to the thalamus are then relayed to the primary auditory cortex.[3][7][8][9]

Function

The ear functions to analyze and encode a sound’s dimensions.[10] Binaural fusion prevents a sound source and its reflections from being perceived as multiple sound images. The advantages of this phenomenon are most noticeable in small rooms and decrease as the reflective surfaces are placed farther from the listener.[11]

Central auditory system

The central auditory system converges inputs from both ears (which individually contain no explicit spatial information) onto single neurons within the brainstem. This system contains many subcortical sites that have integrative functions. The auditory nuclei collect, integrate, and analyze the afferent supply;[10] the outcome is a representation of auditory space.[3] The subcortical auditory nuclei are responsible for extracting and analyzing the dimensions of sounds.[10]

The integration of a sound stimulus is a result of analyzing frequency (pitch), intensity, and spatial localization of the sound source.[12] Once a sound source has been identified, the cells of lower auditory pathways are specialized to analyze physical sound parameters.[3] Summation is observed when the loudness of a sound from one stimulus is perceived as having been doubled when heard by both ears instead of only one. This process of summation is called binaural summation and is the result of different acoustics at each ear, depending on where sound is coming from.[4]

The cochlear nerve spans from the cochlea of the inner ear to the ventral cochlear nuclei located in the pons of the brainstem, relaying auditory signals to the superior olivary complex, where they are binaurally integrated.

Medial superior olive and lateral superior olive

The MSO contains cells that function in comparing inputs from the left and right cochlear nuclei.[13] The tuning of neurons in the MSO favors low frequencies, whereas those in the LSO favor high frequencies.[14]

GABAB receptors in the LSO and MSO are involved in balancing excitatory and inhibitory inputs. These receptors are coupled to G proteins and provide a way of regulating synaptic efficacy. Specifically, GABAB receptors modulate excitatory and inhibitory inputs to the LSO.[3] Whether a GABAB receptor acts as excitatory or inhibitory for the postsynaptic neuron depends on the exact location and action of the receptor.[1]

Sound localization

Sound localization is the ability to correctly identify the direction from which a sound arrives. The position of a sound source in the horizontal plane is called its azimuth; its position in the vertical plane is called its elevation. Differences in the time, intensity, and spectrum of the sound arriving at the two ears are used in localization. Low-frequency sounds are localized by analyzing the interaural time difference (ITD), and high-frequency sounds by analyzing the interaural level difference (ILD).[4]
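As a rough illustration of these two cues, the following sketch estimates ITD and ILD from a pair of sampled ear signals. It is a signal-processing analogy rather than a model of the neural computation: ITD is taken as the lag that maximizes the cross-correlation of the two signals, and ILD as the ratio of their root-mean-square levels in decibels. The function names, the 48 kHz sample rate, and the synthetic tone burst are all assumptions made for the example.

```python
import math


def estimate_itd(left, right, sample_rate, max_lag):
    """Estimate the ITD in seconds as the lag that maximizes the cross-correlation.

    A positive result means the sound reached the left ear first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Compare left[n] with right[n + lag]; samples outside the buffer are skipped.
        score = sum(
            left[n] * right[n + lag]
            for n in range(len(left))
            if 0 <= n + lag < len(right)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


def rms(signal):
    """Root-mean-square amplitude of a sampled signal."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def estimate_ild_db(left, right):
    """Estimate the ILD in decibels; positive means the left ear is louder."""
    return 20.0 * math.log10(rms(left) / rms(right))


def make_ear_signals(sample_rate=48000, delay_samples=24, right_gain=0.5):
    """Hypothetical stimulus: a 500 Hz tone burst that reaches the right ear later and quieter."""
    n_samples = 960
    left = [
        math.exp(-((n - 480) / 60.0) ** 2) * math.sin(2 * math.pi * 500 * n / sample_rate)
        for n in range(n_samples)
    ]
    right = [0.0] * delay_samples + [right_gain * v for v in left[: n_samples - delay_samples]]
    return left, right


left, right = make_ear_signals()
itd = estimate_itd(left, right, sample_rate=48000, max_lag=48)
ild = estimate_ild_db(left, right)
print(f"ITD = {itd * 1e3:.2f} ms, ILD = {ild:.2f} dB")  # ITD = 0.50 ms, ILD = 6.02 dB
```

The search is limited to lags of about one millisecond (`max_lag=48` samples at 48 kHz), so that a periodic signal does not produce a spurious match one full cycle away.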

Mechanism

Binaural hearing

Action potentials originate in the hair cells of the cochlea and propagate to the brainstem; both the timing of these action potentials and the signal they transmit provide information to the SOC about the orientation of sound in space. The processing and propagation of action potentials is rapid, and therefore, information about the timing of the sounds that were heard, which is crucial to binaural processing, is conserved.[15] Each eardrum moves in one dimension, and the auditory brain analyzes and compares the movements of both eardrums in order to synthesize auditory objects.[3] This integration of information from both ears is the essence of binaural fusion. The binaural system of hearing involves sound localization in the horizontal plane, contrasting with the monaural system of hearing, which involves sound localization in the vertical plane.[3]

Superior olivary complex

The primary stage of binaural fusion, the processing of binaural signals, occurs at the SOC, where afferent fibers of the left and right auditory pathways first converge. This processing occurs because of the interaction of excitatory and inhibitory inputs in the LSO and MSO.[1][3][13] The SOC processes and integrates binaural information, in the form of ITD and ILD, entering the brainstem from the cochleae. This initial processing of ILD and ITD is regulated by GABAB receptors.[1]

ITD and ILD

The auditory space of binaural hearing is constructed from the analysis of two binaural cues in the horizontal plane: the difference in sound level at the two ears, or ILD, and the difference in arrival time at the two ears, or ITD. Together these allow the sounds heard at each eardrum to be compared.[1][3] ITD is processed in the MSO and results from a sound arriving earlier at one ear than at the other; this occurs when the sound does not originate directly in front of or directly behind the listener. ILD is processed in the LSO and results from the shadowing effect of the head at the ear that is farther from the sound source. Outputs from the SOC are targeted to the dorsal nucleus of the lateral lemniscus as well as the IC.[3]
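To give a sense of the size of the ITD cue, the sketch below uses Woodworth's spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), which is a standard textbook model and is not taken from the sources cited above. The head radius of 8.75 cm and the speed of sound of 343 m/s are conventional assumed values, and the formula is applied only to a distant source at an azimuth θ between −90° and 90° (0° is straight ahead).

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average adult head radius
SPEED_OF_SOUND_M_S = 343.0   # assumed speed of sound in air at about 20 °C


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a distant source, using a rigid spherical head."""
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("this approximation covers azimuths from -90 to 90 degrees")
    theta = math.radians(azimuth_deg)
    # Extra path to the far ear: a straight segment (sin term) plus an arc around the head (theta term).
    return (head_radius / c) * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    print(f"{azimuth:3d} deg -> {woodworth_itd(azimuth) * 1e6:.0f} microseconds")
```

Under these assumptions the ITD is zero for a source straight ahead and grows to roughly 0.66 ms for a source directly to one side, which is why resolving this cue requires sub-millisecond timing precision.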

Lateral superior olive

LSO neurons are excited by inputs from one ear and inhibited by inputs from the other, and are therefore referred to as IE neurons. Excitatory inputs are received at the LSO from spherical bushy cells of the ipsilateral cochlear nucleus, which combine inputs coming from several auditory nerve fibers. Inhibitory inputs are received at the LSO from globular bushy cells of the contralateral cochlear nucleus.[3]
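The excitatory-inhibitory arrangement can be caricatured as a subtraction: the more the level at the exciting ear exceeds the level at the inhibiting ear, the more strongly the neuron fires. The sketch below is a toy rate model and not a description from the cited sources; the function name, the sigmoid form, and every parameter value are assumptions chosen only for illustration.

```python
import math


def lso_rate(excitatory_db, inhibitory_db, max_rate=100.0, slope_db=5.0):
    """Toy firing rate (spikes per second) of an LSO-like unit.

    excitatory_db: sound level at the ear that excites the unit
    inhibitory_db: sound level at the ear that inhibits the unit
    """
    level_difference = excitatory_db - inhibitory_db
    # A sigmoid maps the level difference onto a bounded firing rate (assumed shape).
    return max_rate / (1.0 + math.exp(-level_difference / slope_db))


for excit, inhib in ((50, 70), (60, 60), (70, 50)):
    print(f"excitatory {excit} dB, inhibitory {inhib} dB -> {lso_rate(excit, inhib):.1f} spikes/s")
```

In this toy model equal levels at the two ears give half the maximum rate, and the output depends only on the difference between the two levels, which is the sense in which such a unit encodes ILD.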

Medial superior olive

MSO neurons are excited bilaterally, meaning that they are excited by inputs from both ears, and they are therefore referred to as EE neurons.[3] Fibers from the left cochlear nucleus terminate on the left of MSO neurons, and fibers from the right cochlear nucleus terminate on the right of MSO neurons.[13] Excitatory inputs to the MSO from spherical bushy cells are mediated by glutamate, and inhibitory inputs to the MSO from globular bushy cells are mediated by glycine. MSO neurons extract ITD information from binaural inputs and resolve small differences in the time of arrival of sounds at each ear.[3] Outputs from the MSO and LSO are sent via the lateral lemniscus to the IC, which integrates the spatial localization of sound. In the IC, acoustic cues have been processed and filtered into separate streams, forming the basis of auditory object recognition.[3]

Binaural fusion abnormalities in autism

Research is ongoing into the dysfunction of binaural fusion in individuals with autism. Autism, a neurological disorder, is associated with many symptoms of impaired brain function, including hearing loss, both unilateral and bilateral.[16] Individuals with autism who experience hearing loss exhibit symptoms such as difficulty listening in background noise and impaired sound localization. Both the ability to distinguish particular speakers from background noise and the process of sound localization are key products of binaural fusion. They are particularly related to the proper function of the SOC, and there is increasing evidence that morphological abnormalities within the brainstem, namely in the SOC, of autistic individuals are a cause of the hearing difficulties.[17] The neurons of the MSO of individuals with autism display atypical anatomical features, including atypical cell shape and orientation of the cell body as well as stellate and fusiform formations.[18] Data also suggest that neurons of the LSO and MNTB show distinct dysmorphology in autistic individuals, such as irregular stellate and fusiform shapes and a smaller than normal size. Moreover, a significant depletion of SOC neurons is seen in the brainstem of autistic individuals. All of these structures play a crucial role in the proper functioning of binaural fusion, so their dysmorphology may be at least partially responsible for the incidence of these auditory symptoms in autistic patients.[17]

References

- Grothe, Benedikt; Koch, Ursula (2011). "Dynamics of binaural processing in the mammalian sound localization pathway--the role of GABA(B) receptors". Hearing Research. 279 (1–2): 43–50. doi:10.1016/j.heares.2011.03.013. PMID 21447375. S2CID 7196476.

- Schwartz, Andrew; McDermott, Josh (2012). "Spatial cues alone produce inaccurate sound segregation: The effect of inter aural time differences". Journal of the Acoustical Society of America. 132 (1): 357–368. Bibcode:2012ASAJ..132..357S. doi:10.1121/1.4718637. PMC 3407160. PMID 22779483.

- Grothe, Benedikt; Pecka, Michael; McAlpine, David (2010). "Mechanisms of sound localization in mammals". Physiol Rev. 90 (3): 983–1012. doi:10.1152/physrev.00026.2009. PMID 20664077.

- Tyler, R.S.; Dunn, C.C.; Witt, S.A.; Preece, J.P. (2003). "Update on bilateral cochlear implantation". Current Opinion in Otolaryngology & Head and Neck Surgery. 11 (5): 388–393. doi:10.1097/00020840-200310000-00014. PMID 14502072. S2CID 7209119.

- Lim, DJ (1980). "Cochlear anatomy related to cochlear micromechanics. A review". J. Acoust. Soc. Am. 67 (5): 1686–1695. Bibcode:1980ASAJ...67.1686L. doi:10.1121/1.384295. PMID 6768784.

- Moore, JK (2000). "Organization of the human superior olivary complex". Microsc Res Tech. 51 (4): 403–412. doi:10.1002/1097-0029(20001115)51:4<403::AID-JEMT8>3.0.CO;2-Q. PMID 11071722. S2CID 10151612.

- Cant, Nell B; Benson, Christina G (2003). "Parallel auditory pathways: projection patterns of the different neuronal populations in the dorsal and ventral cochlear nuclei". Brain Research Bulletin. 60 (5–6): 457–474. doi:10.1016/s0361-9230(03)00050-9. PMID 12787867. S2CID 42563918.

- Herrero, Maria-Trinidad; Barcia, Carlos; Navarro, Juana Mari (2002). "Functional anatomy of thalamus and basal ganglia". Child's Nerv Syst. 18 (8): 386–404. doi:10.1007/s00381-002-0604-1. PMID 12192499. S2CID 8237423.

- Twefik, Ted L (2019-10-19). "Auditory System Anatomy".

- Masterton, R.B. (1992). "Role of the central auditory system in hearing: the new direction". Trends in Neurosciences. 15 (8): 280–285. doi:10.1016/0166-2236(92)90077-l. PMID 1384196. S2CID 4024835.

- Litovsky, R.; Colburn, H.; Yost, W. (1999). "The Precedence Effect". Journal of the Acoustical Society of America. 106 (4 Pt 1): 1633–1654. Bibcode:1999ASAJ..106.1633L. doi:10.1121/1.427914. PMID 10530009.

- Simon, E.; Perrot, E.; Mertens, P. (2009). "Functional anatomy of the cochlear nerve and the central auditory system". Neurochirurgie. 55 (2): 120–126. doi:10.1016/j.neuchi.2009.01.017. PMID 19304300.

- Eldredge, D.H.; Miller, J.D. (1971). "Physiology of hearing". Annu. Rev. Physiol. 33: 281–310. doi:10.1146/annurev.ph.33.030171.001433. PMID 4951051.

- Guinan, JJ; Norris, BE; Guinan, SS (1972). "Single auditory units in the superior olivary complex II: Locations of unit categories and tonotopic organization". Int J Neurosci. 4 (4): 147–166. doi:10.3109/00207457209164756.

- Forsythe, Ian D. "Excitatory and inhibitory transmission in the superior olivary complex" (PDF).

- Rosenhall, U; Nordin, V; Sandstrom, M (1999). "Autism and hearing loss". J Autism Dev Disord. 29 (5): 349–357. doi:10.1023/A:1023022709710. PMID 10587881. S2CID 18700224.

- Kulesza Jr., Randy J.; Lukose, Richard; Stevens, Lisa Veith (2011). "Malformation of the human superior olive in autism spectrum disorders". Brain Research. 1367: 360–371. doi:10.1016/j.brainres.2010.10.015. PMID 20946889. S2CID 39753895.

- Kulesza, RJ; Mangunay, K (2008). "Morphological features of the medial superior olive in autism". Brain Res. 1200: 132–137. doi:10.1016/j.brainres.2008.01.009. PMID 18291353. S2CID 7388703.

External links

- Saberi, Kourosh; Farahbod, Haleh; Konishi, Masakazu (26 May 1998). "How do owls localize interaurally phase-ambiguous signals?". Proceedings of the National Academy of Sciences. 95 (11): 6465–6468. Bibcode:1998PNAS...95.6465S. doi:10.1073/pnas.95.11.6465. PMC 27804. PMID 9600989.

- Moncrieff, Deborah (2002-12-02). "Binaural Integration: An Overview". audiologyonline.com. Retrieved 2018-03-11.