Vulnerability (computing)

Vulnerabilities are flaws in a computer system that weaken the overall security of the device/system. Vulnerabilities can be weaknesses in either the hardware itself, or the software that runs on the hardware. Vulnerabilities can be exploited by a threat actor, such as an attacker, to cross privilege boundaries (i.e. perform unauthorized actions) within a computer system. To exploit a vulnerability, an attacker must have at least one applicable tool or technique that can connect to a system weakness. In this frame, vulnerabilities are also known as the attack surface.

Vulnerability management is a cyclical practice that varies in theory but contains common processes: discover all assets, prioritize assets, assess or perform a complete vulnerability scan, report on results, remediate vulnerabilities, verify remediation, and repeat. This practice generally refers to software vulnerabilities in computing systems.[1] Agile vulnerability management refers to preventing attacks by identifying all vulnerabilities as quickly as possible.[2]

A security risk is often incorrectly classified as a vulnerability, and using "vulnerability" to mean "risk" can lead to confusion. Risk is the potential for significant impact resulting from the exploitation of a vulnerability. A vulnerability can therefore exist without risk, for example when the affected asset has no value. A vulnerability with one or more known instances of working and fully implemented attacks is classified as an exploitable vulnerability: a vulnerability for which an exploit exists. The window of vulnerability is the time from when the security hole was introduced or manifested in deployed software to when access was removed, a security fix was available or deployed, or the attacker was disabled (see zero-day attack).

A security bug (security defect) is a narrower concept, because some vulnerabilities are not related to software: hardware, site, and personnel vulnerabilities are examples of vulnerabilities that are not software security bugs.

Constructs in programming languages that are difficult to use properly can manifest large numbers of vulnerabilities.

Definitions

ISO 27005 defines vulnerability as:[3]

- A weakness of an asset or group of assets that can be exploited by one or more threats, where an asset is anything that has value to the organization, its business operations, and their continuity, including information resources that support the organization's mission[4]

IETF RFC 4949 defines vulnerability as:[5]

- A flaw or weakness in a system's design, implementation, or operation and management that could be exploited to violate the system's security policy

The Committee on National Security Systems of the United States of America defined vulnerability in CNSS Instruction No. 4009, dated 26 April 2010, National Information Assurance Glossary:[6]

- Vulnerability—Weakness in an information system, system security procedures, internal controls, or implementation that could be exploited by a threat source.

Many NIST publications define vulnerability in an IT context; the FISMApedia[7] entry for the term[8] provides a list. Among them, SP 800-30[9] gives a broader definition:

- A flaw or weakness in system security procedures, design, implementation, or internal controls that could be exercised (accidentally triggered or intentionally exploited) and result in a security breach or a violation of the system's security policy.

ENISA defines vulnerability[10] as:

- The existence of a weakness, design, or implementation error that can lead to an unexpected, undesirable event [G.11] compromising the security of the computer system, network, application, or protocol involved.(ITSEC)

The Open Group defines vulnerability[11] as:

- The probability that threat capability exceeds the ability to resist the threat.

Factor Analysis of Information Risk (FAIR) defines vulnerability as:[12]

- The probability that an asset will be unable to resist the actions of a threat agent

According to FAIR, vulnerability is related to control strength, i.e. the strength of a control as compared to a standard measure of force, and to threat capability, i.e. the probable level of force that a threat agent is capable of applying against an asset.

ISACA defines vulnerability in the Risk IT framework as:
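The probabilistic reading used by The Open Group and FAIR can be illustrated with a small simulation. The following is a minimal sketch, not part of either standard: it estimates the probability that a sampled threat capability exceeds a sampled control strength. The 0 to 100 "force" scale, the uniform distributions, and all function names are assumptions chosen only for illustration.

```python
import random


def estimate_vulnerability(sample_threat, sample_control, trials=10_000, seed=0):
    """Estimate P(threat capability > control strength) by Monte Carlo sampling.

    sample_threat and sample_control are callables that take a
    random.Random instance and return one sampled level of force.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    hits = 0
    for _ in range(trials):
        if sample_threat(rng) > sample_control(rng):
            hits += 1
    return hits / trials


# Hypothetical distributions on an arbitrary 0-100 scale (assumptions).
def threat_capability(rng):
    return rng.uniform(20, 80)


def control_strength(rng):
    return rng.uniform(40, 100)


vulnerability = estimate_vulnerability(threat_capability, control_strength)
print(f"Estimated vulnerability: {vulnerability:.2f}")
```

In this sketch a stronger control shifts the second distribution upward and lowers the estimate, which mirrors the relationship between control strength and threat capability described above.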

- A weakness in design, implementation, operation or internal control

Data and Computer Security: Dictionary of Standards Concepts and Terms, by Dennis Longley and Michael Shain (Stockton Press, ISBN 0-935859-17-9), defines vulnerability as:

- 1) In computer security, a weakness in automated systems security procedures, administrative controls, Internet controls, etc., that could be exploited by a threat to gain unauthorized access to information or to disrupt critical processing. 2) In computer security, a weakness in the physical layout, organization, procedures, personnel, management, administration, hardware or software that may be exploited to cause harm to the ADP system or activity. 3) In computer security, any weakness or flaw existing in a system. The attack or harmful event, or the opportunity available to a threat agent to mount that attack.

Matt Bishop and Dave Bailey[13] give the following definition of computer vulnerability:

- A computer system is composed of states describing the current configuration of the entities that make up the computer system. The system computes through the application of state transitions that change the state of the system. All states reachable from a given initial state using a set of state transitions fall into the class of authorized or unauthorized, as defined by a security policy. In this paper, the definitions of these classes and transitions is considered axiomatic. A vulnerable state is an authorized state from which an unauthorized state can be reached using authorized state transitions. A compromised state is the state so reached. An attack is a sequence of authorized state transitions which end in a compromised state. By definition, an attack begins in a vulnerable state. A vulnerability is a characterization of a vulnerable state which distinguishes it from all non-vulnerable states. If generic, the vulnerability may characterize many vulnerable states; if specific, it may characterize only one...
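The state-based definition above can be made concrete as a reachability check on a graph. The sketch below is an illustration under stated assumptions, not code from the cited report: the state names and the transition table are hypothetical, and every transition listed is treated as an authorized transition.

```python
from collections import deque


def reachable(start, transitions):
    """Return every state reachable from start, including start itself."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for nxt in transitions.get(state, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def vulnerable_states(initial, transitions, authorized):
    """Authorized states from which some unauthorized state can be reached."""
    result = set()
    for state in reachable(initial, transitions):
        if state not in authorized:
            continue
        if any(s not in authorized for s in reachable(state, transitions)):
            result.add(state)
    return result


# Hypothetical system: states and transitions are assumptions.
transitions = {
    "logged_out": ["logged_in", "halted"],
    "logged_in": ["logged_out", "viewing_own_files", "root_shell"],
    "viewing_own_files": ["logged_in"],
    "halted": [],
    "root_shell": [],
}
authorized = {"logged_out", "logged_in", "viewing_own_files", "halted"}

vulnerable = vulnerable_states("logged_out", transitions, authorized)
print(sorted(vulnerable))
```

Here `root_shell` plays the role of the compromised state, and any path of transitions that ends in it corresponds to an attack in the sense of the definition. The `halted` state is authorized but not vulnerable, because no unauthorized state can be reached from it.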

The National Information Assurance Training and Education Center defines vulnerability as:[14][15]

- 1. A weakness in automated system security procedures, administrative controls, internal controls, and so forth, that could be exploited by a threat to gain unauthorized access to information or disrupt critical processing. 2. A weakness in system security procedures, hardware design, internal controls, etc., which could be exploited to gain unauthorized access to classified or sensitive information. 3. A weakness in the physical layout, organization, procedures, personnel, management, administration, hardware, or software that may be exploited to cause harm to the ADP system or activity. The presence of a vulnerability does not in itself cause harm; a vulnerability is merely a condition or set of conditions that may allow the ADP system or activity to be harmed by an attack. 4. An assertion primarily concerning entities of the internal environment (assets); we say that an asset (or class of assets) is vulnerable (in some way, possibly involving an agent or collection of agents); we write: V(i,e) where: e may be an empty set. 5. Susceptibility to various threats. 6. A set of properties of a specific internal entity that, in union with a set of properties of a specific external entity, implies a risk. 7. The characteristics of a system which cause it to suffer a definite degradation (incapability to perform the designated mission) as a result of having been subjected to a certain level of effects in an unnatural (manmade) hostile environment.

Vulnerability and risk factor models

A resource (either physical or logical) may have one or more vulnerabilities that can be exploited by a threat actor. The result can potentially compromise the confidentiality, integrity or availability of resources (not necessarily the vulnerable one) belonging to an organization and/or other parties involved (customers, suppliers). The so-called CIA triad is a cornerstone of Information Security.

An attack can be active when it attempts to alter system resources or affect their operation, compromising integrity or availability. A "passive attack" attempts to learn or make use of information from the system but does not affect system resources, compromising confidentiality.[5]

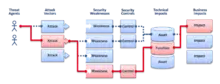

OWASP (see figure) depicts the same phenomenon in slightly different terms: a threat agent through an attack vector exploits a weakness (vulnerability) of the system and the related security controls, causing a technical impact on an IT resource (asset) connected to a business impact.

The overall picture represents the risk factors of the risk scenario.[16]

Information security management system

A set of policies concerned with the information security management system (ISMS) has been developed to manage, according to risk management principles, the countermeasures that ensure a security strategy is set up following the rules and regulations applicable to a given organization. These countermeasures are also called security controls; when applied to the transmission of information, they are called security services.[17]

Classification

Vulnerabilities are classified according to the asset class they are related to:[3]

- hardware

  - susceptibility to humidity or dust

  - susceptibility to unprotected storage

  - age-based wear that causes failure

  - over-heating

- software

  - insufficient testing

  - insecure coding

  - lack of audit trail

  - design flaw

- network

  - unprotected communication lines (e.g. lack of cryptography)

  - insecure network architecture

- personnel

  - inadequate recruiting process

  - inadequate security awareness

  - insider threat

- physical site

  - area subject to natural disasters (e.g. flood, earthquake)

  - interruption of power source

- organizational

  - lack of regular audits

  - lack of continuity plans

  - lack of security

Causes

- Complexity: Large, complex systems increase the probability of flaws and unintended access points.[18]

- Familiarity: Using common, well-known code, software, operating systems, and/or hardware increases the probability an attacker has or can find the knowledge and tools to exploit the flaw.[19]

- Connectivity: The more physical connections, privileges, ports, protocols, and services a system has, and the longer each of them is accessible, the greater its vulnerability.[12]

- Password management flaws: The computer user uses weak passwords that could be discovered by brute force.[20] The computer user stores the password on the computer where a program can access it. Users re-use passwords between many programs and websites.[18]

- Fundamental operating system design flaws: The operating system designer chooses to enforce suboptimal policies on user/program management. For example, operating systems with policies such as default permit grant every program and every user full access to the entire computer.[18] This operating system flaw allows viruses and malware to execute commands on behalf of the administrator.[21]

- Internet website browsing: Some websites may contain harmful spyware or adware that can be installed automatically on computer systems. After visiting those websites, the computer systems become infected, and personal information is collected and passed on to third parties.[22]

- Software bugs: The programmer leaves an exploitable bug in a software program. The software bug may allow an attacker to misuse an application.[18]

- Unchecked user input: The program assumes that all user input is safe. Programs that do not check user input can allow unintended direct execution of commands or SQL statements (known as buffer overflows, SQL injection, or other non-validated inputs).[18]

- Not learning from past mistakes:[23][24] for example, most vulnerabilities discovered in IPv4 protocol software were also found in the new IPv6 implementations.[25]

Research has shown that the most vulnerable point in most information systems is the human user, operator, designer, or other human,[26] so humans should be considered in their different roles as asset, threat, and information resource. Social engineering is an increasing security concern.
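The "unchecked user input" cause listed above can be illustrated with SQL injection. The following is a minimal sketch using Python's standard `sqlite3` module; the `users` table, the function names, and the injected string are hypothetical and chosen only for illustration.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # UNSAFE: user input is concatenated into the SQL text, so quote
    # characters in `name` can change the structure of the statement.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: a parameterized query passes `name` as data, never as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


# In-memory database with hypothetical sample rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

payload = "nobody' OR '1'='1"  # attacker-controlled input
leaked = find_user_unsafe(conn, payload)   # condition becomes always true
blocked = find_user_safe(conn, payload)    # no user has this literal name

print(len(leaked), len(blocked))
```

The unsafe version returns every row because the injected `OR '1'='1'` clause makes the condition true for all rows, while the parameterized version treats the whole payload as a literal name and matches nothing.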

Consequences

The impact of a security breach can be very high.[27] Most legislation treats the failure of IT managers to address known vulnerabilities in IT systems and applications as misconduct; IT managers have a responsibility to manage IT risk.[28] Privacy law forces managers to act to reduce the impact or likelihood of that security risk. An information technology security audit lets independent parties certify that the IT environment is managed properly, which lessens the managers' responsibility or at least demonstrates good faith. A penetration test is a form of verification of the weaknesses and countermeasures adopted by an organization: a white hat hacker tries to attack an organization's information technology assets to find out how easy or difficult it is to compromise the IT security.[29] The proper way to professionally manage IT risk is to adopt an information security management system, such as ISO/IEC 27002 or Risk IT, and follow it according to the security strategy set forth by upper management.[17]

One of the key concepts of information security is the principle of defence in depth, i.e. setting up a multilayer defense system that can:[27]

- prevent the exploit

- detect and intercept the attack

- find out the threat agents and prosecute them

An intrusion detection system is an example of a class of systems used to detect attacks.

Physical security is a set of measures to physically protect an information asset: it is widely accepted that an attacker who gains physical access to an information asset can access any information on it or make the resource unavailable to its legitimate users.

Sets of criteria that a computer, its operating system, and its applications must satisfy to meet a good security level have been developed: ITSEC and Common Criteria are two examples.

Vulnerability disclosure

Coordinated disclosure of vulnerabilities (some refer to it as "responsible disclosure", but others consider that a biased term) is a topic of great debate. As reported by The Tech Herald in August 2010, "Google, Microsoft, TippingPoint, and Rapid7 have issued guidelines and statements addressing how they will deal with disclosure going forward."[30] The other method is typically full disclosure, in which all the details of a vulnerability are publicized, sometimes with the intent to put pressure on the software author to publish a fix more quickly. In January 2015, when Google revealed a Microsoft vulnerability before Microsoft released a patch to fix it, a Microsoft representative called for coordinated practices among software companies in revealing disclosures.[31]

Vulnerability inventory

Mitre Corporation maintains an incomplete list of publicly disclosed vulnerabilities in a system called Common Vulnerabilities and Exposures (CVE). This information is immediately shared with the National Institute of Standards and Technology (NIST), where each vulnerability is given a risk score using the Common Vulnerability Scoring System (CVSS) and is described using the Common Platform Enumeration (CPE) scheme and the Common Weakness Enumeration (CWE).

Cloud service providers often do not list security issues in their services using the CVE system.[32] There is currently no universal standard for cloud computing vulnerability enumeration or severity assessment, and no unified tracking mechanism.[33] The Open CVDB initiative is a community-driven centralized cloud vulnerability database that catalogs CSP vulnerabilities and lists the steps users can take to detect or prevent these issues in their own environments.[34]

OWASP maintains a list of vulnerability classes with the aim of educating system designers and programmers, thereby reducing the likelihood of vulnerabilities being written unintentionally into the software.[35]
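A CVSS score is commonly reported together with a qualitative severity rating. The following is a minimal sketch that maps a CVSS v3.x base score to the rating bands of the CVSS v3 qualitative severity scale (None, Low, Medium, High, Critical) and sorts a list of findings by score. The function name and the finding identifiers are hypothetical.

```python
def cvss_v3_severity(score):
    """Map a CVSS v3.x base score (0.0-10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"        # 0.1 - 3.9
    if score < 7.0:
        return "Medium"     # 4.0 - 6.9
    if score < 9.0:
        return "High"       # 7.0 - 8.9
    return "Critical"       # 9.0 - 10.0


# Hypothetical findings as (identifier, base score) pairs.
findings = [("EXAMPLE-1", 5.3), ("EXAMPLE-2", 9.8), ("EXAMPLE-3", 2.1)]

# Highest score first, as a simple remediation ordering.
triaged = sorted(findings, key=lambda item: item[1], reverse=True)
for identifier, score in triaged:
    print(identifier, score, cvss_v3_severity(score))
```

Sorting by base score alone is a simplification: as noted earlier in the article, risk also depends on the value of the affected asset, which a base score does not capture.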

Vulnerability disclosure date

The time of disclosure of a vulnerability is defined differently in the security community and industry. It is most commonly referred to as "a kind of public disclosure of security information by a certain party". Usually, vulnerability information is discussed on a mailing list or published on a security web site and results in a security advisory afterward.

The time of disclosure is the first date a security vulnerability is described on a channel where the disclosed information on the vulnerability fulfills the following requirements:

- The information is freely available to the public

- The vulnerability information is published by a trusted and independent channel/source

- The vulnerability has undergone analysis by experts such that risk rating information is included upon disclosure
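The three requirements above can be read as a filter over the publications that mention a vulnerability, with the disclosure date being the earliest publication that passes. The following is a minimal sketch of that reading; the `Publication` record, its field names, and the sample dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Publication:
    published: date
    freely_available: bool            # open to the public
    trusted_independent_source: bool  # trusted and independent channel
    includes_risk_rating: bool        # expert analysis with a risk rating


def disclosure_date(publications):
    """Return the earliest date meeting all three requirements, or None."""
    qualifying = [
        p.published
        for p in publications
        if p.freely_available
        and p.trusted_independent_source
        and p.includes_risk_rating
    ]
    return min(qualifying) if qualifying else None


# Hypothetical timeline for one vulnerability.
timeline = [
    Publication(date(2020, 1, 5), True, False, False),   # mailing-list post
    Publication(date(2020, 1, 9), True, True, True),     # security advisory
    Publication(date(2020, 1, 20), True, True, True),    # later write-up
]

print(disclosure_date(timeline))
```

Under this reading, an early mailing-list discussion does not set the disclosure date unless it also comes from a trusted, independent source and carries a risk rating.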

Identifying and removing vulnerabilities

Many software tools exist that can aid in the discovery (and sometimes removal) of vulnerabilities in a computer system. Though these tools can provide an auditor with a good overview of possible vulnerabilities present, they cannot replace human judgment. Relying solely on scanners will yield false positives and a limited-scope view of the problems present in the system.

Vulnerabilities have been found in every major operating system,[36] including Windows, macOS, various forms of Unix and Linux, OpenVMS, and others. The only way to reduce the chance of a vulnerability being used against a system is through constant vigilance, including careful system maintenance (e.g. applying software patches), best practices in deployment (e.g. the use of firewalls and access controls) and auditing (both during development and throughout the deployment lifecycle).

Locations in which vulnerabilities manifest

Vulnerabilities are related to and can manifest in:

- physical environment of the system

- the personnel (i.e. employees, management)

- administration procedures and security policy

- business operation and service delivery

- hardware including peripheral devices [37] [38]

- software (i.e. on premises or in cloud)

- connectivity (i.e. communication equipment and facilities)

It is evident that a purely technical approach cannot always protect physical assets: administrative procedures are needed to let maintenance personnel enter the facilities, along with people who have adequate knowledge of the procedures and are motivated to follow them with proper care. Technical protections also do not necessarily stop social engineering attacks.

Examples of vulnerabilities:

- an attacker finds and uses a buffer overflow weakness to install malware to then exfiltrate sensitive data;

- an attacker convinces a user to open an email message with attached malware;

- a flood damages one's computer systems installed at ground floor.

Software vulnerabilities

Common types of software flaws that lead to vulnerabilities include:

- Memory safety violations, such as:

  - Buffer overflows and over-reads

  - Dangling pointers

- Input validation errors, such as:

  - Code injection

  - Cross-site scripting in web applications

  - Directory traversal

  - E-mail injection

  - Format string attacks

  - HTTP header injection

  - HTTP response splitting

  - SQL injection

- Privilege-confusion bugs, such as:

  - Clickjacking

  - Cross-site request forgery in web applications

  - FTP bounce attack

  - Privilege escalation

- Race conditions, such as:

  - Symlink races

  - Time-of-check-to-time-of-use bugs

- Side-channel attack

  - Timing attack

- User interface failures, such as:

  - Blaming the victim: prompting a user to make a security decision without giving the user enough information to answer it[39]

  - Race conditions[40][41]

  - Warning fatigue[42] or user conditioning
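Directory traversal, one of the input validation errors listed above, arises when a user-supplied path is joined to a base directory without checking where the result points. The following is a minimal sketch of one common mitigation using Python's standard `pathlib`; the function name `safe_join` and the `public` directory are hypothetical.

```python
from pathlib import Path


def safe_join(base_dir, user_path):
    """Join user_path onto base_dir, rejecting paths that escape base_dir."""
    base = Path(base_dir).resolve()
    # resolve() collapses ".." components and follows existing symlinks.
    candidate = (base / user_path).resolve()
    try:
        # relative_to() raises ValueError if candidate is outside base.
        candidate.relative_to(base)
    except ValueError:
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate


print(safe_join("public", "docs/readme.txt"))

try:
    safe_join("public", "../../etc/passwd")
except ValueError as error:
    print("rejected:", error)
```

The check is performed on the resolved path rather than on the raw string, because filtering for the text `..` alone can miss absolute paths and symbolic links.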

Several sets of coding guidelines have been developed, and a large number of static code analyzers have been used to verify that code follows the guidelines.
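The idea of checking code against a guideline can be illustrated with a very small static check. The following is a minimal sketch, not a real analyzer: it uses Python's standard `ast` module to flag direct calls to `eval` and `exec`, a rule assumed here purely as an example guideline. The function and variable names are hypothetical.

```python
import ast

# Hypothetical guideline: these built-ins must not be called directly.
BANNED_CALLS = {"eval", "exec"}


def find_banned_calls(source):
    """Return sorted (line number, function name) pairs for banned calls."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in BANNED_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


sample = "x = input()\nresult = eval(x)\nprint(result)\n"
findings = find_banned_calls(sample)
print(findings)
```

The check inspects the syntax tree without running the program, which is what distinguishes static analysis from testing. It only catches direct calls by name, so aliased or indirect calls would pass unnoticed, one reason such tools cannot replace human review.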

See also

- Browser security

- Computer emergency response team

- Information security

- Internet security

- Mobile security

- Vulnerability scanner

- Responsible disclosure

- Full disclosure

References

- "Vulnerability Management Life Cycle | NPCR | CDC". www.cdc.gov. 2019-03-12. Retrieved 2020-07-04.

- Ding, Aaron Yi; De Jesus, Gianluca Limon; Janssen, Marijn (2019). "Ethical hacking for boosting IoT vulnerability management: a first look into bug bounty programs and responsible disclosure". Proceedings of the Eighth International Conference on Telecommunications and Remote Sensing - ICTRS '19. Ictrs '19. Rhodes, Greece: ACM Press: 49–55. arXiv:1909.11166. doi:10.1145/3357767.3357774. ISBN 978-1-4503-7669-3. S2CID 202676146.

- ISO/IEC, "Information technology -- Security techniques-Information security risk management" ISO/IEC FIDIS 27005:2008

- British Standard Institute, Information technology -- Security techniques -- Management of the information and communications technology security -- Part 1: Concepts and models for information and communications technology security management BS ISO/IEC 13335-1-2004

- Internet Engineering Task Force RFC 4949 Internet Security Glossary, Version 2

- "CNSS Instruction No. 4009" (PDF). 26 April 2010. Archived from the original (PDF) on 2013-06-28.

- "FISMApedia". fismapedia.org.

- "Term:Vulnerability". fismapedia.org.

- NIST SP 800-30 Risk Management Guide for Information Technology Systems

- "Glossary". europa.eu.

- Technical Standard Risk Taxonomy ISBN 1-931624-77-1 Document Number: C081 Published by The Open Group, January 2009.

- "An Introduction to Factor Analysis of Information Risk (FAIR)", Risk Management Insight LLC, November 2006. Archived 2014-11-18 at the Wayback Machine.

- Matt Bishop and Dave Bailey. A Critical Analysis of Vulnerability Taxonomies. Technical Report CSE-96-11, Department of Computer Science at the University of California at Davis, September 1996

- Schou, Corey (1996). Handbook of INFOSEC Terms, Version 2.0. CD-ROM (Idaho State University & Information Systems Security Organization)

- NIATEC Glossary

- ISACA THE RISK IT FRAMEWORK (registration required) Archived July 5, 2010, at the Wayback Machine

- Wright, Joe; Harmening, Jim (2009). "15". In Vacca, John (ed.). Computer and Information Security Handbook. Morgan Kaufmann Publications. Elsevier Inc. p. 257. ISBN 978-0-12-374354-1.

- Kakareka, Almantas (2009). "23". In Vacca, John (ed.). Computer and Information Security Handbook. Morgan Kaufmann Publications. Elsevier Inc. p. 393. ISBN 978-0-12-374354-1.

- Krsul, Ivan (April 15, 1997). "Technical Report CSD-TR-97-026". The COAST Laboratory Department of Computer Sciences, Purdue University. CiteSeerX 10.1.1.26.5435.

- Pauli, Darren (16 January 2017). "Just give up: 123456 is still the world's most popular password". The Register. Retrieved 2017-01-17.

- "The Six Dumbest Ideas in Computer Security". ranum.com.

- "The Web Application Security Consortium / Web Application Security Statistics". webappsec.org.

- Ross Anderson. Why Cryptosystems Fail. Technical report, University Computer Laboratory, Cambridge, January 1994.

- Neil Schlager. When Technology Fails: Significant Technological Disasters, Accidents, and Failures of the Twentieth Century. Gale Research Inc., 1994.

- Hacking: The Art of Exploitation Second Edition

- Kiountouzis, E. A.; Kokolakis, S. A. (31 May 1996). Information systems security: facing the information society of the 21st century. London: Chapman & Hall, Ltd. ISBN 0-412-78120-4.

- Rasmussen, Jeremy (February 12, 2018). "Best Practices for Cybersecurity: Stay Cyber SMART". Tech Decisions. Retrieved September 18, 2020.

- "What is a vulnerability? - Knowledgebase - ICTEA". www.ictea.com. Retrieved 2021-04-03.

- Bavisi, Sanjay (2009). "22". In Vacca, John (ed.). Computer and Information Security Handbook. Morgan Kaufmann Publications. Elsevier Inc. p. 375. ISBN 978-0-12-374354-1.

- "The new era of vulnerability disclosure - a brief chat with HD Moore". The Tech Herald. Archived from the original on 2010-08-26. Retrieved 2010-08-24.

- Betz, Chris (11 Jan 2015). "A Call for Better Coordinated Vulnerability Disclosure - MSRC - Site Home - TechNet Blogs". blogs.technet.com. Retrieved 12 January 2015.

- "Wiz launches open database to track cloud vulnerabilities". SearchSecurity. Retrieved 2022-07-20.

- Barth, Bradley (2022-06-08). "Centralized database will help standardize bug disclosure for the cloud". www.scmagazine.com. Retrieved 2022-07-20.

- Vijayan, Jai (2022-06-28). "New Vulnerability Database Catalogs Cloud Security Issues". Dark Reading. Retrieved 2022-07-20.

- "Category:Vulnerability". owasp.org.

- David Harley (10 March 2015). "Operating System Vulnerabilities, Exploits and Insecurity". Retrieved 15 January 2019.

- Most laptops vulnerable to attack via peripheral devices. http://www.sciencedaily.com/releases/2019/02/190225192119.htm Source: University of Cambridge

- Exploiting Network Printers. Institute for IT-Security, Ruhr University Bochum

- Archived October 21, 2007, at the Wayback Machine

- "Jesse Ruderman » Race conditions in security dialogs". squarefree.com.

- "lcamtuf's blog". lcamtuf.blogspot.com. 16 August 2010.

- "Warning Fatigue". freedom-to-tinker.com.

External links

Media related to Vulnerability (computing) at Wikimedia Commons

- Security advisories links from the Open Directory http://dmoz-odp.org/Computers/Security/Advisories_and_Patches/