Stepwise regression is a method of regression modeling in which the choice of predictive variables is carried out by an automatic procedure. Usually, this takes the form of a forward, backward, or combined sequence of F-tests or t-tests.

Stepwise Regression

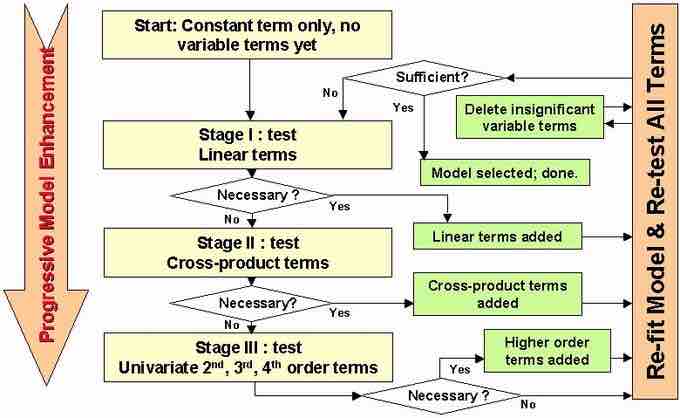

An example of stepwise regression in engineering, where necessity and sufficiency are usually determined by F-tests.

Main Approaches

- Forward selection involves starting with no variables in the model, testing the addition of each variable using a chosen model comparison criterion, adding the variable (if any) that improves the model the most, and repeating this process until none improves the model.

- Backward elimination involves starting with all candidate variables, testing the deletion of each variable using a chosen model comparison criterion, deleting the variable (if any) whose removal improves the model the most, and repeating this process until no further improvement is possible.

- Bidirectional elimination, a combination of the above, tests at each step for variables to be included or excluded.
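The forward and backward procedures above can be sketched in Python using only the standard library. This is a minimal sketch, not a reference implementation: it assumes the model comparison criterion is the Gaussian AIC, $n \ln(\mathrm{RSS}/n) + 2(k + 1)$ for $k$ predictors plus an intercept (lower is better), and fits each candidate model by ordinary least squares through the normal equations. The helper names (`solve`, `rss`, `aic`, `forward_selection`, `backward_elimination`) and the synthetic data are hypothetical.

```python
import math
import random


def solve(A, r):
    """Solve the square system A b = r by Gaussian elimination with partial pivoting."""
    k = len(r)
    M = [row[:] + [r[i]] for i, row in enumerate(A)]
    for c in range(k):
        p = max(range(c, k), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, k):
            f = M[i][c] / M[c][c]
            for j in range(c, k + 1):
                M[i][j] -= f * M[c][j]
    b = [0.0] * k
    for i in range(k - 1, -1, -1):
        b[i] = (M[i][k] - sum(M[i][j] * b[j] for j in range(i + 1, k))) / M[i][i]
    return b


def rss(cols, y):
    """Residual sum of squares of an OLS fit of y on an intercept plus `cols`."""
    n = len(y)
    X = [[1.0] * n] + cols
    k = len(X)
    A = [[sum(X[i][t] * X[j][t] for t in range(n)) for j in range(k)] for i in range(k)]
    r = [sum(X[i][t] * y[t] for t in range(n)) for i in range(k)]
    b = solve(A, r)
    return sum((y[t] - sum(b[j] * X[j][t] for j in range(k))) ** 2 for t in range(n))


def aic(X, y, subset):
    """Gaussian AIC up to an additive constant (assumed criterion); lower is better."""
    n = len(y)
    return n * math.log(rss([X[j] for j in subset], y) / n) + 2 * (len(subset) + 1)


def forward_selection(X, y):
    selected, best = [], aic(X, y, [])
    while len(selected) < len(X):
        # Score every single-variable addition and keep the best one.
        score, j = min((aic(X, y, selected + [j]), j) for j in range(len(X)) if j not in selected)
        if score >= best:  # no addition improves the criterion: stop
            break
        selected.append(j)
        best = score
    return sorted(selected)


def backward_elimination(X, y):
    selected = list(range(len(X)))
    best = aic(X, y, selected)
    while selected:
        # Score every single-variable deletion and keep the best one.
        score, j = min((aic(X, y, [s for s in selected if s != j]), j) for j in selected)
        if score >= best:  # no deletion improves the criterion: stop
            break
        selected.remove(j)
        best = score
    return sorted(selected)


# Hypothetical synthetic data: 4 candidate predictors, only x0 and x2 affect y.
rng = random.Random(0)
n = 60
X = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(4)]
y = [2 + 3 * X[0][t] - 2 * X[2][t] + rng.gauss(0, 0.5) for t in range(n)]

print("forward: ", forward_selection(X, y))
print("backward:", backward_elimination(X, y))
```

On this data both procedures should keep x0 and x2; whether a pure-noise column also slips in depends on the random draw. Bidirectional elimination would merge the two loops, scoring both additions and deletions at every step.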

Another approach is an automatic procedure for statistical model selection intended for cases where there is a large number of potential explanatory variables and no underlying theory on which to base the model selection. It is a variation on forward selection: at each stage a new variable is added, and then a test checks whether some variables can be deleted without appreciably increasing the residual sum of squares (RSS).
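This forward-selection variant with a deletion check can be sketched as follows. The sketch assumes partial F-statistics as the test: a candidate enters if its F-to-enter exceeds `f_in`, and after each addition any variable whose F-to-remove falls below `f_out` (meaning its removal would not appreciably increase the RSS) is deleted. The thresholds 4.0 and 3.9, the `max_steps` guard, the function names, and the data are illustrative assumptions. Keeping `f_out` below `f_in` means a variable that has just entered cannot be removed immediately, because its F-to-remove at that moment equals its F-to-enter.

```python
import random


def solve(A, r):
    """Solve the square system A b = r by Gaussian elimination with partial pivoting."""
    k = len(r)
    M = [row[:] + [r[i]] for i, row in enumerate(A)]
    for c in range(k):
        p = max(range(c, k), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, k):
            f = M[i][c] / M[c][c]
            for j in range(c, k + 1):
                M[i][j] -= f * M[c][j]
    b = [0.0] * k
    for i in range(k - 1, -1, -1):
        b[i] = (M[i][k] - sum(M[i][j] * b[j] for j in range(i + 1, k))) / M[i][i]
    return b


def rss(cols, y):
    """Residual sum of squares of an OLS fit of y on an intercept plus `cols`."""
    n = len(y)
    X = [[1.0] * n] + cols
    k = len(X)
    A = [[sum(X[i][t] * X[j][t] for t in range(n)) for j in range(k)] for i in range(k)]
    r = [sum(X[i][t] * y[t] for t in range(n)) for i in range(k)]
    b = solve(A, r)
    return sum((y[t] - sum(b[j] * X[j][t] for j in range(k))) ** 2 for t in range(n))


def stepwise(X, y, f_in=4.0, f_out=3.9, max_steps=50):
    """Forward steps with an F-to-enter test, each followed by F-to-remove checks."""
    n = len(y)
    selected = []
    for _ in range(max_steps):
        base = rss([X[i] for i in selected], y)
        best_f, best_j = 0.0, None
        for j in range(len(X)):
            if j in selected:
                continue
            new = rss([X[i] for i in selected + [j]], y)
            df = n - len(selected) - 2  # residual df of the enlarged model (with intercept)
            f = (base - new) / (new / df)
            if f > best_f:
                best_f, best_j = f, j
        if best_j is None or best_f < f_in:
            break  # no candidate clears the F-to-enter threshold
        selected.append(best_j)
        # Deletion check: drop variables whose removal barely increases the RSS.
        while selected:
            full = rss([X[i] for i in selected], y)
            df = n - len(selected) - 1  # residual df of the current model
            worst_f, worst_j = min(
                ((rss([X[i] for i in selected if i != j], y) - full) / (full / df), j)
                for j in selected
            )
            if worst_f >= f_out:
                break
            selected.remove(worst_j)
    return sorted(selected)


# Hypothetical synthetic data: 5 candidate predictors, only x0 and x2 affect y.
rng = random.Random(1)
n = 60
X = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(5)]
y = [1 + 3 * X[0][t] - 2 * X[2][t] + rng.gauss(0, 0.5) for t in range(n)]
print(stepwise(X, y))
```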

Selection Criterion

One of the main issues with stepwise regression is that it searches a large space of possible models, so it is prone to overfitting the data. In other words, stepwise regression will often fit much better in-sample than it does on new out-of-sample data. This problem can be mitigated if the criterion for adding (or deleting) a variable is stringent enough. The key line in the sand is at what can be thought of as the Bonferroni point: namely, how significant the best spurious variable would appear based on chance alone. Unfortunately, a threshold this strict means that many variables that actually carry signal will not be included.
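The Bonferroni point can be made concrete. With $p$ candidate predictors and a family-wise level $\alpha$, the Bonferroni bound puts the two-sided cut-off at the normal quantile $z_{1-\alpha/(2p)}$, and on a t-statistic scale the largest spurious statistic grows roughly like $\sqrt{2\ln p}$. The sketch below uses the standard normal as a large-sample stand-in for the t distribution, an assumption that is reasonable only when the residual degrees of freedom are large; the function names are hypothetical.

```python
import math
from statistics import NormalDist


def bonferroni_cutoff(p, alpha=0.05):
    """Two-sided |z| cut-off such that, by the Bonferroni (union) bound, the chance
    that any of p null predictors exceeds it is at most alpha."""
    return NormalDist().inv_cdf(1 - alpha / (2 * p))


def max_noise_scale(p):
    """Rough asymptotic size of the largest of p null statistics: sqrt(2 ln p)."""
    return math.sqrt(2 * math.log(p))


for p in (10, 100, 1000, 10000):
    print(f"p={p:>5}  Bonferroni |z| > {bonferroni_cutoff(p):.2f}"
          f"  sqrt(2 ln p) = {max_noise_scale(p):.2f}")
```

For $p = 1000$ the cut-off is roughly 4 standard errors, so a genuine predictor with a t-statistic of 3 would be left out, which is the loss of signal described above.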

Model Accuracy

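A way to test for errors in models created by stepwise regression is to not rely on the model's F-statistic, significance, or multiple R, but instead to assess the model against a set of data that was not used to create the model.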
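A minimal holdout check in that spirit fits on one part of the data and computes $R^2$ on rows the fit never saw. The sketch assumes a simple first-`n_train`-rows split and computes out-of-sample $R^2$ against the held-out rows' own mean, which is one of several conventions; the function names and the pure-noise data are hypothetical.

```python
import random


def solve(A, r):
    """Solve the square system A b = r by Gaussian elimination with partial pivoting."""
    k = len(r)
    M = [row[:] + [r[i]] for i, row in enumerate(A)]
    for c in range(k):
        p = max(range(c, k), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, k):
            f = M[i][c] / M[c][c]
            for j in range(c, k + 1):
                M[i][j] -= f * M[c][j]
    b = [0.0] * k
    for i in range(k - 1, -1, -1):
        b[i] = (M[i][k] - sum(M[i][j] * b[j] for j in range(i + 1, k))) / M[i][i]
    return b


def fit(cols, y):
    """OLS coefficients, intercept first, for y regressed on `cols`."""
    n = len(y)
    X = [[1.0] * n] + cols
    k = len(X)
    A = [[sum(X[i][t] * X[j][t] for t in range(n)) for j in range(k)] for i in range(k)]
    r = [sum(X[i][t] * y[t] for t in range(n)) for i in range(k)]
    return solve(A, r)


def r_squared(b, cols, y):
    """R^2 of fixed coefficients b on (cols, y), relative to the mean of this y."""
    n = len(y)
    pred = [b[0] + sum(b[j + 1] * cols[j][t] for j in range(len(cols))) for t in range(n)]
    mean = sum(y) / n
    ss_res = sum((y[t] - pred[t]) ** 2 for t in range(n))
    ss_tot = sum((v - mean) ** 2 for v in y)
    return 1 - ss_res / ss_tot


def holdout_r2(cols, y, n_train):
    """Fit on the first n_train rows; return (in-sample R^2, out-of-sample R^2)."""
    train = [c[:n_train] for c in cols]
    held_out = [c[n_train:] for c in cols]
    b = fit(train, y[:n_train])
    return r_squared(b, train, y[:n_train]), r_squared(b, held_out, y[n_train:])


# Hypothetical pure-noise data: 8 candidate predictors, none related to y.
rng = random.Random(2)
n = 80
noise_cols = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(8)]
noise_y = [rng.gauss(0, 1) for _ in range(n)]
r_in, r_out = holdout_r2(noise_cols, noise_y, n_train=40)
print(f"in-sample R^2 = {r_in:.2f}, out-of-sample R^2 = {r_out:.2f}")
```

With pure-noise predictors the in-sample $R^2$ is typically well above zero, while the out-of-sample value is typically near or below zero.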

Criticism

Stepwise regression procedures are used in data mining, but are controversial. Several points of criticism have been made:

- The tests themselves are biased, since they are based on the same data.

- When estimating the degrees of freedom, the number of independent variables in the selected best fit is smaller than the total number of candidate variables that were searched, so adjusting the $r^2$ value for the number of degrees of freedom using only the final count makes the fit appear better than it is. It is important to consider how many degrees of freedom have been used in the entire model, not just count the number of independent variables in the resulting fit.

- Models that are created may be oversimplifications of the real models in the data.
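A small arithmetic illustration of the degrees-of-freedom point, using the usual adjusted $r^2$, $1 - (1 - r^2)(n - 1)/(n - k - 1)$ for $n$ observations and $k$ predictors plus an intercept. The numbers are hypothetical: $n = 50$, an in-sample $r^2$ of 0.30, 3 variables in the final fit, and 20 candidates searched. Plugging in all 20 candidates is only a crude stand-in for the degrees of freedom the search consumed, not an exact correction.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors plus an intercept."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


n, r2 = 50, 0.30  # hypothetical sample size and in-sample R^2
final_k, candidate_k = 3, 20  # variables kept vs. variables searched (hypothetical)
print(f"counting only the final fit: {adjusted_r2(r2, n, final_k):.3f}")
print(f"counting every candidate:    {adjusted_r2(r2, n, candidate_k):.3f}")
```

With $k = 3$ the adjusted value is about 0.25; with $k = 20$ it is about -0.18, suggesting that the apparent fit could be largely an artifact of the search.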