Auditory cortex

The auditory cortex is the part of the temporal lobe that processes auditory information in humans and many other vertebrates. It is part of the auditory system and performs basic and higher functions in hearing; it may also be involved in language switching.[1][2] It is located bilaterally, roughly at the upper sides of the temporal lobes. In humans, it curves down and onto the medial surface, on the superior temporal plane, within the lateral sulcus, and comprises parts of the transverse temporal gyri and the superior temporal gyrus, including the planum polare and planum temporale (roughly Brodmann areas 41 and 42, and partially 22).[3][4]

| Auditory cortex | |

|---|---|

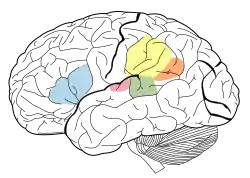

Brodmann areas 41 & 42 of the human brain, part of the auditory cortex | |

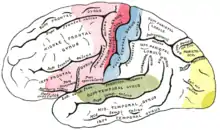

The auditory cortex is highlighted in pink and interacts with the other areas highlighted above | |

| Details | |

| Identifiers | |

| Latin | Cortex auditivus |

| MeSH | D001303 |

| NeuroNames | 1354 |

| FMA | 226221 |

| Anatomical terms of neuroanatomy | |

The auditory cortex takes part in the spectrotemporal analysis (that is, analysis across both time and frequency) of the inputs passed on from the ear. The cortex then filters this information and passes it on to the dual stream of speech processing.[5] The auditory cortex's function may help explain why damage to particular brain regions leads to particular outcomes. For example, unilateral destruction of a region of the auditory pathway above the cochlear nucleus results in slight hearing loss, whereas bilateral destruction results in cortical deafness.

Structure

The auditory cortex was previously subdivided into primary (A1) and secondary (A2) projection areas and further association areas. The modern divisions of the auditory cortex are the core (which includes primary auditory cortex, A1), the belt (secondary auditory cortex, A2), and the parabelt (tertiary auditory cortex, A3). The belt is the area immediately surrounding the core; the parabelt is adjacent to the lateral side of the belt.[6]

Besides receiving input from the ears via lower parts of the auditory system, the auditory cortex also transmits signals back to these areas and is interconnected with other parts of the cerebral cortex. The core (A1) preserves tonotopy, the orderly representation of frequency: it maps low to high frequencies in an order corresponding to the apex and base of the cochlea, respectively.

Data about the auditory cortex have been obtained through studies in rodents, cats, macaques, and other animals. In humans, the structure and function of the auditory cortex have been studied using functional magnetic resonance imaging (fMRI), electroencephalography (EEG), and electrocorticography.[7][8]

Development

As in many areas of the neocortex, the functional properties of the adult primary auditory cortex (A1) depend strongly on the sounds encountered early in life. This has been best studied using animal models, especially cats and rats. In the rat, exposure to a single frequency during postnatal days (P) 11 to 13 can cause a 2-fold expansion in the representation of that frequency in A1.[9] Importantly, the change is persistent, in that it lasts throughout the animal's life, and specific, in that the same exposure outside of that period causes no lasting change in the tonotopy of A1.

In humans, sexual dimorphism in the auditory cortex can be seen in the planum temporale, which encompasses Wernicke's region: on average, males have been observed to have a larger planum temporale volume than females, consistent with earlier studies on interactions between sex hormones and asymmetrical brain development.[10]

Function

As with other primary sensory cortical areas, auditory sensations reach perception only if they are received and processed by a cortical area. Evidence for this comes from lesion studies in human patients who have sustained damage to cortical areas through tumors or strokes,[11] or from animal experiments in which cortical areas were deactivated by surgical lesions or other methods.[12] Damage to the auditory cortex in humans leads to a loss of awareness of sound, but the ability to react reflexively to sounds remains, because a great deal of subcortical processing takes place in the auditory brainstem and midbrain.[13][14][15]

Neurons in the auditory cortex are organized according to the frequency of sound to which they respond best. Neurons at one end of the auditory cortex respond best to low frequencies; neurons at the other respond best to high frequencies. There are multiple auditory areas (much like the multiple areas in the visual cortex), which can be distinguished anatomically and by the fact that each contains a complete "frequency map." This frequency map (known as a tonotopic map) likely reflects the fact that the cochlea is arranged according to sound frequency. The auditory cortex is involved in tasks such as identifying and segregating "auditory objects" and identifying the location of a sound in space. It has also been shown that A1 encodes complex and abstract aspects of auditory stimuli without encoding their "raw" aspects, such as frequency content or the presence of a distinct sound or its echoes.[16]

Human brain scans have indicated that a peripheral part of this region is active when people try to identify musical pitch. Individual cells are consistently excited by sounds at a specific frequency or at multiples of that frequency.

The auditory cortex plays an important yet ambiguous role in hearing. Exactly what happens once auditory information passes into the cortex is unclear. There is a large degree of individual variation in the auditory cortex, as noted by English biologist James Beament, who wrote, "The cortex is so complex that the most we may ever hope for is to understand it in principle, since the evidence we already have suggests that no two cortices work in precisely the same way."[17]

In the hearing process, multiple sounds are transduced simultaneously. The role of the auditory system is to decide which components belong together as one sound. Many have surmised that this grouping is based on the location of sounds. However, sounds are distorted in numerous ways when they reflect off different media, which makes this explanation unlikely. Instead, the auditory cortex forms groupings based on fundamental properties; in music, for example, these would include harmony, timing, and pitch.[18]

The primary auditory cortex lies in the superior temporal gyrus of the temporal lobe and extends into the lateral sulcus and the transverse temporal gyri (also called Heschl's gyri). Final sound processing is then performed by the parietal and frontal lobes of the human cerebral cortex. Animal studies indicate that auditory fields of the cerebral cortex receive ascending input from the auditory thalamus and that they are interconnected both within the same hemisphere and across the two hemispheres.

The auditory cortex is composed of fields that differ from each other in both structure and function.[19] The number of fields varies across species, from as few as 2 in rodents to as many as 15 in the rhesus monkey. The number, location, and organization of fields in the human auditory cortex are not yet known. What is known about the human auditory cortex draws on studies in other mammals, including primates, whose findings are used to interpret electrophysiological tests and functional imaging studies of the human brain.

When each instrument of a symphony orchestra or jazz band plays the same note, the quality of each sound is different, but the musician perceives each note as having the same pitch. Neurons of the auditory cortex are able to respond to pitch. Studies in the marmoset monkey have shown that pitch-selective neurons are located in a cortical region near the anterolateral border of the primary auditory cortex. A pitch-selective area in a similar location has also been identified in functional imaging studies in humans.[20][21]
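The point that different instruments can share one pitch while differing in sound quality can be illustrated with a toy signal-processing sketch. It is not a model of cortical neurons. It builds hypothetical complex tones that share an assumed 200 Hz fundamental but have different harmonic amplitudes (standing in for different timbres), then estimates each tone's repetition rate with a simple autocorrelation pitch estimator, a standard signal-processing technique swapped in here purely for illustration. The sample rate, frequencies, amplitudes, and function names (`complex_tone`, `estimate_pitch`) are all assumptions.

```python
import math

# Assumption: sample rate chosen for this illustration only.
SAMPLE_RATE = 8000  # samples per second


def complex_tone(f0, harmonic_amps, duration=0.1, sr=SAMPLE_RATE):
    """Sum of sine harmonics of f0; harmonic_amps[k] is the amplitude of harmonic k+1."""
    n_samples = int(duration * sr)
    return [
        sum(a * math.sin(2 * math.pi * f0 * (k + 1) * t / sr)
            for k, a in enumerate(harmonic_amps))
        for t in range(n_samples)
    ]


def estimate_pitch(signal, sr=SAMPLE_RATE, fmin=50.0, fmax=1000.0):
    """Return sr / lag for the lag (period in samples) with the largest autocorrelation.

    Lags are searched between the periods of fmax and fmin. The raw, unnormalized
    sum slightly favors shorter lags, so multiples of the true period do not win.
    """
    min_lag = int(sr / fmax)
    max_lag = int(sr / fmin)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(signal[i] * signal[i + lag] for i in range(len(signal) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sr / best_lag


if __name__ == "__main__":
    # Hypothetical "instruments": same fundamental, different harmonic mixtures.
    mellow = complex_tone(200.0, [1.0, 0.2, 0.05])            # energy mostly in the fundamental
    bright = complex_tone(200.0, [1.0, 0.8, 0.6, 0.4])        # stronger upper harmonics
    no_fundamental = complex_tone(200.0, [0.0, 1.0, 1.0, 1.0])  # first harmonic removed
    for name, tone in [("mellow", mellow), ("bright", bright), ("no fundamental", no_fundamental)]:
        print(name, estimate_pitch(tone))
```

In this sketch all three tones yield 200.0 Hz: changing the harmonic amplitudes changes the waveform's shape but not its repetition period. The third tone, whose first harmonic is absent, loosely echoes the "missing fundamental" named in the title of the cited Zatorre commentary; real pitch perception is far richer than a single autocorrelation peak.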

The primary auditory cortex is subject to modulation by numerous neurotransmitters, including norepinephrine, which has been shown to decrease cellular excitability in all layers of the temporal cortex. Activation of alpha-1 adrenergic receptors by norepinephrine decreases glutamatergic excitatory postsynaptic potentials at AMPA receptors.[22]

Relationship to the auditory system

The auditory cortex is the most highly organized processing unit of sound in the brain. This cortical area is the neural crux of hearing and, in humans, of language and music. The auditory cortex is divided into three separate parts: the primary, secondary, and tertiary auditory cortex. These structures are arranged concentrically around one another, with the primary cortex in the middle and the tertiary cortex on the outside.

The primary auditory cortex is tonotopically organized, which means that neighboring cells in the cortex respond to neighboring frequencies.[23] Tonotopic mapping is preserved throughout most of the auditory circuit. The primary auditory cortex receives direct input from the medial geniculate nucleus of the thalamus and is therefore thought to identify the fundamental elements of music, such as pitch and loudness.
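The tonotopic principle (neighboring cells tuned to neighboring frequencies) can be sketched as an idealized one-dimensional map. This is a simplified illustration, not a description of any measured cortex: it assumes the map spans roughly the human audible range (20 Hz to 20 kHz), that best frequency rises logarithmically with position so equal distances correspond to equal frequency ratios, and that each model neuron has a Gaussian tuning curve of an arbitrary width. The constants and the functions `best_frequency`, `position_for`, and `tuning_response` are hypothetical.

```python
import math

# Assumptions for this sketch (not measured values): the map spans roughly the
# human audible range, and best frequency rises logarithmically with position.
F_LOW_HZ = 20.0
F_HIGH_HZ = 20_000.0


def best_frequency(position: float) -> float:
    """Best frequency (Hz) at a normalized position on the idealized map.

    0.0 is the low-frequency end and 1.0 the high-frequency end. Because the
    spacing is logarithmic, equal steps in position give equal frequency ratios.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0.0 and 1.0")
    return F_LOW_HZ * (F_HIGH_HZ / F_LOW_HZ) ** position


def position_for(freq_hz: float) -> float:
    """Inverse of best_frequency: where on the map a frequency is represented best."""
    if not F_LOW_HZ <= freq_hz <= F_HIGH_HZ:
        raise ValueError("frequency outside the modeled range")
    return math.log(freq_hz / F_LOW_HZ) / math.log(F_HIGH_HZ / F_LOW_HZ)


def tuning_response(neuron_position: float, freq_hz: float, width: float = 0.05) -> float:
    """Hypothetical Gaussian tuning curve: 1.0 when the tone lands at the neuron's
    own map position, falling off for tones represented farther away."""
    distance = position_for(freq_hz) - neuron_position
    return math.exp(-0.5 * (distance / width) ** 2)


if __name__ == "__main__":
    neurons = [i / 10 for i in range(11)]  # eleven evenly spaced model neurons
    for p in neurons:
        print(f"position {p:.1f}: best frequency {best_frequency(p):8.1f} Hz")
    # The model neuron that responds most strongly to a 1 kHz tone
    strongest = max(neurons, key=lambda p: tuning_response(p, 1000.0))
    print("strongest response to 1 kHz at position", strongest)
```

With these assumed constants, every step of 0.1 along the map multiplies the best frequency by the same factor (1000 to the power 0.1, about 1.995, so roughly one octave), which is one simple way to express "neighboring cells respond to neighboring frequencies."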

An evoked-response study of congenitally deaf kittens used local field potentials to measure cortical plasticity in the auditory cortex. Kittens that were artificially stimulated (by long-term cochlear electrostimulation) were compared with an unstimulated congenitally deaf cat (CDC) as a control and with normal-hearing cats. The field potentials measured in the artificially stimulated CDCs eventually became much stronger than those of normal-hearing cats.[24] This finding accords with a study by Eckart Altenmüller, which observed that students who received musical instruction had greater cortical activation than those who did not.[25]

The auditory cortex has distinct responses to sounds in the gamma band. When subjects are exposed to three or four cycles of a 40 hertz click, a distinctive spike appears in the EEG data that is not present for other stimuli. The spike in neuronal activity at this frequency is not confined to the tonotopic organization of the auditory cortex. It has been theorized that gamma frequencies are resonant frequencies of certain areas of the brain, and they appear to affect the visual cortex as well.[26] Gamma band activation (25 to 100 Hz) has been shown to be present during the perception of sensory events and during recognition.

In a 2000 study by Knief and colleagues, subjects were presented with eight-note sequences from well-known tunes, such as Yankee Doodle and Frère Jacques. In randomly chosen trials, the sixth and seventh notes were omitted, and electroencephalography and magnetoencephalography were used to measure the neural responses. Specifically, the gamma waves induced by the auditory task were measured from the subjects' temples. The omitted stimulus response (OSR)[27] was localized to a slightly different position: 7 mm more anterior, 13 mm more medial, and 13 mm more superior than the response to the complete sequences. The OSR recordings also showed characteristically less gamma activity than the recordings for the complete sequences. The responses evoked during the omitted sixth and seventh notes are assumed to reflect imagined notes, and they were characteristically different, especially in the right hemisphere.[28]

The right auditory cortex has long been shown to be more sensitive to tonality (high spectral resolution), while the left auditory cortex has been shown to be more sensitive to minute sequential differences (rapid temporal changes) in sound, such as in speech.[29]

Tonality is represented in more places than just the auditory cortex; one such area is the rostromedial prefrontal cortex (RMPFC).[30] An fMRI study explored the brain areas active during tonality processing. Its results showed preferential blood-oxygen-level-dependent activation of specific voxels in RMPFC for specific tonal arrangements. Although these sets of voxels did not represent the same tonal arrangements across subjects, or within a subject across multiple trials, the findings suggest that RMPFC, an area not usually associated with audition, codes for immediate tonal arrangements. RMPFC is a subsection of the medial prefrontal cortex, which projects to many diverse areas including the amygdala, and is thought to aid in the inhibition of negative emotion.[31]

Another study has suggested that people who experience 'chills' while listening to music have a higher volume of fibres connecting their auditory cortex to areas associated with emotional processing.[32]

In a study of dichotic listening to speech, in which one message is presented to the right ear and another to the left, participants reported letters with stop consonants (e.g. 'p', 't', 'k', 'b') far more often when these were presented to the right ear than to the left. However, when presented with phonemic sounds of longer duration, such as vowels, the participants did not favor either ear.[33] Because of the predominantly contralateral organization of the auditory system, the right ear is more strongly connected to Wernicke's area, which is located in the posterior section of the superior temporal gyrus in the left cerebral hemisphere.

Sounds entering the auditory cortex are treated differently depending on whether or not they register as speech. According to the strong speech mode hypothesis, people listening to speech engage perceptual mechanisms unique to speech; according to the weak speech mode hypothesis, they engage their knowledge of language as a whole.

See also

- Auditory system

- Neuronal encoding of sound

- Noise health effects

References

- Cf. Pickles, James O. (2012). An Introduction to the Physiology of Hearing (4th ed.). Bingley, UK: Emerald Group Publishing Limited, p. 238.

- Blanco-Elorrieta, Esti; Pylkkänen, Liina (2017-08-16). "Bilingual language switching in the lab vs. in the wild: The Spatio-temporal dynamics of adaptive language control". Journal of Neuroscience. 37 (37): 9022–9036. doi:10.1523/JNEUROSCI.0553-17.2017. PMC 5597983. PMID 28821648.

- Cf. Pickles, James O. (2012). An Introduction to the Physiology of Hearing (4th ed.). Bingley, UK: Emerald Group Publishing Limited, pp. 215–217.

- Nakai, Y; Jeong, JW; Brown, EC; Rothermel, R; Kojima, K; Kambara, T; Shah, A; Mittal, S; Sood, S; Asano, E (2017). "Three- and four-dimensional mapping of speech and language in patients with epilepsy". Brain. 140 (5): 1351–1370. doi:10.1093/brain/awx051. PMC 5405238. PMID 28334963.

- Hickok, Gregory; Poeppel, David (May 2007). "The cortical organization of speech processing". Nature Reviews Neuroscience. 8 (5): 393–402. doi:10.1038/nrn2113. ISSN 1471-0048. PMID 17431404. S2CID 6199399.

- Cf. Pickles, James O. (2012). An Introduction to the Physiology of Hearing (4th ed.). Bingley, UK: Emerald Group Publishing Limited, p. 211 f.

- Moerel, Michelle; De Martino, Federico; Formisano, Elia (29 July 2014). "An anatomical and functional topography of human auditory cortical areas". Frontiers in Neuroscience. 8: 225. doi:10.3389/fnins.2014.00225. PMC 4114190. PMID 25120426.

- Rauschecker, Josef P; Scott, Sophie K (26 May 2009). "Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing". Nature Neuroscience. 12 (6): 718–724. doi:10.1038/nn.2331. PMC 2846110. PMID 19471271.

- de Villers-Sidani, Etienne; Chang, EF; Bao, S; Merzenich, MM (2007). "Critical period window for spectral tuning defined in the primary auditory cortex (A1) in the rat" (PDF). J Neurosci. 27 (1): 180–9. doi:10.1523/JNEUROSCI.3227-06.2007. PMC 6672294. PMID 17202485.

- Kulynych, J. J.; Vladar, K.; Jones, D. W.; Weinberger, D. R. (March 1994). "Gender differences in the normal lateralization of the supratemporal cortex: MRI surface-rendering morphometry of Heschl's gyrus and the planum temporale". Cerebral Cortex. 4 (2): 107–118. doi:10.1093/cercor/4.2.107. ISSN 1047-3211. PMID 8038562.

- Cavinato, M.; Rigon, J.; Volpato, C.; Semenza, C.; Piccione, F. (January 2012). "Preservation of Auditory P300-Like Potentials in Cortical Deafness". PLOS ONE. 7 (1): e29909. Bibcode:2012PLoSO...729909C. doi:10.1371/journal.pone.0029909. PMC 3260175. PMID 22272260.

- Heffner, H.E.; Heffner, R.S. (February 1986). "Hearing loss in Japanese macaques following bilateral auditory cortex lesions" (PDF). Journal of Neurophysiology. 55 (2): 256–271. doi:10.1152/jn.1986.55.2.256. PMID 3950690. Archived from the original (PDF) on 2 August 2010. Retrieved 11 September 2012.

- Rebuschat, P.; Rohrmeier, M.; Hawkins, J.A.; Cross, I. (2011). "Human subcortical auditory function provides a new conceptual framework for considering modularity". Language and Music as Cognitive Systems. pp. 269–282. doi:10.1093/acprof:oso/9780199553426.003.0028. ISBN 9780199553426.

- Krizman, J.; Skoe, E.; Kraus, N. (March 2010). "Stimulus Rate and Subcortical Auditory Processing of Speech" (PDF). Audiology and Neurotology. 15 (5): 332–342. doi:10.1159/000289572. PMC 2919427. PMID 20215743. Archived from the original (PDF) on 15 April 2012. Retrieved 11 September 2012.

- Strait, D.L.; Kraus, N.; Skoe, E.; Ashley, R. (2009). "Musical Experience Promotes Subcortical Efficiency in Processing Emotional Vocal Sounds" (PDF). Annals of the New York Academy of Sciences. 1169 (1): 209–213. Bibcode:2009NYASA1169..209S. doi:10.1111/j.1749-6632.2009.04864.x. PMID 19673783. S2CID 4845922. Archived from the original (PDF) on 15 April 2012. Retrieved 11 September 2012.

- Chechik, Gal; Nelken, Israel (2012-11-13). "Auditory abstraction from spectro-temporal features to coding auditory entities". Proceedings of the National Academy of Sciences of the United States of America. 109 (46): 18968–18973. Bibcode:2012PNAS..10918968C. doi:10.1073/pnas.1111242109. ISSN 0027-8424. PMC 3503225. PMID 23112145.

- Beament, James (2001). How We Hear Music: the Relationship Between Music and the Hearing Mechanism. Woodbridge: Boydell Press. p. 93. ISBN 9780851158136. JSTOR 10.7722/j.ctt1f89rq1.

- Deutsch, Diana (February 2010). "Hearing Music in Ensembles". Physics Today. Vol. 63, no. 2. p. 40. doi:10.1063/1.3326988.

- Cant, NB; Benson, CG (June 15, 2003). "Parallel auditory pathways: projection patterns of the different neuronal populations in the dorsal and ventral cochlear nuclei". Brain Res Bull. 60 (5–6): 457–74. doi:10.1016/S0361-9230(03)00050-9. PMID 12787867. S2CID 42563918.

- Bendor, D; Wang, X (2005). "The neuronal representation of pitch in primate auditory cortex". Nature. 436 (7054): 1161–5. Bibcode:2005Natur.436.1161B. doi:10.1038/nature03867. PMC 1780171. PMID 16121182.

- Zatorre, RJ (2005). "Neuroscience: finding the missing fundamental". Nature. 436 (7054): 1093–4. Bibcode:2005Natur.436.1093Z. doi:10.1038/4361093a. PMID 16121160. S2CID 4429583.

- Dinh, L; Nguyen, T; Salgado, H; Atzori, M (2009). "Norepinephrine homogeneously inhibits alpha-amino-3-hydroxyl-5-methyl-4-isoxazole-propionate- (AMPAR-) mediated currents in all layers of the temporal cortex of the rat". Neurochem Res. 34 (11): 1896–906. doi:10.1007/s11064-009-9966-z. PMID 19357950. S2CID 25255160.

- Lauter, Judith L; Herscovitch, P; Formby, C; Raichle, ME (1985). "Tonotopic organization in human auditory cortex revealed by positron emission tomography". Hearing Research. 20 (3): 199–205. doi:10.1016/0378-5955(85)90024-3. PMID 3878839. S2CID 45928728.

- Klinke, Rainer; Kral, Andrej; Heid, Silvia; Tillein, Jochen; Hartmann, Rainer (September 10, 1999). "Recruitment of the auditory cortex in congenitally deaf cats by long-term cochlear electrostimulation". Science. 285 (5434): 1729–33. doi:10.1126/science.285.5434.1729. PMID 10481008. S2CID 38985173.

- Strickland (Winter 2001). "Music and the brain in childhood development". Childhood Education. 78 (2): 100–4. doi:10.1080/00094056.2002.10522714. S2CID 219597861.

- Tallon-Baudry, C.; Bertrand, O. (April 1999). "Oscillatory gamma activity in humans and its role in object representation". Trends in Cognitive Sciences. 3 (4): 151–162. doi:10.1016/S1364-6613(99)01299-1. PMID 10322469. S2CID 1308261.

- Busse, L; Woldorff, M (April 2003). "The ERP omitted stimulus response to "no-stim" events and its implications for fast-rate event-related fMRI designs". NeuroImage. 18 (4): 856–864. doi:10.1016/s1053-8119(03)00012-0. PMID 12725762. S2CID 25351923.

- Knief, A.; Schulte, M.; Fujiki, N.; Pantev, C. "Oscillatory Gamma band and Slow brain Activity Evoked by Real and Imaginary Musical Stimuli". S2CID 17442976.

- LaCroix, Arianna; Diaz, Alvaro F.; Rogalsky, Corianne (2015). "The relationship between the neural computations for speech and music perception is context-dependent: an activation likelihood estimate study". Frontiers in Psychology. 6 (1138): 18. ISBN 9782889199112.

- Janata, P.; Birk, J.L.; Van Horn, J.D.; Leman, M.; Tillmann, B.; Bharucha, J.J. (December 2002). "The Cortical Topography of Tonal Structures Underlying Western Music" (PDF). Science. 298 (5601): 2167–2170. Bibcode:2002Sci...298.2167J. doi:10.1126/science.1076262. PMID 12481131. S2CID 3031759. Retrieved 11 September 2012.

- Cassel, M. D.; Wright, D. J. (September 1986). "Topography of projections from the medial prefrontal cortex to the amygdala in the rat". Brain Research Bulletin. 17 (3): 321–333. doi:10.1016/0361-9230(86)90237-6. PMID 2429740. S2CID 22826730.

- Sachs, Matthew E.; Ellis, Robert J.; Schlaug, Gottfried; Loui, Psyche (2016). "Brain connectivity reflects human aesthetic responses to music". Social Cognitive and Affective Neuroscience. 11 (6): 884–891. doi:10.1093/scan/nsw009. PMC 4884308. PMID 26966157.

- Jerger, James; Martin, Jeffrey (2004-12-01). "Hemispheric asymmetry of the right ear advantage in dichotic listening". Hearing Research. 198 (1): 125–136. doi:10.1016/j.heares.2004.07.019. ISSN 0378-5955. PMID 15567609. S2CID 2504300.