Approximation error

The approximation error in a data value is the discrepancy between an exact value and some approximation to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value).

An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm).

In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition

One commonly distinguishes between the relative error and the absolute error.

Given some value v and its approximation vapprox, the absolute error is

where the vertical bars denote the absolute value. If the relative error is

and the percent error (an expression of the relative error) is

In words, the absolute error is the magnitude of the difference between the exact value and the approximation. The relative error is the absolute error divided by the magnitude of the exact value.

An error bound is an upper limit on the relative or absolute size of an approximation error.

Generalizations

These definitions can be extended to the case when and are n-dimensional vectors, by replacing the absolute value with an n-norm.[1]

Examples

As an example, if the exact value is 50 and the approximation is 49.9, then the absolute error is 0.1 and the relative error is 0.1/50 = 0.002 = 0.2%. Another example would be if, in measuring a 6 mL beaker, the value read was 5 mL. The correct reading being 6 mL, this means the percent error in that particular situation is, rounded, 16.7%.

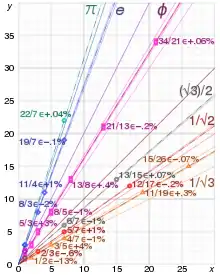

The relative error is often used to compare approximations of numbers of widely differing size; for example, approximating the number 1,000 with an absolute error of 3 is, in most applications, much worse than approximating the number 1,000,000 with an absolute error of 3; in the first case the relative error is 0.003 and in the second it is only 0.000003.

There are two features of relative error that should be kept in mind. Firstly, relative error is undefined when the true value is zero as it appears in the denominator (see below). Secondly, relative error only makes sense when measured on a ratio scale, (i.e. a scale which has a true meaningful zero), otherwise it would be sensitive to the measurement units. For example, when an absolute error in a temperature measurement given in Celsius scale is 1 °C, and the true value is 2 °C, the relative error is 0.5, and the percent error is 50%. For this same case, when the temperature is given in Kelvin scale, the same 1 K absolute error with the same true value of 275.15 K gives a relative error of 3.63×10−3 and a percent error of only 0.363%. Celsius temperature is measured on an interval scale, whereas the Kelvin scale has a true zero and so is a ratio scale. Thus the relative error is not very meaningful.

Instruments

In most indicating instruments, the accuracy is guaranteed to a certain percentage of full-scale reading. The limits of these deviations from the specified values are known as limiting errors or guarantee errors.[2]

See also

- Accepted and experimental value

- Condition number

- Errors and residuals in statistics

- Experimental uncertainty analysis

- Machine epsilon

- Measurement error

- Measurement uncertainty

- Propagation of uncertainty

- Quantization error

- Relative difference

- Round-off error

- Uncertainty

References

External links

- Weisstein, Eric W. "Percentage error". MathWorld.