Stable distribution

In probability theory, a distribution is said to be stable if a linear combination of two independent random variables with this distribution has the same distribution, up to location and scale parameters. A random variable is said to be stable if its distribution is stable. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.[1][2]

|

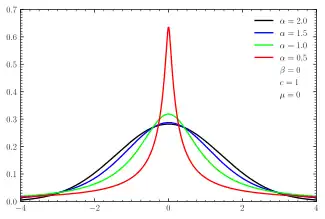

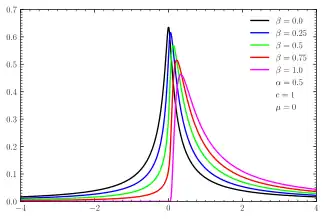

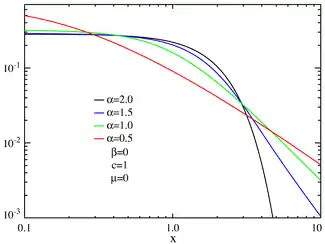

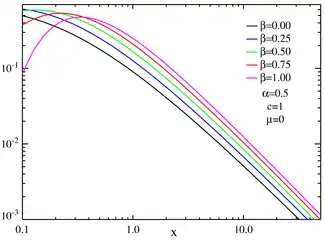

Probability density function  Symmetric -stable distributions with unit scale factor  Skewed centered stable distributions with unit scale factor | |||

|

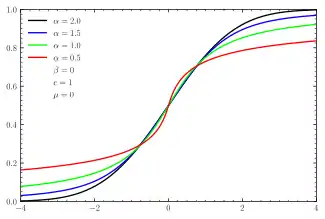

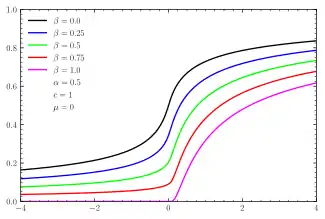

Cumulative distribution function  CDFs for symmetric -stable distributions  CDFs for skewed centered stable distributions | |||

| Property | Value |
|---|---|
| Parameters | α ∈ (0, 2]: stability parameter; β ∈ [−1, 1]: skewness parameter; c ∈ (0, ∞): scale parameter; μ ∈ (−∞, ∞): location parameter |
| Support | x ∈ [μ, +∞) if α < 1 and β = 1; x ∈ (−∞, μ] if α < 1 and β = −1; x ∈ R otherwise |
| PDF | not analytically expressible, except for some parameter values |
| CDF | not analytically expressible, except for certain parameter values |
| Mean | μ when α > 1, otherwise undefined |
| Median | μ when β = 0, otherwise not analytically expressible |
| Mode | μ when β = 0, otherwise not analytically expressible |
| Variance | 2c² when α = 2, otherwise infinite |
| Skewness | 0 when α = 2, otherwise undefined |
| Ex. kurtosis | 0 when α = 2, otherwise undefined |
| Entropy | not analytically expressible, except for certain parameter values |
| MGF | $\exp(t\mu + c^2t^2)$ when α = 2, otherwise undefined |
| CF | $\exp\!\big[it\mu - \lvert ct\rvert^{\alpha}\,(1 - i\beta\,\operatorname{sgn}(t)\,\Phi)\big]$, where $\Phi = \tan(\pi\alpha/2)$ if α ≠ 1 and $\Phi = -\tfrac{2}{\pi}\log\lvert t\rvert$ if α = 1 |

Of the four parameters defining the family, most attention has been focused on the stability parameter, α (see panel). Stable distributions have 0 < α ≤ 2, with the upper bound corresponding to the normal distribution, and α = 1 to the Cauchy distribution. The distributions have infinite variance for α < 2, and undefined mean for α ≤ 1.

The importance of stable probability distributions is that they are "attractors" for properly normed sums of independent and identically distributed (iid) random variables. The normal distribution defines a family of stable distributions. By the classical central limit theorem, the properly normed sum of a set of random variables, each with finite variance, will tend toward a normal distribution as the number of variables increases. Without the finite variance assumption, the limit may be a stable distribution that is not normal. Mandelbrot referred to such distributions as "stable Paretian distributions",[3][4][5] after Vilfredo Pareto. In particular, he referred to those maximally skewed in the positive direction with 1 < α < 2 as "Pareto–Lévy distributions",[1] which he regarded as better descriptions of stock and commodity prices than normal distributions.[6]

Definition

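A non-degenerate distribution is a stable distribution if it satisfies the following property:

- Let X₁ and X₂ be independent copies of a random variable X. Then X is said to be stable if, for any constants a > 0 and b > 0, the random variable aX₁ + bX₂ has the same distribution as cX + d for some constants c > 0 and d. The distribution is said to be strictly stable if this holds with d = 0.[7]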

Since the normal distribution, the Cauchy distribution, and the Lévy distribution all have the above property, it follows that they are special cases of stable distributions.
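For the normal distribution the defining property can be checked directly: if X₁ and X₂ are independent N(0, σ²) variables, then aX₁ + bX₂ has the same law as cX with c = √(a² + b²) and d = 0. The following is a minimal sketch of that check by simulation; the function name, sample size and seed are illustrative choices, not part of any library.

```python
import math
import random
import statistics

def stability_check_normal(a, b, sigma=1.0, n=100_000, seed=0):
    """Return (std of a*X1 + b*X2, std of c*X, theoretical c*sigma) for normal X."""
    rng = random.Random(seed)
    # Linear combination of two independent copies of X ~ N(0, sigma^2).
    combo = [a * rng.gauss(0.0, sigma) + b * rng.gauss(0.0, sigma) for _ in range(n)]
    # Rescaled single copy, with c chosen as sqrt(a^2 + b^2).
    c = math.hypot(a, b)
    scaled = [c * rng.gauss(0.0, sigma) for _ in range(n)]
    return statistics.pstdev(combo), statistics.pstdev(scaled), c * sigma

if __name__ == "__main__":
    print(stability_check_normal(3.0, 4.0))
```

Both sample standard deviations should be close to 5 for a = 3, b = 4. Comparing standard deviations only works here because the normal case has finite variance; for α < 2 one would compare quantiles instead.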

Such distributions form a four-parameter family of continuous probability distributions parametrized by location and scale parameters μ and c, respectively, and two shape parameters β and α, roughly corresponding to measures of asymmetry and concentration, respectively (see the figures).

The characteristic function φ(t) of any probability distribution is the Fourier transform of its probability density function f(x). The density function is therefore the inverse Fourier transform of the characteristic function:[8]

$$\varphi(t) = \int_{-\infty}^{\infty} f(x)\,e^{ixt}\,dx, \qquad f(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} \varphi(t)\,e^{-ixt}\,dt.$$

Although the probability density function for a general stable distribution cannot be written analytically, the general characteristic function can be expressed analytically. A random variable X is called stable if its characteristic function can be written as[7][9]

$$\varphi(t;\alpha,\beta,c,\mu) = \exp\!\big(it\mu - |ct|^{\alpha}\,(1 - i\beta\,\operatorname{sgn}(t)\,\Phi)\big),$$

where sgn(t) is the sign of t and

$$\Phi = \begin{cases} \tan\!\left(\dfrac{\pi\alpha}{2}\right) & \alpha \neq 1, \\[2ex] -\dfrac{2}{\pi}\log|t| & \alpha = 1. \end{cases}$$

μ ∈ R is a shift parameter, and β ∈ [−1, 1], called the skewness parameter, is a measure of asymmetry. Notice that in this context the usual skewness is not well defined: for α < 2 the distribution does not admit second or higher moments, and the usual skewness is defined through the third central moment.

The reason this gives a stable distribution is that the characteristic function for the sum of two independent random variables equals the product of the two corresponding characteristic functions. Adding two random variables from a stable distribution gives something with the same values of α and β, but possibly different values of μ and c.

Not every function is the characteristic function of a legitimate probability distribution (that is, one whose cumulative distribution function is real and goes from 0 to 1 without decreasing), but the characteristic functions given above will be legitimate so long as the parameters are in their ranges. The value of the characteristic function at some value t is the complex conjugate of its value at −t as it should be so that the probability distribution function will be real.
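The conjugate-symmetry property can be checked numerically. The sketch below assumes the first parametrization, φ(t) = exp(itμ − |ct|^α (1 − iβ sgn(t) Φ)) with Φ = tan(πα/2) for α ≠ 1 and Φ = −(2/π) log|t| for α = 1; the function name `stable_cf` is a hypothetical helper, not a library call.

```python
import cmath
import math

def stable_cf(t, alpha, beta, c, mu):
    """Characteristic function of a stable law (first parametrization)."""
    if t == 0:
        return complex(1.0, 0.0)  # every characteristic function equals 1 at t = 0
    sgn = 1.0 if t > 0 else -1.0
    if alpha != 1:
        phi = math.tan(math.pi * alpha / 2)
    else:
        phi = -(2 / math.pi) * math.log(abs(t))
    exponent = 1j * t * mu - abs(c * t) ** alpha * (1 - 1j * beta * sgn * phi)
    return cmath.exp(exponent)

if __name__ == "__main__":
    p = stable_cf(0.8, 1.5, 0.5, 1.0, 0.0)
    m = stable_cf(-0.8, 1.5, 0.5, 1.0, 0.0)
    print(p, m, abs(m - p.conjugate()))
```

The last printed value should be zero up to rounding, and the modulus of φ never exceeds 1 for admissible parameters.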

In the simplest case β = 0, the characteristic function is just a stretched exponential function; the distribution is symmetric about μ and is referred to as a (Lévy) symmetric alpha-stable distribution, often abbreviated SαS.

When α < 1 and β = 1, the distribution is supported on [μ, ∞).

The parameter c > 0 is a scale factor which is a measure of the width of the distribution, while α is the exponent or index of the distribution and specifies its asymptotic behavior.

Parametrizations

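The above definition is only one of the parametrizations in use for stable distributions; it is the most common, but its probability density is not continuous in the parameters at α = 1.[10]

A continuous parametrization is[7]

$$\varphi(t;\alpha,\beta,\gamma,\delta) = \exp\!\big(it\delta - |\gamma t|^{\alpha}\,(1 - i\beta\,\operatorname{sgn}(t)\,\Phi)\big),$$

where:

$$\Phi = \begin{cases} \left(1 - |\gamma t|^{1-\alpha}\right)\tan\!\left(\dfrac{\pi\alpha}{2}\right) & \alpha \neq 1, \\[2ex] -\dfrac{2}{\pi}\log|\gamma t| & \alpha = 1. \end{cases}$$

The ranges of α and β are the same as before, γ (like c) should be positive, and δ (like μ) should be real.

In either parametrization one can make a linear transformation of the random variable to get a random variable whose density is f(y; α, β, 1, 0). In the first parametrization, this is done by defining the new variable:

$$y = \begin{cases} \dfrac{x-\mu}{c} & \alpha \neq 1, \\[2ex] \dfrac{x-\mu}{c} - \beta\,\dfrac{2}{\pi}\ln c & \alpha = 1. \end{cases}$$

For the second parametrization, we simply use

$$y = \frac{x-\delta}{\gamma}$$

no matter what α is. In the first parametrization, if the mean exists (that is, α > 1) then it is equal to μ, whereas in the second parametrization when the mean exists it is equal to

$$\delta - \beta\gamma\tan\!\left(\frac{\pi\alpha}{2}\right).$$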
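The relation between the two parametrizations can be made concrete with a small numerical check. The sketch below assumes the continuous parametrization has Φ = (1 − |γt|^(1−α)) tan(πα/2) for α ≠ 1 and Φ = −(2/π) log|γt| for α = 1 (the form consistent with the mean δ − βγ tan(πα/2) quoted above), and that the scale is shared (c = γ). Under those assumptions the two characteristic functions coincide once μ is shifted; all function names are hypothetical.

```python
import cmath
import math

def cf_first(t, alpha, beta, c, mu):
    """First parametrization: Phi does not depend on the scale."""
    if t == 0:
        return complex(1.0, 0.0)
    sgn = math.copysign(1.0, t)
    phi = math.tan(math.pi * alpha / 2) if alpha != 1 else -(2 / math.pi) * math.log(abs(t))
    return cmath.exp(1j * t * mu - abs(c * t) ** alpha * (1 - 1j * beta * sgn * phi))

def cf_second(t, alpha, beta, gamma, delta):
    """Continuous parametrization (assumed form, see lead-in)."""
    if t == 0:
        return complex(1.0, 0.0)
    sgn = math.copysign(1.0, t)
    gt = abs(gamma * t)
    if alpha != 1:
        phi = (1 - gt ** (1 - alpha)) * math.tan(math.pi * alpha / 2)
    else:
        phi = -(2 / math.pi) * math.log(gt)
    return cmath.exp(1j * t * delta - gt ** alpha * (1 - 1j * beta * sgn * phi))

def mu_from_delta(alpha, beta, gamma, delta):
    """Location mu of the first parametrization matching (gamma, delta) of the second."""
    if alpha != 1:
        return delta - beta * gamma * math.tan(math.pi * alpha / 2)
    return delta - beta * gamma * (2 / math.pi) * math.log(gamma)

if __name__ == "__main__":
    a, b, g, d = 1.5, 0.6, 1.7, 0.4
    mu = mu_from_delta(a, b, g, d)
    print(mu, cf_second(0.3, a, b, g, d), cf_first(0.3, a, b, g, mu))
```

For α > 1 the value returned by `mu_from_delta` is the mean of the distribution, matching the expression given in the text.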

The distribution

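A stable distribution is therefore specified by the above four parameters. It can be shown that any non-degenerate stable distribution has a smooth (infinitely differentiable) density function.[7] If f(x; α, β, c, μ) denotes the density of X and Y is a weighted sum of independent copies of X:

$$Y = \sum_{i=1}^{N} k_i\,(X_i - \mu),$$

then Y has the density $\tfrac{1}{s}\,f(y/s;\alpha,\beta,c,0)$ with

$$s = \left(\sum_{i=1}^{N} |k_i|^{\alpha}\right)^{1/\alpha}.$$

(This form assumes α ≠ 1 or β = 0, and nonnegative weights k_i when β ≠ 0; otherwise the skewness and location of Y change as well.)

The asymptotic behavior is described, for α < 2, by:[7]

$$f(x) \sim \frac{1}{|x|^{1+\alpha}}\left(c^{\alpha}\,\big(1 + \operatorname{sgn}(x)\,\beta\big)\,\sin\!\left(\frac{\pi\alpha}{2}\right)\frac{\Gamma(\alpha+1)}{\pi}\right),$$

where Γ is the Gamma function (except that when α ≥ 1 and β = ±1, the tail does not vanish to the left or right, resp., of μ, although the above expression is 0). This "heavy tail" behavior causes the variance of stable distributions to be infinite for all α < 2. This property is illustrated in the log–log plots below.

When α = 2, the distribution is Gaussian (see below), with tails asymptotic to exp(−x²/4c²)/(2c√π).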
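The power-law tail can be compared against the two heavy-tailed cases with closed-form densities. The sketch below assumes the tail formula f(x) ≈ c^α (1 + sgn(x) β) sin(πα/2) Γ(α + 1) / (π |x|^(1+α)) and uses the standard Cauchy (α = 1, β = 0) and Lévy (α = 1/2, β = 1) densities; the function names are illustrative.

```python
import math

def stable_tail(x, alpha, beta, c, mu=0.0):
    """Leading-order tail of the stable density for alpha < 2 (assumed formula)."""
    z = x - mu
    sgn = 1.0 if z > 0 else -1.0
    coeff = c ** alpha * (1 + sgn * beta) * math.sin(math.pi * alpha / 2) * math.gamma(alpha + 1) / math.pi
    return coeff / abs(z) ** (1 + alpha)

def cauchy_pdf(x, c, mu=0.0):
    """Cauchy density with scale c and location mu."""
    return c / (math.pi * (c * c + (x - mu) ** 2))

def levy_pdf(x, c, mu=0.0):
    """Levy density with scale c and location mu, supported on x > mu."""
    z = x - mu
    return math.sqrt(c / (2 * math.pi)) * math.exp(-c / (2 * z)) / z ** 1.5

if __name__ == "__main__":
    for x in (10.0, 100.0, 1000.0):
        print(x, stable_tail(x, 1.0, 0.0, 1.0) / cauchy_pdf(x, 1.0),
              stable_tail(x, 0.5, 1.0, 1.0) / levy_pdf(x, 1.0))
```

Both ratios approach 1 as x grows, which is the sense in which the formula describes the tail.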

One-sided stable distribution and stable count distribution

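When α < 1 and β = 1, the distribution is supported on [μ, ∞). This family is called the one-sided stable distribution.[11] Its standard distribution (μ = 0) is defined as

- $L_\alpha(x) = f\!\left(x;\alpha,1,\cos\!\left(\tfrac{\alpha\pi}{2}\right)^{1/\alpha},0\right)$, where α < 1.

Let $q = \exp(-i\alpha\pi/2)$; its characteristic function is $\varphi(t;\alpha) = \exp(-q|t|^{\alpha})$. Thus the integral form of its PDF is (note: Im(q) < 0)

$$\begin{aligned} L_\alpha(x) &= \frac{1}{\pi}\,\Re\!\left[\int_{-\infty}^{0} e^{itx}\,e^{-q|t|^{\alpha}}\,dt\right] \\ &= \frac{2}{\pi}\int_{0}^{\infty} e^{-\Re(q)\,t^{\alpha}}\,\sin(tx)\,\sin\!\big(-\Im(q)\,t^{\alpha}\big)\,dt \\ &= \frac{2}{\pi}\int_{0}^{\infty} e^{-\Re(q)\,t^{\alpha}}\,\cos(tx)\,\cos\!\big(\Im(q)\,t^{\alpha}\big)\,dt. \end{aligned}$$

The double-sine integral is more effective for very small x.

Consider the Lévy sum $Y = \sum_{i=1}^{N} X_i$ where $X_i \sim L_\alpha(x)$; then Y has the density $\tfrac{1}{\nu}L_\alpha(x/\nu)$ where $\nu = N^{1/\alpha}$. Setting x = 1, we arrive at the stable count distribution.[12] Its standard distribution is defined as

- $\mathfrak{N}_\alpha(\nu) = \dfrac{\alpha}{\Gamma(1/\alpha)}\,\dfrac{1}{\nu}\,L_\alpha\!\left(\dfrac{1}{\nu}\right)$, where ν > 0 and α < 1.

The stable count distribution is the conjugate prior of the one-sided stable distribution. Its location-scale family is defined as

- $\mathfrak{N}_\alpha(\nu;\nu_0,\theta) = \dfrac{\alpha}{\Gamma(1/\alpha)}\,\dfrac{1}{\nu-\nu_0}\,L_\alpha\!\left(\dfrac{\theta}{\nu-\nu_0}\right)$, where ν > ν₀, θ > 0, and α < 1.

It is also a one-sided distribution, supported on [ν₀, ∞). The location parameter ν₀ is the cut-off location, while θ defines its scale.

When α = 1/2, $L_{1/2}(x)$ is the Lévy distribution, which is an inverse gamma distribution. Thus $\mathfrak{N}_{1/2}(\nu;\nu_0,\theta)$ is a shifted gamma distribution of shape 3/2 and scale 4θ,

- $\mathfrak{N}_{1/2}(\nu;\nu_0,\theta) = \dfrac{1}{4\sqrt{\pi}\,\theta^{3/2}}\,(\nu-\nu_0)^{1/2}\,e^{-(\nu-\nu_0)/(4\theta)}$, where ν > ν₀, θ > 0.

Its mean is ν₀ + 6θ and its standard deviation is √24 θ. It is hypothesized that VIX is distributed like $\mathfrak{N}_{1/2}(\nu;\nu_0,\theta)$, with fitted values of ν₀ and θ reported in Section 7 of [12]. Thus the stable count distribution is the first-order marginal distribution of a volatility process. In this context, ν₀ is called the "floor volatility".

Another approach to derive the stable count distribution is to use the Laplace transform of the one-sided stable distribution (Section 2.4 of [12]),

- $\displaystyle\int_{0}^{\infty} e^{-zx}\,L_\alpha(x)\,dx = e^{-z^{\alpha}}$, where α < 1.

Let x = 1/ν; one can then decompose the integral on the left-hand side as a product distribution of a standard Laplace distribution and a standard stable count distribution,

- $\displaystyle\frac{1}{2}\,\frac{1}{\Gamma(\tfrac{1}{\alpha}+1)}\,e^{-|z|^{\alpha}} = \int_{0}^{\infty} \frac{1}{\nu}\left(\frac{1}{2}e^{-|z|/\nu}\right)\left(\frac{\alpha}{\Gamma(1/\alpha)}\,\frac{1}{\nu}\,L_\alpha\!\left(\frac{1}{\nu}\right)\right)d\nu = \int_{0}^{\infty} \frac{1}{\nu}\left(\frac{1}{2}e^{-|z|/\nu}\right)\mathfrak{N}_\alpha(\nu)\,d\nu$, where α < 1.

This is called the "lambda decomposition" (see Section 4 of [12]), since the right-hand side was named the "symmetric lambda distribution" in Lihn's earlier works. However, it has several more popular names, such as "exponential power distribution" or "generalized error/normal distribution", often used when α > 1.

The n-th moment of $\mathfrak{N}_\alpha(\nu)$ is proportional to the −(n + 1)-th moment of $L_\alpha(x)$, and all positive moments are finite. This in a way resolves the thorny issue of diverging moments in the stable distribution.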
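The α = 1/2 case above is easy to sanity-check numerically, since the stable count density is then a shifted gamma density of shape 3/2 and scale 4θ. The sketch below integrates that closed form with a plain midpoint rule and compares the result with the mean ν₀ + 6θ and standard deviation √24 θ. The parameter values are arbitrary illustrations (not the fitted VIX values), and the function names are hypothetical.

```python
import math

def stable_count_half(nu, nu0, theta):
    """Shifted gamma density (shape 3/2, scale 4*theta) for the alpha = 1/2 case."""
    z = nu - nu0
    if z <= 0:
        return 0.0
    return math.sqrt(z) * math.exp(-z / (4 * theta)) / (4 * math.sqrt(math.pi) * theta ** 1.5)

def numeric_moments(nu0, theta, width=200.0, steps=200_000):
    """Midpoint-rule estimates of total mass, mean and standard deviation."""
    h = width * theta / steps  # integrate over [nu0, nu0 + width*theta]
    m0 = m1 = m2 = 0.0
    for k in range(steps):
        nu = nu0 + (k + 0.5) * h
        p = stable_count_half(nu, nu0, theta) * h
        m0 += p
        m1 += nu * p
        m2 += nu * nu * p
    mean = m1 / m0
    return m0, mean, math.sqrt(m2 / m0 - mean * mean)

if __name__ == "__main__":
    print(numeric_moments(2.0, 0.5))  # compare with (1, 2 + 6*0.5, sqrt(24)*0.5)
```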

Properties

- All stable distributions are infinitely divisible.

- With the exception of the normal distribution (α = 2), stable distributions are leptokurtotic and heavy-tailed distributions.

- Closure under convolution

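Stable distributions are closed under convolution for a fixed value of α. Since convolution is equivalent to multiplication of the Fourier-transformed function, it follows that the product of two stable characteristic functions with the same α will yield another such characteristic function. The product of two stable characteristic functions is given by:

$$\exp\!\big(it\mu_1 + it\mu_2 - |c_1 t|^{\alpha} - |c_2 t|^{\alpha} + i\beta_1|c_1 t|^{\alpha}\operatorname{sgn}(t)\,\Phi + i\beta_2|c_2 t|^{\alpha}\operatorname{sgn}(t)\,\Phi\big)$$

Since Φ is not a function of the μ, c or β variables, it follows that these parameters for the convolved function are given by:

$$\mu = \mu_1 + \mu_2, \qquad c = \left(c_1^{\alpha} + c_2^{\alpha}\right)^{1/\alpha}, \qquad \beta = \frac{\beta_1 c_1^{\alpha} + \beta_2 c_2^{\alpha}}{c_1^{\alpha} + c_2^{\alpha}}.$$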

In each case, it can be shown that the resulting parameters lie within the required intervals for a stable distribution.
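The combination rule can be verified numerically by multiplying two characteristic functions and comparing with the characteristic function of the combined parameters. The sketch assumes the first parametrization and the rules μ = μ₁ + μ₂, c = (c₁^α + c₂^α)^(1/α), β = (β₁c₁^α + β₂c₂^α)/(c₁^α + c₂^α); `stable_cf` and `combine` are hypothetical helper names.

```python
import cmath
import math

def stable_cf(t, alpha, beta, c, mu):
    """Characteristic function of a stable law (first parametrization)."""
    if t == 0:
        return complex(1.0, 0.0)
    sgn = math.copysign(1.0, t)
    phi = math.tan(math.pi * alpha / 2) if alpha != 1 else -(2 / math.pi) * math.log(abs(t))
    return cmath.exp(1j * t * mu - abs(c * t) ** alpha * (1 - 1j * beta * sgn * phi))

def combine(p1, p2):
    """Parameters (alpha, beta, c, mu) of the sum of two independent stable variables."""
    a1, b1, c1, m1 = p1
    a2, b2, c2, m2 = p2
    if a1 != a2:
        raise ValueError("closure under convolution requires a common alpha")
    s = c1 ** a1 + c2 ** a1
    return a1, (b1 * c1 ** a1 + b2 * c2 ** a1) / s, s ** (1 / a1), m1 + m2

if __name__ == "__main__":
    p1, p2 = (1.5, 0.5, 1.0, 0.2), (1.5, -0.8, 2.0, -1.0)
    p = combine(p1, p2)
    t = 0.7
    print(p, stable_cf(t, *p1) * stable_cf(t, *p2), stable_cf(t, *p))
```

The combined β is a weighted average of β₁ and β₂, so it stays in [−1, 1], and the combined c is positive, as the text asserts.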

The Generalized Central Limit Theorem

The Generalized Central Limit Theorem (GCLT) was an effort of multiple mathematicians (Bernstein, Lindeberg, Lévy, Feller, Kolmogorov, and others) over the period from 1920 to 1937.[13] The first published complete proof (in French) of the GCLT was in 1937 by Paul Lévy.[14] An English language version of the complete proof of the GCLT is available in the translation of Gnedenko and Kolmogorov's 1954 book.[15]

The statement of the GCLT is as follows:[16]

- A non-degenerate random variable Z is α-stable for some 0 < α ≤ 2 if and only if there is an independent, identically distributed sequence of random variables X₁, X₂, X₃, ... and constants aₙ > 0, bₙ ∈ ℝ with

- aₙ (X₁ + ... + Xₙ) − bₙ → Z.

- Here → means the sequence of random variable sums converges in distribution; i.e., the corresponding distributions satisfy Fₙ(y) → F(y) at all continuity points of F.

In other words, if sums of independent, identically distributed random variables converge in distribution to some Z, then Z must be a stable distribution.

A generalized central limit theorem

General reference: [17] by Gnedenko.

Another important property of stable distributions is the role that they play in a generalized central limit theorem. The central limit theorem states that the sum of a number of independent and identically distributed (i.i.d.) random variables with finite non-zero variances will tend to a normal distribution as the number of variables grows.

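A generalization due to Gnedenko and Kolmogorov states that the sum of a number of random variables with symmetric distributions having power-law tails (Paretian tails), decreasing as $|x|^{-\alpha-1}$ where 0 < α ≤ 2 (and therefore having infinite variance), will tend to a stable distribution f(x; α, 0, c, 0) as the number of summands grows.[18] If α > 2 then the sum converges to a stable distribution with stability parameter equal to 2, i.e. a Gaussian distribution.[19]

There are other possibilities as well. For example, if the characteristic function of the random variable is asymptotic to $1 + a|t|^{\alpha}\ln|t|$ for small t (positive or negative), then we may ask how t varies with n when the value of the characteristic function for the sum of n such random variables equals a given value u:

$$\varphi_{\text{sum}}(t) = \varphi(t)^{n} = u.$$

Assuming for the moment that t → 0, we take the limit of the above as n → ∞:

$$\ln u = \lim_{n\to\infty} n\ln\varphi(t) = \lim_{n\to\infty} n\,a\,|t|^{\alpha}\ln|t|.$$

Therefore:

$$\ln(-\ln u) = \lim_{n\to\infty}\big\{\ln(na) + \alpha\ln|t| + \ln(-\ln|t|)\big\}.$$

This shows that ln|t| is asymptotic to $-\tfrac{1}{\alpha}\ln n$, so using the previous equation we have

$$|t| \sim \left(\frac{-\alpha\ln u}{n\,a\,\ln n}\right)^{1/\alpha}.$$

This implies that the sum divided by

$$\left(\frac{n\,a\,\ln n}{\alpha}\right)^{1/\alpha}$$

has a characteristic function whose value at some t′ goes to u (as n increases) when $|t'| = (-\ln u)^{1/\alpha}$. In other words, the characteristic function converges pointwise to $\exp(-|t'|^{\alpha})$ and therefore by Lévy's continuity theorem the sum divided by

$$\left(\frac{n\,a\,\ln n}{\alpha}\right)^{1/\alpha}$$

converges in distribution to the symmetric alpha-stable distribution with stability parameter α and scale parameter 1.

This can be applied to a random variable whose tails decrease as $|x|^{-3}$. This random variable has a mean but the variance is infinite. Let us take the following distribution:

$$f(x) = \begin{cases} \dfrac{1}{3} & |x| \le 1, \\[2ex] \dfrac{1}{3}\,|x|^{-3} & |x| > 1. \end{cases}$$

We can write this as

$$f(x) = \int_{1}^{\infty} \frac{2}{w^{4}}\,h\!\left(\frac{x}{w}\right)dw$$

where

$$h(x) = \begin{cases} \dfrac{1}{2} & |x| \le 1, \\[1ex] 0 & |x| > 1. \end{cases}$$

We want to find the leading terms of the asymptotic expansion of the characteristic function. The characteristic function of the probability distribution $\tfrac{1}{w}h\!\left(\tfrac{x}{w}\right)$ is $\sin(tw)/(tw)$, so the characteristic function for f(x) is

$$\varphi(t) = \int_{1}^{\infty} \frac{2\sin(tw)}{t\,w^{4}}\,dw$$

and we can calculate:

$$\begin{aligned} \varphi(t) - 1 &= \int_{1}^{\infty} \frac{2}{w^{3}}\left[\frac{\sin(tw)}{tw} - 1\right]dw \\ &= \int_{1}^{1/|t|} \frac{2}{w^{3}}\left[\frac{\sin(tw)}{tw} - 1 + \frac{t^{2}w^{2}}{6}\right]dw - \int_{1}^{1/|t|} \frac{t^{2}}{3w}\,dw + \int_{1/|t|}^{\infty} \frac{2}{w^{3}}\left[\frac{\sin(tw)}{tw} - 1\right]dw \\ &= C_1 t^{2} + \frac{t^{2}}{3}\ln|t| + C_2 t^{2} + o(t^{2}), \end{aligned}$$

where $C_1$ and $C_2$ are constants. Therefore,

$$\varphi(t) \sim 1 + \frac{1}{3}\,t^{2}\ln|t|,$$

and according to what was said above (and the fact that the variance of f(x; 2, 0, 1, 0) is 2), the sum of n instances of this random variable, divided by $\sqrt{n(\ln n)/3}$, will converge in distribution to a Gaussian distribution with variance 1. But the variance at any particular n will still be infinite. Note that the width of the limiting distribution grows faster than in the case where the random variable has a finite variance (in which case the width grows as the square root of n). The average, obtained by dividing the sum by n, tends toward a Gaussian whose width approaches zero as n increases, in accordance with the law of large numbers.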
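The example can be explored by simulation. The sketch below assumes the density f(x) = 1/3 for |x| ≤ 1 and |x|⁻³/3 for |x| > 1, sampled through its mixture form (a uniform variable on [−w, w] with w drawn from the density 2/w³ on [1, ∞)), and normalizes sums by √(n ln n / 3). The function names are illustrative. Convergence to the Gaussian limit is only logarithmic in n, so the normalized sums should be read qualitatively.

```python
import math
import random

def sample_f(rng):
    """Draw from f via the mixture: w has density 2/w^3 on [1, inf), x ~ Uniform(-w, w)."""
    w = (1.0 - rng.random()) ** -0.5  # inverse-CDF sampling, since P(w > x) = x^-2
    return w * rng.uniform(-1.0, 1.0)

def normalized_sum(n, rng):
    """Sum of n draws divided by sqrt(n ln n / 3), the normalization derived above."""
    return sum(sample_f(rng) for _ in range(n)) / math.sqrt(n * math.log(n) / 3)

if __name__ == "__main__":
    rng = random.Random(1)
    draws = [sample_f(rng) for _ in range(100_000)]
    print(sum(abs(x) <= 1 for x in draws) / len(draws))  # should be near 2/3
    print([round(normalized_sum(2_000, rng), 3) for _ in range(5)])
```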

Special cases

There is no general analytic solution for the form of f(x). There are, however, three special cases which can be expressed in terms of elementary functions, as can be seen by inspection of the characteristic function:[7][9][21]

- For α = 2 the distribution reduces to a Gaussian distribution with variance σ² = 2c² and mean μ; the skewness parameter β has no effect.

- For α = 1 and β = 0 the distribution reduces to a Cauchy distribution with scale parameter c and shift parameter μ.

- For α = 1/2 and β = 1 the distribution reduces to a Lévy distribution with scale parameter c and shift parameter μ.

Note that the above three distributions are also connected, in the following way: A standard Cauchy random variable can be viewed as a mixture of Gaussian random variables (all with mean zero), with the variance being drawn from a standard Lévy distribution. And in fact this is a special case of a more general theorem (See p. 59 of [22]) which allows any symmetric alpha-stable distribution to be viewed in this way (with the alpha parameter of the mixture distribution equal to twice the alpha parameter of the mixing distribution—and the beta parameter of the mixing distribution always equal to one).
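The Cauchy case of this mixture statement can be illustrated by simulation. The sketch assumes two standard facts: 1/Z² is standard Lévy distributed when Z is standard normal, and a standard Cauchy variable X satisfies P(|X| ≤ 1) = 1/2. The function name is hypothetical.

```python
import math
import random
import statistics

def cauchy_via_mixture(rng):
    """Gaussian with mean zero whose variance V is drawn from a standard Levy law."""
    z = rng.gauss(0.0, 1.0)
    while z == 0.0:  # avoid division by zero in the (practically impossible) case z == 0
        z = rng.gauss(0.0, 1.0)
    v = 1.0 / (z * z)  # standard Levy variate (scale 1, location 0)
    return math.sqrt(v) * rng.gauss(0.0, 1.0)

if __name__ == "__main__":
    rng = random.Random(7)
    xs = [cauchy_via_mixture(rng) for _ in range(100_000)]
    # For a standard Cauchy variable the median of |X| equals 1.
    print(statistics.median(abs(x) for x in xs))
```

Quantiles are used for the check because the Cauchy distribution has no mean or variance to compare.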

A general closed form expression for stable PDFs with rational values of α is available in terms of Meijer G-functions.[23] Fox H-functions can also be used to express the stable probability density functions. For simple rational numbers, the closed form expression is often in terms of less complicated special functions, and several such expressions are available. In the table below, PDFs expressible by elementary functions are indicated by an E and those that are expressible by special functions are indicated by an s.[22]

| β \ α | 1/3 | 1/2 | 2/3 | 1 | 4/3 | 3/2 | 2 |
|---|---|---|---|---|---|---|---|
| 0 | s | s | s | E | s | s | E |
| 1 | s | E | s | L | s | | |

Some of the special cases are known by particular names:

- For α = 1 and β = 1, the distribution is a Landau distribution (L) which has a specific usage in physics under this name.

- For α = 3/2 and β = 0 the distribution reduces to a Holtsmark distribution with scale parameter c and shift parameter μ.

Also, in the limit as c approaches zero or as α approaches zero the distribution will approach a Dirac delta function δ(x − μ).

Series representation

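The stable distribution can be restated as the real part of a simpler integral:[24]

$$f(x;\alpha,\beta,c,\mu) = \frac{1}{\pi}\,\Re\!\left[\int_{0}^{\infty} e^{it(x-\mu)}\,e^{-(ct)^{\alpha}(1 - i\beta\Phi)}\,dt\right].$$

Expressing the second exponential as a Taylor series, we have:

$$f(x;\alpha,\beta,c,\mu) = \frac{1}{\pi}\,\Re\!\left[\int_{0}^{\infty} e^{it(x-\mu)} \sum_{n=0}^{\infty} \frac{(-q\,t^{\alpha})^{n}}{n!}\,dt\right]$$

where $q = c^{\alpha}(1 - i\beta\Phi)$. Reversing the order of integration and summation, and carrying out the integration yields:

$$f(x;\alpha,\beta,c,\mu) = \frac{1}{\pi}\,\Re\!\left[\sum_{n=1}^{\infty} \frac{(-q)^{n}}{n!}\left(\frac{i}{x-\mu}\right)^{\alpha n+1}\Gamma(\alpha n+1)\right]$$

which will be valid for x ≠ μ and will converge for appropriate values of the parameters. (Note that the n = 0 term which yields a delta function in x − μ has therefore been dropped.) Expressing the first exponential as a series will yield another series in positive powers of x − μ which is generally less useful.

For the one-sided stable distribution, the above series expansion needs to be modified, since $q = \exp(-i\alpha\pi/2)$ and $q\,i^{\alpha} = 1$. There is no real part to sum. Instead, the integral of the characteristic function should be carried out on the negative axis, which yields:[25][11]

$$L_\alpha(x) = \frac{1}{\pi}\,\Re\!\left[\sum_{n=1}^{\infty} \frac{(-q)^{n}}{n!}\left(\frac{-i}{x}\right)^{\alpha n+1}\Gamma(\alpha n+1)\right] = \frac{1}{\pi}\sum_{n=1}^{\infty} \frac{-\sin\!\big(n(\alpha+1)\pi\big)}{n!}\left(\frac{1}{x}\right)^{\alpha n+1}\Gamma(\alpha n+1).$$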
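The one-sided series can be checked against the one case with an elementary closed form. The sketch below assumes the series L_α(x) = (1/π) Σₙ₌₁ [−sin(n(α + 1)π)/n!] Γ(αn + 1) x^(−(αn+1)) and compares it, for α = 1/2, with the Lévy density of scale c = cos(π/4)² = 1/2, that is exp(−1/(4x)) / (2√π x^(3/2)). The function names and the truncation at 60 terms are illustrative choices.

```python
import math

def one_sided_series(x, alpha, terms=60):
    """Truncated series for the standard one-sided stable density (alpha < 1, x > 0)."""
    total = 0.0
    for n in range(1, terms + 1):
        total += (-math.sin(n * (alpha + 1) * math.pi) / math.factorial(n)
                  * math.gamma(alpha * n + 1) * x ** (-(alpha * n + 1)))
    return total / math.pi

def levy_half(x):
    """Closed form of L_{1/2}(x): Levy density with scale 1/2 and location 0."""
    return math.exp(-1.0 / (4.0 * x)) / (2.0 * math.sqrt(math.pi) * x ** 1.5)

if __name__ == "__main__":
    for x in (0.5, 1.0, 2.0, 10.0):
        print(x, one_sided_series(x, 0.5), levy_half(x))
```

The series is in inverse powers of x, so it is best suited to moderate and large x; for very small x the terms grow before they decay and cancellation degrades the result.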

Simulation of stable variables

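Simulating sequences of stable random variables is not straightforward, since there are no analytic expressions for the CDF or its inverse.[10][12] All standard approaches like the rejection or the inversion methods would require tedious computations. A much more elegant and efficient solution was proposed by Chambers, Mallows and Stuck (CMS),[26] who noticed that a certain integral formula[27] yielded the following algorithm:[28]

- generate a random variable U uniformly distributed on (−π/2, π/2) and an independent exponential random variable W with mean 1;

- for α ≠ 1 compute: $X = (1+\zeta^{2})^{\frac{1}{2\alpha}}\,\dfrac{\sin(\alpha(U+\xi))}{(\cos U)^{1/\alpha}}\left(\dfrac{\cos(U - \alpha(U+\xi))}{W}\right)^{\frac{1-\alpha}{\alpha}}$

- for α = 1 compute: $X = \dfrac{1}{\xi}\left\{\left(\dfrac{\pi}{2} + \beta U\right)\tan U - \beta\log\!\left(\dfrac{\tfrac{\pi}{2}\,W\cos U}{\tfrac{\pi}{2} + \beta U}\right)\right\}$, where $\zeta = -\beta\tan\dfrac{\pi\alpha}{2}$ and $\xi = \dfrac{1}{\alpha}\arctan(-\zeta)$ for α ≠ 1, $\xi = \dfrac{\pi}{2}$ for α = 1.

This algorithm yields a random variable $X \sim S_\alpha(\beta, 1, 0)$. For a detailed proof see [29].

Given the formulas for simulation of a standard stable random variable, we can easily simulate a stable random variable for all admissible values of the parameters α, c, β and μ using the following property. If $X \sim S_\alpha(\beta, 1, 0)$ then

$$Y = \begin{cases} cX + \mu & \alpha \neq 1, \\[1ex] cX + \dfrac{2}{\pi}\,\beta c\log c + \mu & \alpha = 1 \end{cases}$$

is $S_\alpha(\beta, c, \mu)$. For α = 2 (and β = 0) the CMS method reduces to the well-known Box–Muller transform for generating Gaussian random variables.[30] Many other approaches have been proposed in the literature, including application of Bergström and LePage series expansions, see [31] and [32], respectively. However, the CMS method is regarded as the fastest and the most accurate.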
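The algorithm above can be sketched in a few lines. The code below assumes the CMS formulas in the form commonly attributed to Weron, with ζ = −β tan(πα/2), ξ = arctan(−ζ)/α for α ≠ 1 and ξ = π/2 for α = 1, followed by the scale and location transform Y = cX + μ (plus the extra term (2/π)βc log c when α = 1). Function names are hypothetical, and this is an illustration rather than a tuned implementation.

```python
import math
import random

def rstable_standard(alpha, beta, rng):
    """One draw from S_alpha(beta, 1, 0) by the Chambers-Mallows-Stuck method."""
    u = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    while w == 0.0:  # guard against a zero exponential draw
        w = rng.expovariate(1.0)
    if alpha != 1:
        zeta = -beta * math.tan(math.pi * alpha / 2)
        xi = math.atan(-zeta) / alpha
        return ((1 + zeta * zeta) ** (1 / (2 * alpha))
                * math.sin(alpha * (u + xi)) / math.cos(u) ** (1 / alpha)
                * (math.cos(u - alpha * (u + xi)) / w) ** ((1 - alpha) / alpha))
    xi = math.pi / 2
    return (1 / xi) * ((math.pi / 2 + beta * u) * math.tan(u)
                       - beta * math.log((math.pi / 2) * w * math.cos(u) / (math.pi / 2 + beta * u)))

def rstable(alpha, beta, c, mu, rng):
    """One draw from S_alpha(beta, c, mu) via the scale and location transform."""
    x = rstable_standard(alpha, beta, rng)
    if alpha != 1:
        return c * x + mu
    return c * x + (2 / math.pi) * beta * c * math.log(c) + mu

if __name__ == "__main__":
    rng = random.Random(42)
    gauss = [rstable(2.0, 0.0, 1.0, 0.0, rng) for _ in range(50_000)]
    print(sum(x * x for x in gauss) / len(gauss))  # near 2 = 2c^2 for alpha = 2, c = 1
```

For α = 2 and β = 0 the draw simplifies to 2 sin(U) √W, which is the Box–Muller form with variance 2, and for α = 1, β = 0 it reduces to tan(U), a standard Cauchy variate.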

Applications

Stable distributions owe their importance in both theory and practice to the generalization of the central limit theorem to random variables without second (and possibly first) order moments and the accompanying self-similarity of the stable family. It was the seeming departure from normality along with the demand for a self-similar model for financial data (i.e. the shape of the distribution for yearly asset price changes should resemble that of the constituent daily or monthly price changes) that led Benoît Mandelbrot to propose that cotton prices follow an alpha-stable distribution with α equal to 1.7.[6] Lévy distributions are frequently found in analysis of critical behavior and financial data.[9][33]

They are also found in spectroscopy as a general expression for a quasistatically pressure broadened spectral line.[24]

The Lévy distribution of solar flare waiting time events (time between flare events) was demonstrated for CGRO BATSE hard x-ray solar flares in December 2001. Analysis of the Lévy statistical signature revealed that two different memory signatures were evident; one related to the solar cycle and the second whose origin appears to be associated with a localized or combination of localized solar active region effects.[34]

Other analytic cases

A number of cases of analytically expressible stable distributions are known. Let the stable distribution be expressed by f(x; α, β, c, μ); then we know:

- The Cauchy distribution is given by f(x; 1, 0, 1, 0).

- The Lévy distribution is given by f(x; 1/2, 1, 1, 0).

- The normal distribution is given by f(x; 2, 0, 1, 0).

- Let be a Lommel function, then:[35]

- Let and denote the Fresnel integrals then:[36]

- Let be the modified Bessel function of the second kind then:[36]

- If the denote the hypergeometric functions then:[35] with the latter being the Holtsmark distribution.

- Let be a Whittaker function, then:[37][38][39]

Notes

- The STABLE program for Windows is available from John Nolan's stable webpage: http://www.robustanalysis.com/public/stable.html. It calculates the density (pdf), cumulative distribution function (cdf) and quantiles for a general stable distribution, and performs maximum likelihood estimation of stable parameters and some exploratory data analysis techniques for assessing the fit of a data set.

- libstable is a C implementation for the Stable distribution pdf, cdf, random number, quantile and fitting functions (along with a benchmark replication package and an R package).

- R Package 'stabledist' by Diethelm Wuertz, Martin Maechler and Rmetrics core team members. Computes stable density, probability, quantiles, and random numbers. Updated Sept. 12, 2016.

- Python implementation is located in scipy.stats.levy_stable in the SciPy package.

References

- Mandelbrot, B. (1960). "The Pareto–Lévy Law and the Distribution of Income". International Economic Review. 1 (2): 79–106. doi:10.2307/2525289. JSTOR 2525289.

- Lévy, Paul (1925). Calcul des probabilités. Paris: Gauthier-Villars. OCLC 1417531.

- Mandelbrot, B. (1961). "Stable Paretian Random Functions and the Multiplicative Variation of Income". Econometrica. 29 (4): 517–543. doi:10.2307/1911802. JSTOR 1911802.

- Mandelbrot, B. (1963). "The Variation of Certain Speculative Prices". The Journal of Business. 36 (4): 394–419. doi:10.1086/294632. JSTOR 2350970.

- Fama, Eugene F. (1963). "Mandelbrot and the Stable Paretian Hypothesis". The Journal of Business. 36 (4): 420–429. doi:10.1086/294633. JSTOR 2350971.

- Mandelbrot, B. (1963). "New methods in statistical economics". The Journal of Political Economy. 71 (5): 421–440. doi:10.1086/258792. S2CID 53004476.

- Nolan, John P. "Stable Distributions – Models for Heavy Tailed Data" (PDF). Retrieved 2009-02-21.

- Siegrist, Kyle. "Stable Distributions". www.randomservices.org. Retrieved 2018-10-18.

- Voit, Johannes (2005). Balian, R; Beiglböck, W; Grosse, H; Thirring, W (eds.). The Statistical Mechanics of Financial Markets – Springer. Texts and Monographs in Physics. Springer. doi:10.1007/b137351. ISBN 978-3-540-26285-5.

- Nolan, John P. (1997). "Numerical calculation of stable densities and distribution functions". Communications in Statistics. Stochastic Models. 13 (4): 759–774. doi:10.1080/15326349708807450. ISSN 0882-0287.

- Penson, K. A.; Górska, K. (2010-11-17). "Exact and Explicit Probability Densities for One-Sided Lévy Stable Distributions". Physical Review Letters. 105 (21): 210604. arXiv:1007.0193. Bibcode:2010PhRvL.105u0604P. doi:10.1103/PhysRevLett.105.210604. PMID 21231282. S2CID 27497684.

- Lihn, Stephen (2017). "A Theory of Asset Return and Volatility Under Stable Law and Stable Lambda Distribution". SSRN.

- Le Cam, L. (February 1986). "The Central Limit Theorem around 1935". Statistical Science. 1 (1): 78–91. JSTOR 2245503.

- Lévy, Paul (1937). Théorie de l'addition des variables aléatoires [Combination theory of unpredictable variables]. Paris: Gauthier-Villars.

- Gnedenko, Boris Vladimirovich; Kolmogorov, Andreĭ Nikolaevich; Doob, Joseph L.; Hsu, Pao-Lu (1968). Limit distributions for sums of independent random variables. Reading, MA: Addison-Wesley.

- Nolan, John P. (2020). Univariate stable distributions, Models for Heavy Tailed Data. Springer Series in Operations Research and Financial Engineering. Switzerland: Springer. doi:10.1007/978-3-030-52915-4. ISBN 978-3-030-52914-7. S2CID 226648987.

- Gnedenko, Boris V. (2020-06-30). "10: The Theory of Infinitely Divisible Distributions". Theory of Probability (6th ed.). CRC Press. ISBN 978-0-367-57931-9.

- Gnedenko, Boris Vladimirovich; Kolmogorov, Andreĭ Nikolaevich (1968). Limit Distributions for Sums of Independent Random Variables. Addison-Wesley.

- Uchaikin, Vladimir V.; Zolotarev, Vladimir M. (2011-09-08). Chance and Stability: Stable Distributions and their Applications. Walter de Gruyter. ISBN 978-3-11-093597-4.

- Araujo, Aloisio; Giné, Evarist (1980). "Chapter 2". The central limit theorem for real and Banach valued random variables. Wiley series in probability and mathematical statistics. New York: Wiley. ISBN 978-0-471-05304-0.

- Samorodnitsky, G.; Taqqu, M.S. (1994). Stable Non-Gaussian Random Processes: Stochastic Models with Infinite Variance. CRC Press. ISBN 9780412051715.

- Lee, Wai Ha (2010). Continuous and discrete properties of stochastic processes. PhD thesis, University of Nottingham.

- Zolotarev, V. (1995). "On Representation of Densities of Stable Laws by Special Functions". Theory of Probability and Its Applications. 39 (2): 354–362. doi:10.1137/1139025. ISSN 0040-585X.

- Peach, G. (1981). "Theory of the pressure broadening and shift of spectral lines". Advances in Physics. 30 (3): 367–474. Bibcode:1981AdPhy..30..367P. doi:10.1080/00018738100101467. ISSN 0001-8732.

- Pollard, Howard (1946). "Representation of e^{-x^{\lambda}} As a Laplace Integral". Bull. Amer. Math. Soc. 52: 908. doi:10.1090/S0002-9904-1946-08672-3.

- Chambers, J. M.; Mallows, C. L.; Stuck, B. W. (1976). "A Method for Simulating Stable Random Variables". Journal of the American Statistical Association. 71 (354): 340–344. doi:10.1080/01621459.1976.10480344. ISSN 0162-1459.

- Zolotarev, V. M. (1986). One-Dimensional Stable Distributions. American Mathematical Society. ISBN 978-0-8218-4519-6.

- Misiorek, Adam; Weron, Rafał (2012). Gentle, James E.; Härdle, Wolfgang Karl; Mori, Yuichi (eds.). Heavy-Tailed Distributions in VaR Calculations (PDF). Springer Handbooks of Computational Statistics. Springer Berlin Heidelberg. pp. 1025–1059. doi:10.1007/978-3-642-21551-3_34. ISBN 978-3-642-21550-6.

- Weron, Rafał (1996). "On the Chambers-Mallows-Stuck method for simulating skewed stable random variables". Statistics & Probability Letters. 28 (2): 165–171. CiteSeerX 10.1.1.46.3280. doi:10.1016/0167-7152(95)00113-1. S2CID 9500064.

- Janicki, Aleksander; Weron, Aleksander (1994). Simulation and Chaotic Behavior of Alpha-stable Stochastic Processes. CRC Press. ISBN 9780824788827.

- Mantegna, Rosario Nunzio (1994). "Fast, accurate algorithm for numerical simulation of Lévy stable stochastic processes". Physical Review E. 49 (5): 4677–4683. Bibcode:1994PhRvE..49.4677M. doi:10.1103/PhysRevE.49.4677. PMID 9961762.

- Janicki, Aleksander; Kokoszka, Piotr (1992). "Computer investigation of the Rate of Convergence of Lepage Type Series to α-Stable Random Variables". Statistics. 23 (4): 365–373. doi:10.1080/02331889208802383. ISSN 0233-1888.

- Rachev, Svetlozar T.; Mittnik, Stefan (2000). Stable Paretian Models in Finance. Wiley. ISBN 978-0-471-95314-2.

- Leddon, D., A statistical Study of Hard X-Ray Solar Flares

- Garoni, T. M.; Frankel, N. E. (2002). "Lévy flights: Exact results and asymptotics beyond all orders". Journal of Mathematical Physics. 43 (5): 2670–2689. Bibcode:2002JMP....43.2670G. doi:10.1063/1.1467095.

- Hopcraft, K. I.; Jakeman, E.; Tanner, R. M. J. (1999). "Lévy random walks with fluctuating step number and multiscale behavior". Physical Review E. 60 (5): 5327–5343. Bibcode:1999PhRvE..60.5327H. doi:10.1103/physreve.60.5327. PMID 11970402.

- Uchaikin, V. V.; Zolotarev, V. M. (1999). "Chance And Stability – Stable Distributions And Their Applications". VSP.

- Zolotarev, V. M. (1961). "Expression of the density of a stable distribution with exponent alpha greater than one by means of a frequency with exponent 1/alpha". Selected Translations in Mathematical Statistics and Probability (Translated from the Russian Article: Dokl. Akad. Nauk SSSR. 98, 735–738 (1954)). 1: 163–167.

- Zaliapin, I. V.; Kagan, Y. Y.; Schoenberg, F. P. (2005). "Approximating the Distribution of Pareto Sums". Pure and Applied Geophysics. 162 (6): 1187–1228. Bibcode:2005PApGe.162.1187Z. doi:10.1007/s00024-004-2666-3. S2CID 18754585.